Java Concurrency Best Practices: Mastering Multithreaded Code

Java concurrency best practices are essential for building efficient and reliable multithreaded applications. In the realm of Java programming, concurrency is a powerful tool that allows us to leverage multiple threads to perform tasks simultaneously, leading to significant performance gains and responsiveness.

However, concurrency comes with its own set of challenges, such as race conditions, deadlocks, and data corruption. This is where a solid understanding of best practices becomes crucial.

This article delves into the core principles of Java concurrency, exploring techniques for thread synchronization, concurrent data structures, and thread management. We’ll cover best practices for writing thread-safe code, avoiding common pitfalls, and optimizing performance. Whether you’re a seasoned Java developer or just starting out, this guide will equip you with the knowledge to confidently navigate the complexities of concurrent programming.

Understanding Concurrency in Java

Concurrency is a fundamental concept in modern software development, enabling applications to perform multiple tasks simultaneously, improving responsiveness and efficiency. Java provides robust support for concurrency through its threading model, allowing developers to leverage the power of multi-core processors. Understanding the core concepts of concurrency in Java is crucial for building efficient, responsive, and scalable applications.


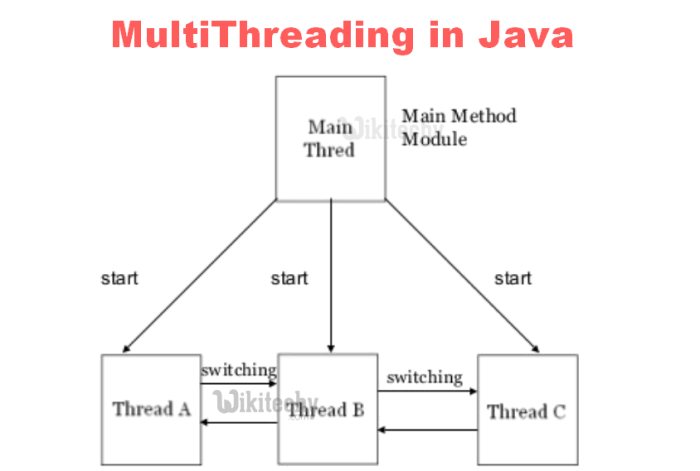

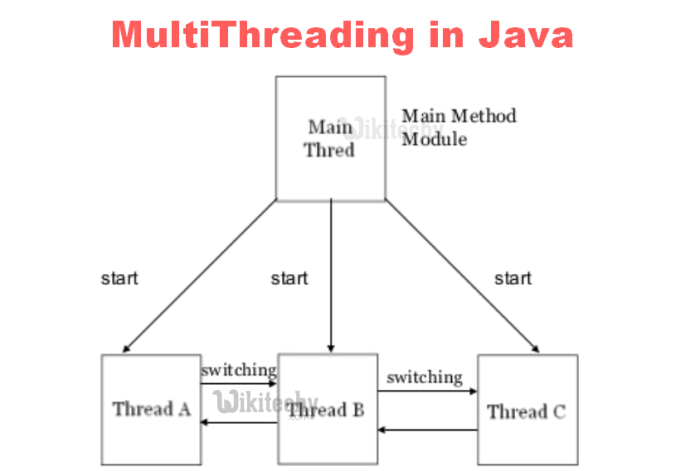

Threads and Processes

Threads and processes are the fundamental building blocks of concurrency. They represent units of execution that can run independently. A process is an independent execution environment that has its own memory space, resources, and address space. Processes are isolated from each other, ensuring that changes made within one process do not affect others. A thread is a lightweight unit of execution that runs within a process.

Threads share the same memory space and resources as the process they belong to, allowing them to communicate and share data efficiently. Multiple threads can run concurrently within a single process, sharing the process’s resources.

The Java Memory Model

The Java Memory Model (JMM) defines the rules for how threads interact through memory. It specifies when a write made by one thread is guaranteed to be visible to a read in another, so that shared data can be kept consistent when multiple threads access and modify it. The JMM is usually explained in terms of the following key concepts:

- Main Memory: All threads share a common main memory where data is stored.


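- Thread-Local Memory: Conceptually, each thread has its own working memory (in practice, CPU registers and caches) where it may keep copies of data from main memory for faster access.

- Synchronization: Mechanisms such as locks, `volatile` fields, and other synchronization primitives ensure that values in a thread’s working memory are written back to, and refreshed from, main memory at well-defined points.

The JMM guarantees a consistent view of shared data only between actions that are ordered by such synchronization (a happens-before relationship). Without it, a thread may see stale values, and operations performed by one thread may appear to other threads in a different order than the source code suggests.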

Developers need to be aware of the JMM’s rules and use appropriate synchronization techniques to avoid data corruption and race conditions.

The Thread Class

The `Thread` class in Java provides the foundation for creating and managing threads. It encapsulates the functionality for thread execution and management.

Creating Threads

There are two main ways to create threads in Java:

- Extending the `Thread` class: This involves creating a new class that extends the `Thread` class and overriding the `run()` method to define the thread’s execution logic.

- Implementing the `Runnable` interface: This involves creating a new class that implements the `Runnable` interface and defining the thread’s execution logic within the `run()` method.
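The two approaches above can be sketched as follows. The class names (`GreeterThread`, `GreeterTask`, `ThreadCreationDemo`) are made up for illustration. Because `Runnable` is a functional interface, a lambda works as well.

```java
// Approach 1: subclass Thread and override run().
class GreeterThread extends Thread {
    @Override
    public void run() {
        System.out.println("Hello from " + getName());
    }
}

// Approach 2: implement Runnable and hand the task to a Thread.
class GreeterTask implements Runnable {
    @Override
    public void run() {
        System.out.println("Hello from " + Thread.currentThread().getName());
    }
}

class ThreadCreationDemo {
    public static void main(String[] args) throws InterruptedException {
        Thread a = new GreeterThread();
        Thread b = new Thread(new GreeterTask());
        // Runnable has a single abstract method, so a lambda can stand in for it.
        Thread c = new Thread(() -> System.out.println("Hello from a lambda"));

        a.start();
        b.start();
        c.start();

        // Wait for all three threads before main returns.
        a.join();
        b.join();
        c.join();
    }
}
```

Implementing `Runnable` is often the more flexible choice, since the task stays independent of how it is executed and the class remains free to extend something else.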

Starting and Managing Threads

Once a thread object is created, it can be started using the `start()` method. This method creates a new thread of execution and schedules it to run on the Java Virtual Machine (JVM). The `run()` method contains the thread’s execution logic; when `start()` is called, the JVM invokes `run()` on the new thread. Calling `run()` directly executes it on the calling thread instead. The `Thread` class provides various methods for managing threads, including:

- `sleep()`: Suspends the current thread for a specified duration.

- `join()`: Causes the calling thread to wait until the thread it is called on has completed its execution.


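- `interrupt()`: Sets the thread’s interrupt status. If the thread is blocked in `sleep()`, `wait()`, or `join()`, it receives an `InterruptedException`.

- `yield()`: Hints to the scheduler that the current thread is willing to give up the processor so other threads can run. The scheduler is free to ignore the hint.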
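As a rough illustration of `start()`, `sleep()`, `interrupt()`, and `join()` working together, the sketch below starts a worker that sleeps, interrupts it, and then waits for it to finish. `ThreadLifecycleDemo` and its method name are hypothetical. The latch is only there so the interrupt is sent after the worker is known to be running.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.atomic.AtomicBoolean;

class ThreadLifecycleDemo {
    // Returns true if the worker observed the interruption.
    static boolean interruptSleepingWorker() throws InterruptedException {
        AtomicBoolean sawInterrupt = new AtomicBoolean(false);
        CountDownLatch started = new CountDownLatch(1);

        Thread worker = new Thread(() -> {
            started.countDown();              // tell the caller we are running
            try {
                Thread.sleep(10_000);         // long enough that only an interrupt ends it early
            } catch (InterruptedException e) {
                sawInterrupt.set(true);       // sleep() was cut short by interrupt()
            }
        }, "worker");

        worker.start();        // run() executes on the new thread
        started.await();       // wait until the worker has begun
        worker.interrupt();    // sets the interrupt status; sleep() throws
        worker.join();         // block until the worker has finished
        return sawInterrupt.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("worker interrupted: " + interruptSleepingWorker());
    }
}
```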

Thread Synchronization

Thread synchronization is crucial for ensuring data consistency in concurrent environments where multiple threads access and modify shared data. Without proper synchronization, race conditions can occur, leading to data corruption and unpredictable program behavior.

Race Conditions

A race condition occurs when multiple threads access and modify shared data concurrently, resulting in an unexpected outcome. This can happen when the order of operations performed by different threads is not synchronized, leading to data inconsistencies.
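A minimal sketch of such a lost-update race, assuming a hypothetical `RaceConditionDemo` class: several threads increment two counters, one without any protection and one inside a `synchronized` block. The unprotected total can come out lower than expected and varies from run to run; the protected total should always be exact.

```java
class RaceConditionDemo {
    private int unsafeCount = 0;                 // updated with no synchronization
    private int safeCount = 0;                   // updated only while holding 'lock'
    private final Object lock = new Object();

    void unsafeIncrement() {
        unsafeCount++;                           // read, add, write: three steps that can interleave
    }

    void safeIncrement() {
        synchronized (lock) {
            safeCount++;
        }
    }

    // Returns { unsafe total, safe total } after all threads have finished.
    static int[] run(int threads, int incrementsPerThread) throws InterruptedException {
        RaceConditionDemo demo = new RaceConditionDemo();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < incrementsPerThread; j++) {
                    demo.unsafeIncrement();
                    demo.safeIncrement();
                }
            });
            workers[i].start();
        }
        for (Thread w : workers) {
            w.join();                            // join makes the workers' writes visible here
        }
        return new int[] { demo.unsafeCount, demo.safeCount };
    }

    public static void main(String[] args) throws InterruptedException {
        int[] totals = run(4, 100_000);
        System.out.println("unsafe total: " + totals[0] + ", safe total: " + totals[1]);
    }
}
```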

Synchronization Techniques

Java provides various synchronization techniques to prevent race conditions and ensure data consistency:

- Synchronized Blocks: Synchronized blocks use the `synchronized` keyword to create critical sections of code that only one thread at a time can execute while holding a given lock. This ensures that only one thread can modify shared data within the synchronized block, preventing race conditions.

- Synchronized Methods: Similar to synchronized blocks, synchronized methods ensure that only one thread can execute the method on a given object at a time. This is useful for methods that access and modify shared data.

- Locks: Java provides more advanced synchronization mechanisms such as the `Lock` interface in `java.util.concurrent.locks`, which offers finer-grained control over access to shared resources, including timed and interruptible lock acquisition. Separate locks can protect separate sections of code or objects, allowing multiple threads to work on different parts of the program concurrently while ensuring data consistency.
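To make the explicit-lock option concrete, here is a small sketch using `ReentrantLock` from `java.util.concurrent.locks`. The `BankAccount` class and its methods are invented for this example. The `tryLock` call with a timeout shows one kind of control that `synchronized` does not offer.

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.ReentrantLock;

class BankAccount {
    private final ReentrantLock lock = new ReentrantLock();
    private long balance;

    BankAccount(long initialBalance) {
        this.balance = initialBalance;
    }

    void deposit(long amount) {
        lock.lock();
        try {
            balance += amount;
        } finally {
            lock.unlock();               // always release in finally
        }
    }

    // Gives up (returns false) if the lock cannot be acquired within the
    // timeout, or if the balance is too low.
    boolean tryWithdraw(long amount, long timeoutMillis) throws InterruptedException {
        if (!lock.tryLock(timeoutMillis, TimeUnit.MILLISECONDS)) {
            return false;
        }
        try {
            if (balance < amount) {
                return false;
            }
            balance -= amount;
            return true;
        } finally {
            lock.unlock();
        }
    }

    long balance() {
        lock.lock();
        try {
            return balance;
        } finally {
            lock.unlock();
        }
    }
}
```

The `lock()` / `try` / `finally` / `unlock()` shape matters: unlike a `synchronized` block, an explicit lock is not released automatically when an exception is thrown.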

Importance of Thread Synchronization

Thread synchronization is crucial for maintaining data integrity and ensuring predictable behavior in concurrent applications. It helps to prevent race conditions and ensures that shared data is accessed and modified in a consistent and controlled manner. By using appropriate synchronization techniques, developers can build reliable and scalable concurrent applications.

Thread Synchronization Techniques

In the realm of multithreaded programming, synchronization techniques play a pivotal role in ensuring the integrity of shared resources and preventing race conditions. Java provides a robust set of mechanisms to manage concurrent access to data, allowing developers to build reliable and efficient applications.

Synchronized Blocks and Methods

Synchronized blocks and methods are fundamental synchronization mechanisms in Java. They provide a way to control access to shared resources by ensuring that only one thread can execute a critical section of code at a time.

- Synchronized Blocks: These blocks are defined using the `synchronized` keyword followed by a lock object. The lock object can be any object, and only the thread that holds its lock can execute the code within the synchronized block. Once the thread finishes executing the block, it releases the lock, allowing other threads to acquire it.

- Synchronized Methods: Similar to synchronized blocks, synchronized instance methods acquire a lock on the object instance the method is invoked on (static synchronized methods lock the `Class` object instead). Any thread attempting to invoke a synchronized method on the same object instance must wait until the lock is released. This ensures that only one thread can execute the method at a time.

The `synchronized` keyword essentially provides a mechanism for mutual exclusion, guaranteeing that only one thread can access a shared resource at a given time.

Code Example:

```java
public class Counter {
    private int count = 0;

    // Only one thread at a time may run a synchronized method on a given Counter.
    public synchronized void increment() {
        count++;
    }

    public synchronized int getCount() {
        return count;
    }
}
```

In this example, the `increment()` and `getCount()` methods are synchronized, ensuring that only one thread can modify or read the `count` variable at a time. This prevents race conditions and maintains data consistency.

Volatile Variables

Volatile variables are a lighter-weight synchronization mechanism compared to synchronized blocks and methods. They provide a way to ensure that changes to a variable are immediately visible to all threads.

- Visibility Guarantee: When a variable is declared as `volatile`, the Java Virtual Machine (JVM) guarantees that a write to the variable is visible to any thread that subsequently reads it. Reads are never served from a stale thread-local copy, so all threads work with the latest value of the variable.

- No Atomic Operations: It’s important to note that `volatile` variables do not provide atomic operations. If a variable is modified by multiple threads, there’s still a possibility of race conditions if the modification involves multiple steps. For instance, incrementing a `volatile` variable is not atomic because it involves multiple operations (reading the current value, adding one, and writing the new value).
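The two points above can be sketched together. In this illustrative example (class names are assumptions), a `volatile` flag is used purely for visibility, to tell a worker loop to stop. The counting itself uses `AtomicInteger` from `java.util.concurrent.atomic`, since `volatile` alone would not make the increment atomic.

```java
import java.util.concurrent.atomic.AtomicInteger;

class StoppableWorker implements Runnable {
    // volatile: a write by stop() is guaranteed to be seen by the loop in run().
    private volatile boolean running = true;
    private final AtomicInteger iterations = new AtomicInteger();

    @Override
    public void run() {
        while (running) {
            iterations.incrementAndGet();
        }
    }

    void stop() {
        running = false;
    }

    int iterations() {
        return iterations.get();
    }
}

class AtomicCounterDemo {
    // incrementAndGet() is a single atomic read-modify-write, so no update is lost.
    static int countWithAtomic(int threads, int incrementsPerThread) throws InterruptedException {
        AtomicInteger counter = new AtomicInteger();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < incrementsPerThread; j++) {
                    counter.incrementAndGet();
                }
            });
            workers[i].start();
        }
        for (Thread w : workers) {
            w.join();
        }
        return counter.get();
    }
}
```

Without `volatile` on `running`, the JVM would be allowed to keep reading a cached value, and the loop might never observe the call to `stop()`.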

Comparison with Synchronized Blocks:

| Feature | Synchronized Blocks | Volatile Variables |
|---|---|---|
| Synchronization | Provides mutual exclusion, guaranteeing only one thread can access a shared resource at a time. | Ensures visibility of changes to the variable across threads. |
| Atomic Operations | Provides atomic operations, ensuring that operations on the shared resource are executed as a single, indivisible unit. | Does not provide atomic operations. |
| Overhead | Relatively higher overhead due to locking and unlocking mechanisms. | Lower overhead compared to synchronized blocks. |
| Use Cases | Ideal for protecting critical sections of code that modify shared resources. | Suitable for variables that are frequently read but less frequently written, and where atomic operations are not required. |

Semaphores

Semaphores are synchronization primitives that control access to a limited number of resources. They act as a gatekeeper, allowing a certain number of threads to pass through at a time.

- Semaphore Initialization: Semaphores are initialized with a specific number of permits, representing the maximum number of threads that can access the resource simultaneously.

- Acquire and Release Permits: Threads can acquire a permit from the semaphore using the `acquire()` method. If all permits are already acquired, the thread will block until a permit becomes available. When a thread is finished using the resource, it releases the permit using the `release()` method.

Semaphores are useful for controlling access to resources like database connections, network sockets, or print queues, where only a limited number of threads can access the resource at a time.
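As one possible sketch of this pattern, the hypothetical `ConnectionLimiter` below uses `java.util.concurrent.Semaphore` to cap how many threads run a piece of work at once. The `maxObserved` bookkeeping is not part of the pattern; it only makes the limit observable.

```java
import java.util.concurrent.Semaphore;
import java.util.concurrent.atomic.AtomicInteger;

class ConnectionLimiter {
    private final Semaphore permits;
    private final AtomicInteger active = new AtomicInteger();
    private final AtomicInteger maxObserved = new AtomicInteger();

    ConnectionLimiter(int maxConcurrent) {
        this.permits = new Semaphore(maxConcurrent);
    }

    // Runs 'work' while holding one permit; blocks if none is available.
    void useConnection(Runnable work) throws InterruptedException {
        permits.acquire();
        try {
            int now = active.incrementAndGet();
            maxObserved.accumulateAndGet(now, Math::max);   // record the highest concurrency seen
            work.run();
        } finally {
            active.decrementAndGet();
            permits.release();                              // hand the permit back even if work throws
        }
    }

    int maxObserved() {
        return maxObserved.get();
    }
}
```

With a limiter created as `new ConnectionLimiter(2)`, any number of threads may call `useConnection`, but no more than two should be inside `work.run()` at the same moment.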

Condition Variables

Condition variables are synchronization primitives that allow threads to wait for specific conditions to become true. They are often used in conjunction with locks to coordinate the actions of multiple threads.

- Signal and Wait: A thread waits on a condition variable by calling `await()` while holding the associated lock. The call atomically releases the lock and blocks until another thread calls `signal()` (or `signalAll()`) to indicate that the condition may have become true. On waking, the thread reacquires the lock before `await()` returns. Because wakeups can be spurious, the condition should be rechecked in a loop.

Condition variables are useful for scenarios where threads need to wait for specific events to occur, such as the availability of data or the completion of a task.
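A classic use is a bounded buffer. The sketch below (the `BoundedBuffer` class is written for this example) pairs a `ReentrantLock` with two `Condition` objects, one for "not empty" and one for "not full", and rechecks each condition in a `while` loop.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

class BoundedBuffer<T> {
    private final Deque<T> items = new ArrayDeque<>();
    private final int capacity;
    private final ReentrantLock lock = new ReentrantLock();
    private final Condition notEmpty = lock.newCondition();
    private final Condition notFull = lock.newCondition();

    BoundedBuffer(int capacity) {
        this.capacity = capacity;
    }

    void put(T item) throws InterruptedException {
        lock.lock();
        try {
            while (items.size() == capacity) {
                notFull.await();          // releases the lock while waiting
            }
            items.addLast(item);
            notEmpty.signal();            // wake one waiting consumer
        } finally {
            lock.unlock();
        }
    }

    T take() throws InterruptedException {
        lock.lock();
        try {
            while (items.isEmpty()) {
                notEmpty.await();
            }
            T item = items.removeFirst();
            notFull.signal();             // wake one waiting producer
            return item;
        } finally {
            lock.unlock();
        }
    }
}
```

In production code, `java.util.concurrent.ArrayBlockingQueue` already provides this behavior; writing it by hand is mainly useful for seeing how `await()` and `signal()` cooperate.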

Concurrent Collections

Concurrent collections in Java are specially designed data structures that provide thread-safe operations, allowing multiple threads to access and modify the collection concurrently without causing data corruption or unexpected behavior. These collections are essential for building robust and efficient multi-threaded applications.

Concurrent Collections in the Java Collections Framework

The Java Collections Framework (JCF) provides a range of concurrent collections that offer thread-safe alternatives to their non-concurrent counterparts. These collections are specifically designed to handle concurrent access from multiple threads without requiring explicit synchronization.

- ConcurrentHashMap: A thread-safe hash table implementation that provides efficient concurrent access to its entries. Early versions used a segmented locking strategy, allowing multiple threads to access different segments of the map concurrently. Since Java 8, the implementation locks individual hash bins and uses compare-and-swap operations, so threads working on different bins rarely contend.

- CopyOnWriteArrayList: A thread-safe list implementation that uses a copy-on-write strategy. When a modification is made, a new copy of the underlying array is created, so concurrent readers continue to see the array as it was and are never affected by a modification in progress. Read operations therefore proceed without any locks, but modifications can be expensive as they involve copying the entire list.

- BlockingQueue: An interface that represents a queue with blocking operations. When a thread calls `take()` on an empty queue, it blocks until an element is available. Similarly, when a thread calls `put()` on a full bounded queue, it blocks until space becomes available. Blocking queues are commonly used in producer-consumer scenarios and other thread synchronization patterns.

- ConcurrentLinkedQueue: A thread-safe, non-blocking, unbounded queue implementation that uses a linked list data structure. It provides fast insertion and removal operations, making it suitable for high-throughput scenarios.

- ConcurrentSkipListSet: A thread-safe sorted set implementation based on a skip list data structure. It offers efficient search, insertion, and removal operations, making it suitable for scenarios where sorted order is required.
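The producer-consumer use of a blocking queue can be sketched as follows. `ProducerConsumerDemo` and the `POISON_PILL` sentinel value are assumptions made for this example. The queue is a bounded `ArrayBlockingQueue`, so `put()` blocks when it is full and `take()` blocks when it is empty.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

class ProducerConsumerDemo {
    // Sentinel telling the consumer there is nothing more to read (illustrative convention).
    private static final int POISON_PILL = -1;

    static List<Integer> run(int itemCount) throws InterruptedException {
        BlockingQueue<Integer> queue = new ArrayBlockingQueue<>(2);   // capacity 2 forces blocking
        List<Integer> consumed = new ArrayList<>();                   // touched only by the consumer

        Thread producer = new Thread(() -> {
            try {
                for (int i = 0; i < itemCount; i++) {
                    queue.put(i);                                     // blocks while the queue is full
                }
                queue.put(POISON_PILL);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });

        Thread consumer = new Thread(() -> {
            try {
                for (int v = queue.take(); v != POISON_PILL; v = queue.take()) {
                    consumed.add(v);                                  // take() blocks while the queue is empty
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });

        producer.start();
        consumer.start();
        producer.join();
        consumer.join();       // after join, reading 'consumed' from this thread is safe
        return consumed;
    }
}
```

The small capacity is deliberate: it makes the producer wait for the consumer, which is the back-pressure a bounded queue is meant to provide.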

Advantages and Disadvantages of Concurrent Collections

Using concurrent collections offers several advantages over their non-concurrent counterparts, but it’s crucial to understand the trade-offs involved.

Advantages:

- Thread Safety: Individual operations on concurrent collections are thread-safe, which removes the need for explicit locks or semaphores in many cases. Compound actions that span several calls (such as check-then-act) still need an atomic method like `putIfAbsent()` or `compute()`, or external coordination. This simplifies multi-threaded programming and reduces the risk of data corruption.

- Improved Concurrency: Concurrent collections are designed for concurrent access, allowing multiple threads to operate on the collection simultaneously. This can significantly improve performance in multi-threaded applications.

- Simplified Code: Using concurrent collections reduces the need for manual synchronization, leading to cleaner and more maintainable code.

Disadvantages:

- Performance Overhead: Concurrent collections typically involve some overhead compared to their non-concurrent counterparts due to the underlying synchronization mechanisms. This overhead can be significant in scenarios with high contention or frequent modifications.

- Complexity: Understanding the behavior and nuances of concurrent collections can be more complex than working with non-concurrent collections. Developers need to be aware of the potential for race conditions and other concurrency-related issues.

- Limited Functionality: Some concurrent collections may not provide all the functionality of their non-concurrent counterparts. For example, the iterators of `CopyOnWriteArrayList` do not support `remove()`, and `ConcurrentHashMap` does not permit `null` keys or values.

Key Features and Use Cases of Concurrent Collections

| Collection Class | Key Features | Use Cases |
|---|---|---|
| `ConcurrentHashMap` | Thread-safe hash table, efficient concurrent access, fine-grained locking | Caching, data sharing between threads, high-performance maps |
| `CopyOnWriteArrayList` | Thread-safe list, copy-on-write strategy, read operations without locks | Scenarios where read operations are much more frequent than writes, maintaining consistency during iteration |
| `BlockingQueue` | Thread-safe queue, blocking operations, producer-consumer pattern | Asynchronous communication between threads, task queues, message queues |
| `ConcurrentLinkedQueue` | Thread-safe queue, linked list implementation, fast insertion and removal | High-throughput queues, event queues, task processing |
| `ConcurrentSkipListSet` | Thread-safe sorted set, skip list implementation, efficient search, insertion, and removal | Sorted data structures, priority queues, leader election |
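Two of the rows above can be illustrated briefly. In this sketch (the `ConcurrentCollectionsDemo` class and its method names are hypothetical), `ConcurrentHashMap.merge()` performs an atomic per-key update from several threads, and a `CopyOnWriteArrayList` is modified while being iterated, which its snapshot iterators tolerate.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CopyOnWriteArrayList;

class ConcurrentCollectionsDemo {
    // Every thread counts every word; merge() does the read-modify-write atomically per key.
    static Map<String, Integer> countWords(String[] words, int threads) throws InterruptedException {
        ConcurrentHashMap<String, Integer> counts = new ConcurrentHashMap<>();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (String w : words) {
                    counts.merge(w, 1, Integer::sum);
                }
            });
            workers[i].start();
        }
        for (Thread t : workers) {
            t.join();
        }
        return counts;
    }

    // Returns { elements seen by the loop, final list size }.
    static int[] iterateWhileAdding() {
        List<String> listeners = new CopyOnWriteArrayList<>(new String[] { "a", "b" });
        int seen = 0;
        for (String s : listeners) {
            // The iterator works on a snapshot, so this add is not visible to the loop.
            // The same code on an ArrayList would fail with ConcurrentModificationException.
            listeners.add(s + "-copy");
            seen++;
        }
        return new int[] { seen, listeners.size() };
    }
}
```

Using `merge()` rather than a separate `get()` followed by `put()` matters here: the two-step version is a check-then-act sequence and can lose updates even on a concurrent map.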