Apple Vision Pro Sensors: What They Do & Where They Are

Which sensors does the Apple Vision Pro carry, what does each one do, and where does it sit on the headset? It’s a question many are asking as Apple’s latest foray into mixed reality promises a new level of immersion and interaction.

The Apple Vision Pro relies on a complex array of sensors to deliver its experience. This article looks at the role each kind of sensor plays and why its placement on the headset matters.

From the cameras that capture your world and track your hands and eyes to the inertial sensors that follow the motion of your head, each sensor plays a part in creating a seamless and intuitive user experience. We’ll explore how these sensors work together to change the way you interact with digital content and the physical world around you.

Future Developments in Sensor Technology

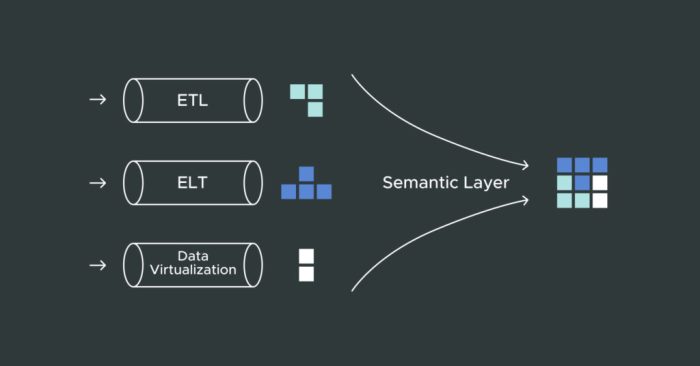

The world of augmented and virtual reality (AR/VR) is constantly evolving, and one of the key drivers of this evolution is the development of increasingly sophisticated sensor technology. As these sensors become more advanced, they promise to deliver even more immersive and interactive experiences, blurring the lines between the digital and physical worlds.

Advancements in Sensor Technology

Sensor technology is rapidly advancing, paving the way for a new generation of AR/VR headsets with enhanced capabilities. These advancements will significantly impact user experiences, opening up new possibilities for applications in various industries.

- Eye Tracking: Current eye-tracking technology in AR/VR headsets primarily focuses on gaze detection and interaction. However, future advancements will incorporate more detailed eye movement analysis, enabling the devices to understand user intent and emotions. This will lead to more intuitive and personalized experiences, allowing users to control the virtual environment with their gaze alone.

Imagine controlling menus, selecting objects, or navigating virtual spaces simply by looking at them. This technology can also be used to analyze user behavior and provide insights into their preferences, further enhancing the user experience.

- Depth Sensing: Depth sensing is crucial for creating realistic and immersive AR experiences, because it lets the headset measure the distance between the user and the objects around them. Future advancements in depth sensing will involve more sophisticated sensors, such as improved LiDAR and other Time-of-Flight (ToF) sensors, leading to more accurate and detailed 3D mapping of the environment.

This will allow for more precise object placement and interaction in AR applications, making the virtual objects appear seamlessly integrated with the real world.

- Biometric Sensors: Biometric sensors will play a crucial role in enhancing the user experience and personalizing AR/VR interactions. These sensors can track various physiological parameters like heart rate, skin temperature, and even brain activity, providing real-time insights into the user’s emotional state.

This information can be used to adjust the virtual environment accordingly, creating more immersive and engaging experiences. For example, a VR game could adjust the difficulty level based on the user’s stress level, or an AR training simulation could provide personalized feedback based on their emotional response to different scenarios.
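The stress-based difficulty idea above can be sketched in a few lines of Python. This is only an illustration under loud assumptions: the `DifficultyController` class, the heart-rate-to-stress mapping, and every threshold are hypothetical, and nothing here corresponds to a real headset or Apple API.

```python
from dataclasses import dataclass


@dataclass
class DifficultyController:
    """Nudges difficulty down when the player seems stressed, up when calm.

    All values are illustrative assumptions, not measured or vendor data.
    """
    resting_hr: float = 60.0   # assumed resting heart rate (bpm)
    max_hr: float = 180.0      # assumed maximum heart rate (bpm)
    low: float = 0.3           # below this stress level: assumed under-challenged
    high: float = 0.7          # above this stress level: assumed overloaded
    step: float = 0.1          # how far difficulty moves per update
    difficulty: float = 0.5    # 0.0 (easiest) to 1.0 (hardest)

    def stress(self, heart_rate: float) -> float:
        """Map heart rate to a crude stress proxy clamped to the range 0..1."""
        ratio = (heart_rate - self.resting_hr) / (self.max_hr - self.resting_hr)
        return min(1.0, max(0.0, ratio))

    def update(self, heart_rate: float) -> float:
        """Adjust and return the difficulty for the latest heart-rate reading."""
        s = self.stress(heart_rate)
        if s > self.high:
            self.difficulty -= self.step
        elif s < self.low:
            self.difficulty += self.step
        # Keep difficulty inside its valid range.
        self.difficulty = min(1.0, max(0.0, self.difficulty))
        return self.difficulty


if __name__ == "__main__":
    controller = DifficultyController()
    for bpm in (170, 65, 110):
        print(bpm, round(controller.update(bpm), 2))
```

A real system would need a far better stress estimate than raw heart rate, which also rises with simple physical movement, so treat the mapping as a placeholder.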

Improved User Experiences

The advancements in sensor technology will directly translate into improved user experiences in various ways.

- Enhanced Immersion: By providing more accurate and detailed information about the user’s surroundings and their physical state, these sensors will contribute to a more immersive and realistic virtual environment. This will be particularly important for applications in gaming, training, and education, where a sense of presence is crucial for engagement and learning.

- Increased Interactivity: More sophisticated sensors will allow for more intuitive and natural interactions with the virtual world. Users will be able to control the environment with their gaze, gestures, and even their emotions, making the experience feel more natural and less reliant on clunky controllers.

This will open up new possibilities for interaction design, allowing for more engaging and immersive experiences.

- Personalized Experiences: By collecting and analyzing user data, these sensors can personalize the virtual experience to each individual’s preferences and needs. This could include adjusting the virtual environment, providing tailored content, and offering personalized feedback, leading to a more engaging and relevant experience for each user.
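Gaze-only control, mentioned above, is often implemented as dwell-based selection: a target is selected once the user has looked at it continuously for some minimum time. The sketch below shows that general technique, not the Vision Pro’s actual input method. The sample format, the `dwell_selections` function, and the 0.8 second threshold are assumptions made for illustration.

```python
from typing import Iterable, List, Optional, Tuple

# Each sample is (timestamp in seconds, id of the target under the gaze).
# The target is None when the gaze is not resting on anything selectable.
GazeSample = Tuple[float, Optional[str]]


def dwell_selections(samples: Iterable[GazeSample], dwell_s: float = 0.8) -> List[str]:
    """Return targets gazed at continuously for at least dwell_s seconds.

    A target fires at most once per uninterrupted look; looking away and
    back again starts a new dwell timer.
    """
    selected: List[str] = []
    current: Optional[str] = None  # target currently under the gaze
    start = 0.0                    # time the gaze first landed on `current`
    fired = False                  # whether `current` was already selected
    for t, target in samples:
        if target != current:
            # Gaze moved to a different target (or to nothing): restart timer.
            current, start, fired = target, t, False
        elif target is not None and not fired and t - start >= dwell_s:
            selected.append(target)
            fired = True
    return selected


if __name__ == "__main__":
    stream = [
        (0.0, "menu"), (0.3, "menu"),            # glance, too short to select
        (0.5, "close"), (0.9, "close"), (1.4, "close"), (1.8, "close"),
        (2.0, None), (3.5, None),                # looking at empty space
    ]
    print(dwell_selections(stream))
```

The dwell threshold is the main design trade-off: too short and every glance triggers a selection, too long and the interface feels sluggish.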

Challenges and Ethical Considerations

While the potential of advanced sensor technology in AR/VR is vast, there are also significant challenges and ethical considerations to address.

- Privacy Concerns: The collection of user data, particularly biometric information, raises concerns about privacy. It’s crucial to ensure that data is collected and used ethically and transparently, with appropriate safeguards in place to protect user privacy. This will involve clear communication about data collection practices, user consent mechanisms, and robust data security measures.

- Security Risks: The increased reliance on sensors could create new security vulnerabilities. Hackers could potentially exploit these sensors to gain unauthorized access to user data or even manipulate the virtual environment. Secure development practices, robust encryption, and continuous monitoring will be crucial to mitigate these risks.

- Social Implications: The blurring of lines between the digital and physical worlds raises questions about the potential social implications of AR/VR technology. It’s important to consider how these technologies could impact social interaction, human relationships, and even our perception of reality. This will require careful consideration of the potential impact on society and the development of ethical guidelines for the use of these technologies.

The Apple Vision Pro is packed with sensors. Outward-facing cameras capture your surroundings for passthrough and map the room, inward-facing cameras track your eye movements, and depth sensors help track your hand movements. Microphones pick up voice commands, and inertial sensors handle motion tracking.

These sensors are integrated around the headset, and their placement is crucial for optimal performance: it is what lets the Vision Pro track your movements accurately and respond to your gaze with precise control. Spatial audio adds to the feeling that you’re truly in the virtual world.

It’s the combination of all these sensors that makes the headset so special. I’m still exploring the world of augmented reality, but I can’t help thinking about how this technology could enhance our everyday lives, and I can’t wait to see what developers come up with to take advantage of it.