Apache Spark vs Hadoop: Which Big Data Tool Is Right for You?

Apache Spark vs Hadoop – the age-old debate continues! Both are titans in the world of big data processing, but each has its own strengths and weaknesses. Understanding their core functionalities and comparing their performance can help you choose the right tool for your specific needs.

Whether you’re a seasoned data scientist or just starting your big data journey, this guide will shed light on the key differences and help you navigate the world of Spark and Hadoop.

At their heart, both Spark and Hadoop are designed to handle massive datasets, but they achieve this goal in different ways. Spark, known for its lightning-fast speed, utilizes in-memory processing to execute tasks much faster than Hadoop’s disk-based approach. This speed advantage makes Spark ideal for real-time applications and interactive data analysis.

However, Hadoop, while slower, excels in its ability to handle extremely large datasets and its robust distributed storage capabilities.

Introduction

Apache Spark and Hadoop are two of the most popular frameworks for processing large datasets. They have revolutionized the way we handle big data, enabling organizations to extract valuable insights from massive amounts of information. While both technologies play crucial roles in big data processing, they have distinct strengths and weaknesses. This blog post will delve into the fundamental differences between Apache Spark and Hadoop, highlighting their unique features and use cases.

Key Differences Between Apache Spark and Hadoop

Apache Spark and Hadoop differ significantly in their architecture, processing capabilities, and overall performance. Understanding these differences is essential for choosing the right technology for your specific needs.

- Data Processing Model: Hadoop uses the MapReduce paradigm, where data is processed in batches and intermediate results are written to disk between stages. Spark, on the other hand, supports both batch and near-real-time stream processing, offering more flexibility. Spark’s in-memory processing capabilities allow for faster execution of queries and tasks.

- Programming Languages: Hadoop MapReduce jobs are written primarily in Java, while Spark offers support for multiple languages, including Java, Scala, Python, and R. This flexibility makes Spark more accessible to a wider range of developers.

- Performance: Spark is usually much faster than Hadoop MapReduce, especially for iterative and interactive workloads whose working data fits in memory. Its in-memory processing capabilities and optimized execution engine avoid repeated disk reads and writes, making it suitable for low-latency applications.

- Scalability: Both Hadoop and Spark are designed for scalability. Hadoop distributes data across multiple nodes, while Spark uses a distributed architecture for parallel processing, allowing for efficient handling of large datasets.
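To make the MapReduce paradigm mentioned above more concrete, here is a minimal single-process sketch of its three phases (map, shuffle, reduce) applied to a word count. It is plain Python, not the Hadoop API; the function names `map_phase`, `shuffle_phase`, `reduce_phase`, and `word_count` are made up for illustration, and a real job would run these phases in parallel across many nodes.

```python
from collections import defaultdict


def map_phase(records):
    """Map: emit a (word, 1) pair for every word in every input line."""
    for line in records:
        for word in line.lower().split():
            yield word, 1


def shuffle_phase(pairs):
    """Shuffle: group all values that share the same key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Reduce: collapse each key's list of values into one result."""
    return {key: sum(values) for key, values in grouped.items()}


def word_count(records):
    """Run the three phases in sequence over a batch of input lines."""
    return reduce_phase(shuffle_phase(map_phase(records)))


if __name__ == "__main__":
    print(word_count(["spark and hadoop", "hadoop stores data"]))
```

In a real Hadoop job, the output of each phase would typically be written to disk before the next phase reads it, which is the main source of the latency difference discussed in this post.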

Apache Spark

Apache Spark is a powerful open-source distributed processing framework that has gained significant popularity for its ability to handle large-scale data processing tasks with speed and efficiency. It is known for its in-memory processing capabilities, which allow it to perform computations much faster than traditional Hadoop MapReduce.

Choosing between Apache Spark and Hadoop is a common dilemma for data engineers, since each has its strengths and weaknesses. While Hadoop excels in handling massive datasets, Spark’s in-memory processing makes it ideal for real-time analytics. The choice ultimately depends on the specific use case and the required processing speed.

Architecture and Core Components

Spark’s architecture is designed for distributed computing, enabling it to process massive datasets across a cluster of machines. It consists of several key components:

- Spark Driver: This is the main program that runs the Spark application and coordinates the tasks across the cluster.

- Spark Executor: These are worker processes that run on the nodes of the cluster, executing tasks assigned by the driver.

- Cluster Manager: This component manages the resources of the cluster, such as nodes and executors, and allocates them to Spark applications. Popular cluster managers include YARN, Kubernetes, Mesos, and Spark’s built-in standalone manager.

- Spark Core: The foundation of Spark, providing the core functionalities for distributed task scheduling, memory management, and fault tolerance.

- Spark SQL: A module that allows users to query structured data using SQL, providing a familiar interface for data analysts and data scientists.

- Spark Streaming: A module for real-time data processing, enabling applications to process data streams as they arrive.

- Spark MLlib: A library for machine learning algorithms, providing tools for classification, regression, clustering, and more.

- GraphX: A library for graph processing, enabling applications to analyze and manipulate large-scale graphs.
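The driver and executor roles described above can be pictured with a small toy model. This is only a sketch in plain Python: a thread pool stands in for executors, and the names `split_into_partitions`, `run_task`, and `driver_sum_of_squares` are hypothetical helpers, not part of Spark.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_partitions(data, num_partitions):
    """Driver side: slice the dataset into roughly equal partitions."""
    size = max(1, -(-len(data) // num_partitions))  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def run_task(partition):
    """Executor side: one task computes a partial result for one partition."""
    return sum(x * x for x in partition)


def driver_sum_of_squares(data, num_executors=3):
    """Driver: plan the tasks, hand them to workers, combine the results."""
    partitions = split_into_partitions(data, num_executors)
    with ThreadPoolExecutor(max_workers=num_executors) as pool:
        partials = list(pool.map(run_task, partitions))
    return sum(partials)


if __name__ == "__main__":
    print(driver_sum_of_squares(list(range(10))))
```

In an actual cluster the executors are separate processes on different machines, and the cluster manager decides where they run; the thread pool here only mimics the split, distribute, and combine pattern.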

Benefits of Apache Spark

Apache Spark offers several advantages over traditional Hadoop MapReduce, making it a compelling choice for data processing:

- Faster Processing: Spark’s in-memory processing capabilities significantly reduce the time required to execute data processing tasks, compared to disk-based processing in Hadoop MapReduce.

- General-Purpose Engine: Spark is not limited to batch processing like Hadoop MapReduce. It supports a wide range of data processing workloads, including batch, streaming, interactive queries, and machine learning.

- Unified Platform: Spark provides a unified platform for various data processing tasks, reducing the need for multiple tools and technologies.

- Ease of Use: Spark offers a high-level API, making it easier to write and debug data processing applications.

- Scalability: Spark is designed to scale horizontally, allowing it to handle increasing data volumes and processing demands by adding more nodes to the cluster.

Real-World Use Cases

Apache Spark is widely used in various industries and applications, demonstrating its versatility and power. Here are some real-world use cases:

- E-commerce: Retailers use Spark to analyze customer behavior, recommend products, and personalize shopping experiences. For example, a large online retailer can use Spark to analyze customer purchase history and recommend relevant products based on past purchases and browsing behavior.

- Financial Services: Banks and financial institutions use Spark for fraud detection, risk analysis, and real-time trading. For example, banks can use Spark to analyze transaction data and identify unusual patterns that might indicate fraudulent activity.

- Healthcare: Hospitals and healthcare providers use Spark to analyze patient data, identify trends, and improve patient care. For example, hospitals can use Spark to analyze patient records and identify potential risks or areas for improvement in treatment plans.

- Social Media: Social media companies use Spark to analyze user data, track trends, and personalize content. For example, a social network can use Spark to analyze user interactions, recommend connections, and target advertisements.

- Scientific Research: Researchers use Spark to analyze large datasets in fields such as genomics, climate science, and astronomy. For example, researchers in genomics can use Spark to analyze DNA sequences and identify genetic variations associated with diseases.

Hadoop

Hadoop is an open-source framework that provides a distributed processing platform for large datasets. It was created by Doug Cutting and Mike Cafarella, grew out of the Apache Nutch project, and was developed heavily at Yahoo! in its early years. It has since become a popular choice for handling big data challenges across various industries. Hadoop’s architecture is designed to handle massive amounts of data efficiently, making it a powerful tool for organizations dealing with data-intensive workloads.

Architecture and Core Components

Hadoop’s architecture is based on the concept of distributed processing, where data is split into smaller chunks and processed concurrently across multiple nodes in a cluster. Alongside the MapReduce processing engine, the framework has two core infrastructure components: Hadoop Distributed File System (HDFS) and Hadoop YARN (Yet Another Resource Negotiator).

- Hadoop Distributed File System (HDFS): HDFS is a distributed file system designed to store large datasets across multiple nodes. It provides a robust and reliable way to store data, ensuring high availability and fault tolerance. Data is stored in blocks, and each block is replicated across multiple nodes for redundancy. This ensures that data remains accessible even if some nodes fail.

- Hadoop YARN (Yet Another Resource Negotiator): YARN is responsible for managing resources within the Hadoop cluster. It acts as a resource manager, allocating resources to different applications running on the cluster. YARN provides a framework for scheduling and running applications, ensuring efficient utilization of cluster resources.
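The block-and-replica idea behind HDFS can be sketched in a few lines. The example below is a toy model, not HDFS code: it assumes the commonly used defaults of 128 MB blocks and a replication factor of 3, and it places replicas round-robin, which is a deliberate simplification of HDFS’s real rack-aware placement policy. The function and node names are hypothetical.

```python
def split_into_blocks(file_size_mb, block_size_mb=128):
    """Return the size in MB of each block needed to store the file."""
    full, rest = divmod(file_size_mb, block_size_mb)
    return [block_size_mb] * full + ([rest] if rest else [])


def place_replicas(num_blocks, nodes, replication=3):
    """Assign each block to `replication` distinct nodes, round-robin.

    Simplified placement for illustration; real HDFS is rack-aware.
    """
    if replication > len(nodes):
        raise ValueError("replication factor exceeds number of nodes")
    return {
        block: [nodes[(block + r) % len(nodes)] for r in range(replication)]
        for block in range(num_blocks)
    }


def readable_blocks(placement, failed_nodes):
    """Blocks that still have at least one replica on a healthy node."""
    return [
        block
        for block, replicas in placement.items()
        if any(node not in failed_nodes for node in replicas)
    ]


if __name__ == "__main__":
    blocks = split_into_blocks(300)
    placement = place_replicas(len(blocks), ["n1", "n2", "n3", "n4"])
    print(blocks, placement, readable_blocks(placement, {"n1", "n2"}))
```

With three replicas per block, the file in this sketch stays fully readable after any two nodes fail, which is the property the replication scheme is meant to provide.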

Benefits of Using Hadoop

Hadoop offers several benefits for data processing, making it a popular choice for organizations dealing with large datasets. Some key benefits include:

- Scalability: Hadoop is highly scalable, allowing it to handle massive datasets by distributing processing across multiple nodes. As data volumes increase, Hadoop can easily scale out by adding more nodes to the cluster.

- Cost-Effectiveness: Hadoop leverages commodity hardware, making it a cost-effective solution for data processing. It can run on clusters of inexpensive servers, reducing infrastructure costs compared to traditional data processing solutions.

- Fault Tolerance: Hadoop is designed to be fault-tolerant, ensuring data availability even if some nodes fail. Data is replicated across multiple nodes, and YARN can seamlessly re-allocate tasks to other nodes in case of failures.

- Flexibility: Hadoop is a flexible framework that supports various data processing tasks. Its native strength is batch processing, while tools in the wider Hadoop ecosystem add near-real-time processing and data analytics. It allows for customization and integration with other tools and technologies.

Real-World Use Cases

Hadoop is widely used in various industries for a range of data processing tasks. Some real-world use cases include:

- E-commerce: Hadoop is used by e-commerce companies to analyze customer data, track website traffic, and personalize shopping experiences. For example, Amazon leverages Hadoop to process vast amounts of customer data, enabling them to provide personalized recommendations and optimize product listings.

- Financial Services: Financial institutions use Hadoop for fraud detection, risk assessment, and customer profiling. For instance, banks use Hadoop to analyze transaction data and identify suspicious activities, helping them prevent fraud and protect customer accounts.

- Healthcare: Hadoop is used in healthcare for medical imaging analysis, genomics research, and patient data management. For example, hospitals use Hadoop to store and analyze medical images, enabling faster diagnosis and treatment planning.

- Social Media: Social media companies use Hadoop to analyze user interactions, track trends, and personalize content. For example, Facebook uses Hadoop to process massive amounts of user data, enabling them to deliver relevant content and ads to users.

Spark vs. Hadoop

Spark and Hadoop are two popular technologies for big data processing. Both offer powerful tools for analyzing massive datasets, but they differ significantly in their architecture, performance, and ease of use.

Performance Characteristics

The performance of Spark and Hadoop varies considerably due to their fundamental design differences. Spark utilizes in-memory processing, allowing it to perform operations much faster than Hadoop, which relies on disk-based processing. This advantage becomes more pronounced when dealing with iterative algorithms or real-time applications, where data is constantly being processed.

- Spark: Spark’s in-memory processing enables it to execute tasks much faster than Hadoop. Its use of a DAG (Directed Acyclic Graph) execution engine optimizes data flow and reduces redundant computations. This makes it ideal for applications requiring low latency, such as real-time analytics and machine learning.

- Hadoop: Hadoop’s disk-based processing can lead to slower execution times compared to Spark, especially when dealing with iterative computations or large datasets. However, Hadoop excels in batch processing scenarios where data is processed in large chunks over extended periods.
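The gap for iterative workloads comes down to how often the same input is re-read from storage. The sketch below is a toy illustration in plain Python, not a benchmark: `DiskDataset` is a hypothetical class that simply counts simulated disk reads, so the two styles can be compared by that count.

```python
class DiskDataset:
    """Toy dataset that counts how often it is read from 'disk'."""

    def __init__(self, values):
        self._values = list(values)
        self.disk_reads = 0

    def read(self):
        self.disk_reads += 1
        return list(self._values)


def iterate_from_disk(dataset, iterations):
    """Disk-based style: every pass reloads the input from storage."""
    total = 0
    for _ in range(iterations):
        total += sum(dataset.read())
    return total


def iterate_in_memory(dataset, iterations):
    """In-memory style: load once, then reuse the cached copy."""
    cached = dataset.read()
    total = 0
    for _ in range(iterations):
        total += sum(cached)
    return total


if __name__ == "__main__":
    on_disk, in_memory = DiskDataset([1, 2, 3]), DiskDataset([1, 2, 3])
    print(iterate_from_disk(on_disk, 5), on_disk.disk_reads)
    print(iterate_in_memory(in_memory, 5), in_memory.disk_reads)
```

Both functions return the same answer, but the first touches storage once per iteration and the second only once overall. The actual speedup on a cluster depends on data size, available memory, and the workload.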

Scalability and Fault Tolerance

Both Spark and Hadoop are designed to scale horizontally, meaning they can handle massive datasets by distributing processing across multiple nodes.

- Spark: Spark uses a coordinator-worker architecture, with a driver coordinating the work of executors running on multiple worker nodes. Its primary fault-tolerance mechanism is lineage: Spark records the transformations used to build each dataset, so if a node fails, the lost partitions are recomputed from their source and the affected tasks are rerun on other nodes.

- Hadoop: Hadoop also employs a coordinator-worker architecture, with a NameNode managing the file system metadata and DataNodes storing the data. It uses the concept of “block replication” to ensure data redundancy and recover from node failures.
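Spark’s usual recovery mechanism is to recompute lost data from recorded lineage rather than to keep extra copies of it. The following is a minimal sketch of that idea in plain Python; `LineageDataset` and its methods are hypothetical names for illustration and do not mirror Spark’s actual classes.

```python
class LineageDataset:
    """Toy partitioned dataset that remembers how it was derived."""

    def __init__(self, source_partitions, transformations=()):
        self.source_partitions = source_partitions
        self.transformations = tuple(transformations)
        self.computed = {}  # partition index -> materialized values

    def map(self, fn):
        """Record a transformation lazily instead of running it now."""
        return LineageDataset(
            self.source_partitions, self.transformations + (fn,)
        )

    def compute_partition(self, index):
        """Replay the recorded transformations on one source partition."""
        values = self.source_partitions[index]
        for fn in self.transformations:
            values = [fn(v) for v in values]
        self.computed[index] = values
        return values

    def lose_partition(self, index):
        """Simulate a node failure that drops a materialized partition."""
        self.computed.pop(index, None)

    def get_partition(self, index):
        """Return a partition, recomputing it from lineage if missing."""
        if index not in self.computed:
            return self.compute_partition(index)
        return self.computed[index]


if __name__ == "__main__":
    ds = LineageDataset([[1, 2], [3, 4]]).map(lambda x: x * 10).map(lambda x: x + 1)
    print(ds.get_partition(1))
    ds.lose_partition(1)
    print(ds.get_partition(1))
```

Only the lost partition is rebuilt, and no second copy of the derived data had to be stored in advance. HDFS takes the opposite approach for stored files and keeps several replicas of every block.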

Ease of Use and Learning Curve

Spark generally offers a simpler and more intuitive programming model compared to Hadoop.

- Spark: Spark’s APIs are designed to be user-friendly and support multiple programming languages, including Python, Scala, Java, and R. Its unified API for data processing simplifies tasks and makes it easier to learn and use.

- Hadoop: Hadoop requires a deeper understanding of its underlying concepts, including the Hadoop Distributed File System (HDFS), MapReduce framework, and YARN resource manager. Its programming model can be more complex and requires familiarity with Java or other supported languages.

Key Differences

| Feature | Spark | Hadoop |
|---|---|---|
| Processing Model | In-memory processing | Disk-based processing |
| Performance | Faster, especially for iterative computations and real-time applications | Slower than Spark, but efficient for batch processing |
| Scalability | Scales horizontally by adding worker nodes coordinated by a driver | Scales horizontally by adding DataNodes managed by a NameNode |
| Fault Tolerance | Lineage-based recomputation of lost partitions and task restart on node failure | Block replication and data redundancy |
| Ease of Use | User-friendly APIs, multiple programming languages supported | More complex programming model, requires deeper understanding of underlying concepts |
| Applications | Real-time analytics, machine learning, graph processing | Batch processing, data warehousing, data mining |

Choosing the Right Technology

The decision to choose between Apache Spark and Hadoop hinges on several factors, each contributing to the effectiveness of your data processing solution. Understanding these factors is crucial for selecting the best technology for your specific needs.

Factors to Consider

When choosing between Spark and Hadoop, several key factors come into play:

- Data Volume and Velocity: Spark excels in processing large volumes of data at high speeds, making it ideal for real-time applications and streaming data. Hadoop, while also capable of handling large datasets, is better suited for batch processing and offline analysis.

- Processing Requirements: If your data processing needs involve complex transformations, machine learning algorithms, or real-time analysis, Spark’s in-memory processing and optimized libraries provide a significant advantage. For simpler data processing tasks, Hadoop’s batch processing approach might suffice.

- Resource Availability: Spark requires more memory than Hadoop, making it crucial to have sufficient resources available. If your infrastructure is constrained, Hadoop might be a better choice.

- Skillset and Expertise: Although Spark’s APIs are generally easier to learn than raw MapReduce, tuning and operating Spark applications (memory sizing, partitioning, caching) calls for its own expertise. If your team’s experience is mainly with Hadoop, transitioning to Spark might require additional training.

- Ecosystem and Integrations: Both Spark and Hadoop have robust ecosystems with various tools and libraries. However, Spark’s integration with machine learning libraries and other data processing tools might be more extensive in some cases.

Guidelines for Determining the Best Fit

Based on your specific data processing needs, you can apply these guidelines to select the best technology:

- Real-time Data Processing: Spark is highly recommended for real-time applications, such as fraud detection, recommendation systems, and stream processing, where low latency and high throughput are critical.

- Batch Processing: For large-scale batch processing, Hadoop remains a reliable choice, particularly when dealing with massive datasets and complex workflows.

- Machine Learning and Analytics: Spark’s in-memory processing and integration with machine learning libraries make it an excellent choice for advanced analytics and model building.

- Cost Optimization: Hadoop might be a more cost-effective option if you have limited resources and your data processing needs are less demanding.

- Skillset and Expertise: Consider the skillset of your team and the availability of resources for training and development when making your decision.

Scenarios Where Spark is More Suitable

Spark excels in scenarios involving:

- Real-time data processing: Applications like fraud detection, recommendation systems, and streaming data analysis require Spark’s high-speed processing capabilities.

- Interactive data analysis: Spark’s in-memory processing allows for interactive data exploration and analysis, enabling faster insights.

- Machine learning and AI: Spark’s integration with machine learning libraries and its ability to handle large datasets make it suitable for advanced analytics and model building.

Scenarios Where Hadoop is More Suitable

Hadoop is a better choice for:

- Batch processing of large datasets: Hadoop is well-suited for processing massive datasets offline, where latency is not a major concern.

- Cost-effective storage and processing: Hadoop’s distributed file system (HDFS) offers cost-effective storage and processing for large datasets.

- Existing infrastructure: If your organization already has a Hadoop infrastructure in place, transitioning to Spark might require significant changes and investments.
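The guidelines and scenarios above can be condensed into a rough rule of thumb. The helper below is a hypothetical sketch, not an authoritative selection method: the function name, the four yes/no inputs, and the scoring weights are all assumptions made for illustration, and a real decision would weigh many more factors.

```python
def recommend_framework(real_time=False, iterative_or_ml=False,
                        memory_constrained=False, existing_hadoop=False):
    """Return (recommendation, reasons) from a few yes/no workload traits.

    The weights are illustrative assumptions, not measured values.
    """
    spark_score, hadoop_score, reasons = 0, 0, []
    if real_time:
        spark_score += 2
        reasons.append("low-latency or streaming work favors Spark")
    if iterative_or_ml:
        spark_score += 1
        reasons.append("iterative or ML workloads benefit from in-memory reuse")
    if memory_constrained:
        hadoop_score += 1
        reasons.append("limited memory favors disk-based batch processing")
    if existing_hadoop:
        hadoop_score += 1
        reasons.append("an existing Hadoop cluster lowers migration cost")

    if spark_score > hadoop_score:
        choice = "Spark"
    elif hadoop_score > spark_score:
        choice = "Hadoop MapReduce"
    else:
        choice = "Either (consider running Spark on an existing Hadoop cluster)"
    return choice, reasons


if __name__ == "__main__":
    print(recommend_framework(real_time=True, iterative_or_ml=True))
    print(recommend_framework(memory_constrained=True, existing_hadoop=True))
```

A tie deliberately returns a combined option, because the two are not mutually exclusive: Spark is commonly deployed on YARN and reads data from HDFS.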

Future of Spark and Hadoop

The future of Apache Spark and Hadoop is intertwined with the ever-evolving landscape of big data technologies. Both platforms are continuously evolving, adapting to emerging trends, and embracing new advancements to address the growing demands of data processing and analytics.

Impact of Emerging Technologies

The rise of emerging technologies like artificial intelligence (AI), machine learning (ML), and cloud computing is significantly influencing the future of Spark and Hadoop. These technologies are driving the need for faster, more scalable, and more efficient data processing capabilities.

- AI and ML: AI and ML algorithms require massive amounts of data for training and inference. Both Spark and Hadoop are being enhanced to support these workloads more effectively. Spark, with its in-memory processing capabilities, is well-suited for iterative ML algorithms, while Hadoop’s distributed storage and processing capabilities are crucial for managing large datasets used in AI training.

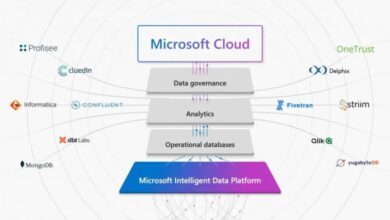

- Cloud Computing: Cloud platforms like AWS, Azure, and GCP offer scalable and cost-effective solutions for deploying and managing Spark and Hadoop clusters. The integration of these platforms with Spark and Hadoop is enabling organizations to leverage the benefits of cloud computing, such as on-demand resources, pay-as-you-go pricing, and enhanced security.

Future Directions of Spark and Hadoop

Both Spark and Hadoop are continuously evolving to meet the growing demands of big data analytics.

- Spark:

  - Spark Streaming: Spark’s streaming support, now centered on the newer Structured Streaming API, is being enhanced to handle real-time data processing with even greater efficiency and scalability, making it suitable for applications like fraud detection, anomaly detection, and real-time analytics.

  - Spark SQL: Spark SQL is becoming more powerful, supporting a wider range of data sources and providing advanced query optimization capabilities for complex data analysis tasks.

  - GraphX: GraphX is used for graph processing tasks, enabling efficient analysis of complex relationships within datasets.

- Hadoop:

  - Hadoop YARN: Hadoop YARN (Yet Another Resource Negotiator) is being enhanced to provide better resource management and scheduling for diverse workloads, including Spark applications.

  - Hadoop 3.0: Hadoop 3.0 introduced significant improvements in performance, scalability, and security, making it more robust and efficient for handling massive datasets.

  - Hadoop Ecosystem: The Hadoop ecosystem is expanding with new tools and libraries that enhance its capabilities for data ingestion, processing, and analysis.