Data Governance for AI Systems: Ensuring Responsible Use

Data governance for AI systems is no longer a niche concern but a fundamental requirement for responsible and ethical AI development. As AI models become increasingly sophisticated and integrated into our lives, the data that fuels them must be carefully managed to prevent bias, ensure fairness, and safeguard privacy.

This critical area intersects with ethical considerations, legal frameworks, and technical practices, demanding a comprehensive approach that encompasses data quality, security, and responsible use. From mitigating bias in training data to implementing robust security measures, data governance plays a crucial role in shaping the future of AI.

The Importance of Data Governance in AI Systems

The rise of artificial intelligence (AI) has brought about transformative changes across industries, revolutionizing how we work, live, and interact with the world. However, as AI systems become increasingly sophisticated and pervasive, the need for robust data governance practices becomes paramount.

Data governance in AI ensures that the data used to train and operate these systems is collected and used ethically, lawfully, and responsibly, guarding against potential risks and supporting responsible AI development and deployment.

Ethical Considerations in AI Data Governance

Data governance plays a critical role in addressing ethical considerations in AI development and deployment. AI systems are often trained on massive datasets, which can inadvertently reflect and amplify existing societal biases present in the data. These biases can lead to discriminatory outcomes, perpetuating inequalities and harming individuals or groups.

Data governance frameworks aim to reduce these risks by establishing mechanisms for identifying, mitigating, and monitoring biases in AI datasets.

- Fairness and Non-discrimination: Data governance frameworks emphasize the importance of fairness and non-discrimination in AI systems. This involves ensuring that AI models are trained on data that represents diverse populations and does not perpetuate existing societal biases. For instance, in the context of loan applications, AI systems should not be trained on data that disproportionately favors individuals from certain demographics, leading to unfair loan approvals or denials.

- Transparency and Explainability: Transparency and explainability are crucial for understanding how AI systems arrive at their decisions. Data governance practices encourage the development of AI models that are transparent and interpretable, allowing stakeholders to understand the rationale behind AI decisions. This helps to build trust in AI systems and address concerns about potential biases or unfair outcomes.

- Privacy and Data Security: Data governance frameworks prioritize the protection of individuals’ privacy and data security. This involves implementing measures to ensure that personal data used to train and operate AI systems is collected, stored, and processed ethically and securely. For example, anonymization techniques can be employed to protect sensitive information while still allowing for valuable insights to be derived from the data.

Risks of Poor Data Governance in AI

The absence of robust data governance frameworks can lead to significant risks and consequences in AI development and deployment. Poor data governance can result in biased AI systems, compromised data security, and ethical violations, ultimately undermining trust in AI and hindering its widespread adoption.

- Biased AI Systems: AI systems trained on biased data can perpetuate existing inequalities and lead to discriminatory outcomes. For instance, an AI system used for hiring decisions trained on data that reflects historical biases in the workforce might disproportionately favor candidates from certain demographics, perpetuating inequalities in the hiring process.

- Data Security Breaches: Poor data governance can increase the risk of data security breaches, leading to the unauthorized access, use, or disclosure of sensitive information. This can have serious consequences for individuals and organizations, potentially resulting in financial losses, reputational damage, and legal repercussions.

- Ethical Violations: AI systems developed and deployed without adequate data governance can violate ethical principles, leading to unintended consequences and harming individuals or groups. For example, an AI system used for facial recognition might be trained on data that is not representative of all ethnicities, leading to inaccurate or biased identification.

Real-World Examples of Data Governance Issues in AI

Several real-world examples illustrate the importance of data governance in AI systems.

- Facial Recognition Bias: Studies have shown that facial recognition systems trained on datasets that are not representative of diverse populations can exhibit significant bias, leading to inaccurate or discriminatory identification. For example, a 2019 study by the National Institute of Standards and Technology (NIST) found demographic differences in accuracy in most of the facial recognition algorithms it evaluated, with higher false positive rates for some groups, including African American and Asian individuals, than for white individuals.

- Loan Application Bias: AI systems used to assess loan applications have been criticized for perpetuating existing biases in the financial system. For instance, an AI system trained on data that reflects historical lending practices might disproportionately deny loans to individuals from certain demographics, even if they are financially qualified. This can exacerbate existing inequalities and limit access to credit for marginalized communities.

- Healthcare Data Privacy: AI systems are increasingly being used in healthcare to diagnose diseases, predict patient outcomes, and personalize treatment plans. However, the use of sensitive healthcare data in AI systems raises concerns about patient privacy and data security. Data governance frameworks are crucial for ensuring that healthcare data is collected, stored, and used ethically and securely, protecting patient privacy while enabling the development of innovative AI applications in healthcare.

Key Principles of Data Governance for AI Systems

Data governance in AI is not just about managing data, but about ensuring that data is used ethically, responsibly, and in alignment with broader organizational goals. Key principles underpin this process, ensuring that AI systems are developed and deployed in a way that benefits society.

Transparency and Explainability

Transparency and explainability are crucial for building trust in AI systems. It’s important to understand how AI systems make decisions, especially when those decisions have significant impacts on individuals or society. This involves documenting the data used, the algorithms employed, and the decision-making process.

- Data Lineage Tracking: Trace the origin and transformation of data throughout its lifecycle, from collection to analysis and model training. This helps teams understand how data influences AI outcomes and identify potential biases.

- Algorithm Transparency: Document the algorithms used in AI systems, making them understandable to stakeholders. This can involve using interpretable algorithms, providing clear explanations of complex models, or offering visualizations of model behavior.

- Decision Explainability: Explain how AI systems arrive at specific decisions. This can involve providing insights into the factors influencing the decision, highlighting the most important features, or showing the reasoning behind the output.
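The data lineage tracking described above can be illustrated with a minimal sketch: an append-only log in which every transformation step records a SHA-256 fingerprint of its input and output. The `LineageLog` class, the `fingerprint` helper, the example source name `loans_2023.csv`, and the choice to hash a JSON serialization of in-memory records are all assumptions made for illustration; production systems typically rely on dedicated lineage or metadata tooling.

```python
import hashlib
import json
from dataclasses import dataclass, field


def fingerprint(records):
    """Hash a canonical JSON serialization so a step's exact input/output can be identified later."""
    payload = json.dumps(records, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


@dataclass
class LineageLog:
    """Hypothetical append-only record of how a dataset was derived from its source."""
    source: str
    steps: list = field(default_factory=list)

    def apply(self, name, func, records):
        """Run one transformation and log its name, input/output hashes, and row counts."""
        output = func(records)
        self.steps.append({
            "step": name,
            "input_hash": fingerprint(records),
            "output_hash": fingerprint(output),
            "rows_in": len(records),
            "rows_out": len(output),
        })
        return output


raw = [
    {"id": 1, "income": 52000, "region": "north"},
    {"id": 2, "income": None, "region": "south"},
    {"id": 3, "income": 61000, "region": "south"},
]

log = LineageLog(source="loans_2023.csv")  # hypothetical source file
clean = log.apply("drop_missing_income",
                  lambda rs: [r for r in rs if r["income"] is not None], raw)
train = log.apply("drop_id_column",
                  lambda rs: [{k: v for k, v in r.items() if k != "id"} for r in rs], clean)

for step in log.steps:
    print(step["step"], step["rows_in"], "->", step["rows_out"])
# drop_missing_income 3 -> 2
# drop_id_column 2 -> 2
```

Because each step's input hash should equal the previous step's output hash, a reviewer can check that the training data was derived from the recorded source through exactly the logged steps, and the row counts show where records were dropped.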

Accountability and Responsibility

Accountability in AI systems refers to clearly identifying who is responsible for the data used, the algorithms developed, and the decisions made by AI systems. This principle helps ensure that ethical considerations are addressed and that appropriate actions are taken when AI systems malfunction or produce biased results.

By implementing robust data governance practices, organizations can build trust and confidence in their AI systems, while safeguarding the privacy of individuals.

- Data Ownership and Management: Clearly define data ownership and establish processes for data management, including data quality checks, access control, and data retention policies.

- Algorithm Development and Deployment: Establish clear roles and responsibilities for the development, testing, and deployment of AI algorithms. This includes defining who is accountable for the algorithms’ performance and for the potential consequences of their decisions.

- Impact Assessment and Monitoring: Regularly assess the impact of AI systems on individuals and society. This involves monitoring for biases, unintended consequences, and ethical concerns, and taking corrective actions as needed.
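Ownership, access control, and retention can be made explicit by attaching a machine-readable policy to each dataset. The sketch below is a simplified illustration; the `DatasetPolicy` record, the owner and role names, and the 365-day retention period are hypothetical values, not a standard schema.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class DatasetPolicy:
    """Hypothetical governance record: who owns a dataset, who may read it, how long it is kept."""
    name: str
    owner: str
    allowed_roles: frozenset
    retention_days: int


def can_access(policy, role):
    """Access control: only roles listed in the policy may read the dataset."""
    return role in policy.allowed_roles


def expired(policy, collected_on, today):
    """Retention: a record is due for deletion once it is older than the retention period."""
    return today - collected_on > timedelta(days=policy.retention_days)


policy = DatasetPolicy(
    name="credit_applications",
    owner="risk-data-team",  # hypothetical accountable owner
    allowed_roles=frozenset({"model_developer", "auditor"}),
    retention_days=365,
)

print(can_access(policy, "auditor"))    # True
print(can_access(policy, "marketing"))  # False
print(expired(policy, date(2023, 1, 1), date(2024, 6, 1)))  # True
```

Keeping the owner in the same record as the rules means every access decision and deletion can be traced back to a named, accountable party.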

Fairness and Non-discrimination

Fairness in AI systems is about ensuring that AI decisions are not biased against specific individuals or groups. This requires addressing potential biases in the data used to train AI models and ensuring that AI systems treat all individuals fairly.

- Data Bias Detection and Mitigation: Implement techniques to detect and mitigate biases in data used to train AI models. This involves identifying and addressing data imbalances, removing discriminatory features (and proxies for them), and using fairness-aware algorithms.

- Fairness Metrics and Evaluation: Develop and use metrics to evaluate the fairness of AI systems. This involves measuring the impact of AI decisions on different groups and ensuring that the system treats individuals equitably.

- Fairness Auditing and Monitoring: Regularly audit AI systems for fairness and monitor their performance over time. This helps identify potential biases and ensure that AI systems remain fair and unbiased.
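The fairness metrics mentioned above can be as simple as comparing selection rates across groups. This sketch computes two common group-fairness measures, the demographic parity difference and the disparate impact ratio, for binary predictions. The data and group labels are made up for illustration, and real audits usually combine several metrics (for example, comparisons of error rates) rather than relying on one.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive predictions (e.g. loan approvals) within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rates (0 means equal rates)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(predictions, groups):
    """Lowest group selection rate divided by the highest (1 means equal rates)."""
    rates = selection_rates(predictions, groups)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group receives positive predictions, so rates are equal
    return min(rates.values()) / highest


preds = [1, 1, 0, 1, 0, 1, 0, 0, 1, 0]
groups = ["a"] * 5 + ["b"] * 5

print(selection_rates(preds, groups))  # {'a': 0.6, 'b': 0.4}
print(demographic_parity_difference(preds, groups))
print(disparate_impact_ratio(preds, groups))
```

In US employment contexts, a disparate impact ratio below 0.8 is often flagged under the "four-fifths rule"; whether a particular threshold is appropriate depends on the domain and the applicable law.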

Privacy and Data Protection

Privacy in AI systems is about safeguarding personal information and ensuring that data is used ethically and responsibly. This involves adhering to data protection regulations and implementing strong data security measures.

- Data Minimization and Anonymization: Collect only the data necessary for AI training, and use techniques like anonymization to protect sensitive information.

- Data Security and Access Control: Implement strong security measures to protect data from unauthorized access, use, or disclosure. This includes encryption, access controls, and regular security audits.

- Data Subject Rights: Ensure that individuals can exercise control over their data, including the right to access, rectify, and delete their personal information.
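Data minimization and pseudonymization can be combined in a single preprocessing step. In this sketch, assuming a hypothetical model that needs only `customer_id`, `age`, and `income`, all other fields are dropped and the identifier is replaced with a keyed HMAC-SHA256 token. The field names and the hard-coded key are placeholders; a real key would come from a secrets manager.

```python
import hashlib
import hmac

# Fields the (hypothetical) model actually needs; everything else is dropped.
REQUIRED_FIELDS = {"customer_id", "age", "income"}


def minimize(record, required=REQUIRED_FIELDS):
    """Data minimization: keep only the fields required for the stated purpose."""
    return {k: v for k, v in record.items() if k in required}


def pseudonymize(record, key, id_field="customer_id"):
    """Replace the direct identifier with a keyed hash (HMAC-SHA256).

    The same ID always maps to the same token, so records can still be joined,
    but the token cannot be reversed without the secret key.
    """
    out = dict(record)
    token = hmac.new(key, str(record[id_field]).encode("utf-8"), hashlib.sha256)
    out[id_field] = token.hexdigest()
    return out


secret_key = b"replace-with-a-key-from-a-secrets-manager"  # placeholder only
raw = {"customer_id": 1042, "name": "Ada Lovelace", "email": "ada@example.com",
       "age": 36, "income": 58000}

safe = pseudonymize(minimize(raw), secret_key)
print(sorted(safe))  # ['age', 'customer_id', 'income']
```

Pseudonymization is weaker than anonymization: anyone holding the key can re-link tokens to identities, and pseudonymized data generally still counts as personal data under GDPR.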

Data Quality and Integrity in AI Systems

Data quality and integrity are fundamental pillars for the success of AI systems. Just as a building’s foundation determines its stability, the quality of data used to train and evaluate AI models directly influences their performance, reliability, and trustworthiness. "Garbage in, garbage out": this adage holds true for AI, underscoring the critical role of data quality in achieving desired outcomes.

Data Quality Assessment Framework

A comprehensive data quality assessment framework is crucial for monitoring and improving data quality in AI systems. This framework should encompass various aspects, including accuracy, completeness, consistency, timeliness, and validity. A robust data quality assessment framework can be structured as follows:

- Data Profiling: This step involves analyzing the characteristics of the data, including data types, distributions, missing values, and outliers. This helps identify potential data quality issues and understand the data’s overall health.

- Data Validation: This step ensures that the data conforms to predefined rules and constraints. This includes verifying data types, ranges, and relationships between different data elements. Validation helps detect and correct inconsistencies and errors in the data.

- Data Cleansing: This step involves correcting or removing erroneous or incomplete data. This can include handling missing values, resolving inconsistencies, and transforming data into a consistent format. Data cleansing enhances the quality and reliability of the data used for AI model training and evaluation.

- Data Monitoring: This step involves continuously tracking data quality metrics over time. This helps identify potential drifts or changes in data quality that could impact the performance of AI models. Continuous monitoring enables proactive measures to address emerging data quality issues.

Data quality is not a one-time event but an ongoing process that requires continuous monitoring and improvement.
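The four steps of the assessment framework (profiling, validation, cleansing, and monitoring) can be sketched in plain Python for tabular records stored as dictionaries. The `RULES` ranges, the column names, and the policy of setting invalid rows aside rather than repairing them are assumptions chosen for illustration.

```python
import math

# Hypothetical validation rules: column -> (min, max) allowed range.
RULES = {"age": (18, 100), "income": (0, 10_000_000)}


def profile(rows, columns):
    """Profiling: count missing values and record the observed min/max per column."""
    report = {}
    for col in columns:
        values = [r.get(col) for r in rows]
        present = [v for v in values if v is not None]
        report[col] = {
            "missing": len(values) - len(present),
            "min": min(present) if present else None,
            "max": max(present) if present else None,
        }
    return report


def validate(row, rules=RULES):
    """Validation: return the list of rule violations for one row."""
    errors = []
    for col, (lo, hi) in rules.items():
        value = row.get(col)
        if value is None:
            errors.append(f"{col}: missing")
        elif not lo <= value <= hi:
            errors.append(f"{col}: {value} outside [{lo}, {hi}]")
    return errors


def cleanse(rows, rules=RULES):
    """Cleansing: keep valid rows and set invalid ones aside for review instead of silently fixing them."""
    valid, rejected = [], []
    for row in rows:
        (rejected if validate(row, rules) else valid).append(row)
    return valid, rejected


def missing_rate(rows, col):
    """Monitoring metric: share of rows in which a column is missing (NaN for an empty batch)."""
    return sum(r.get(col) is None for r in rows) / len(rows) if rows else math.nan


batch = [
    {"age": 34, "income": 52000},
    {"age": 17, "income": 31000},
    {"age": 45, "income": None},
    {"age": 29, "income": 74000},
]
print(profile(batch, ["age", "income"]))
valid, rejected = cleanse(batch)
print(len(valid), len(rejected))      # 2 2
print(missing_rate(batch, "income"))  # 0.25
```

Tracking a metric such as `missing_rate` for every incoming batch, and alerting when it moves away from the value observed in the training data, is one simple way to implement the continuous monitoring step.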

Data Security and Privacy in AI Systems

The rise of AI systems has ushered in a new era of data-driven innovation, but with this advancement comes a heightened need for robust data security and privacy practices. The very nature of AI, which relies on vast datasets to learn and make predictions, presents unique challenges in safeguarding sensitive information.

Data Security Challenges in AI Systems

AI systems often require access to sensitive data, such as personal information, financial records, and medical data, to function effectively. This creates a number of security risks, including:

- Data Breaches: The vast amount of data used by AI systems makes them prime targets for cyberattacks. Hackers can exploit vulnerabilities in the systems or the underlying infrastructure to gain unauthorized access to sensitive data. For example, although it did not involve an AI system, the 2017 data breach at Equifax exposed the personal information of roughly 147 million people, highlighting the vulnerability of large datasets.

- Data Poisoning: Malicious actors can deliberately corrupt the training data used to build AI models, leading to biased or inaccurate predictions. This can have serious consequences, especially in critical applications like healthcare or finance. For example, a poisoned dataset used to train a facial recognition system could lead to false identifications and miscarriages of justice.

- Data Leakage: AI systems often process and store data in different locations, increasing the risk of data leakage. Accidental or intentional disclosure of sensitive information can have severe legal and reputational consequences. For example, an AI-powered healthcare application that stores patient data on a misconfigured cloud server can expose that data to unauthorized access.
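One basic safeguard against tampering with training data, whether through poisoning or accidental corruption, is to record cryptographic hashes of the approved dataset files and verify them before each training run. The sketch below assumes the dataset is a directory of files, and the function names are hypothetical. It only detects changes made after the manifest is created, so it cannot catch data that was already poisoned when it was approved.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_file(path, chunk_size=1 << 20):
    """Hash a file in 1 MiB chunks so large training files need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir):
    """Record a hash for every file at the time the dataset is approved."""
    root = Path(data_dir)
    return {str(p.relative_to(root)): sha256_file(p)
            for p in sorted(root.rglob("*")) if p.is_file()}


def verify_manifest(data_dir, manifest):
    """Return files that were added, removed, or modified since approval."""
    current = build_manifest(data_dir)
    added = sorted(set(current) - set(manifest))
    removed = sorted(set(manifest) - set(current))
    modified = sorted(k for k in set(current) & set(manifest) if current[k] != manifest[k])
    return {"added": added, "removed": removed, "modified": modified}


# Example: approve a dataset, then simulate tampering with one label.
with tempfile.TemporaryDirectory() as tmp:
    Path(tmp, "train.csv").write_text("age,label\n34,1\n45,0\n")
    approved = build_manifest(tmp)
    Path(tmp, "train.csv").write_text("age,label\n34,1\n45,1\n")  # label flipped
    report = verify_manifest(tmp, approved)

print(report)  # {'added': [], 'removed': [], 'modified': ['train.csv']}
```

In practice the manifest itself must be stored where the people or processes that can modify the data cannot also rewrite it.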

Data Privacy Challenges in AI Systems

The use of data in AI systems raises significant privacy concerns, particularly regarding:

- Data Collection and Use: AI systems often collect vast amounts of data, including personal information, without explicit consent. This raises concerns about the transparency and accountability of data collection practices. For example, facial recognition systems used in public spaces collect and analyze images of individuals without their knowledge or consent.

- Data Retention and Sharing: AI systems may retain sensitive data for extended periods, raising concerns about the long-term storage and potential misuse of personal information. Additionally, the sharing of data between different AI systems or organizations can increase the risk of privacy violations.

- Algorithmic Bias: AI algorithms trained on biased data can perpetuate existing societal inequalities and discriminate against certain groups. This can have significant consequences in areas like employment, lending, and criminal justice. For example, a study found that facial recognition systems were less accurate at identifying people of color than white individuals, highlighting the potential for algorithmic bias to exacerbate racial disparities.

Strategies for Protecting Sensitive Data in AI Systems

To address the security and privacy challenges posed by AI systems, it is crucial to implement robust data protection strategies, including:

- Encryption: Encrypting data both at rest and in transit can help protect it from unauthorized access. This involves using strong encryption algorithms to scramble data, making it unreadable to anyone without the appropriate decryption key. For example, using Transport Layer Security (TLS) to encrypt data transmitted over the internet can help prevent eavesdropping and data interception.

- Access Control: Implementing strict access control mechanisms can limit who has access to sensitive data. This involves assigning different levels of access based on user roles and responsibilities. For example, only authorized personnel should have access to sensitive patient data in a healthcare AI system.

- Anonymization Techniques: Anonymization techniques help protect the privacy of individuals by removing or masking personally identifiable information. Common approaches include data aggregation, generalization, and differential privacy. For example, replacing specific dates of birth with age ranges reduces the risk of re-identification while still allowing for useful analysis.
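The generalization example above, together with differential privacy, can be sketched as follows. `age_band` replaces a birth date with a ten-year age range, and `private_count` applies the Laplace mechanism to a counting query, adding noise with scale 1/epsilon because adding or removing one person changes a count by at most 1. The function names, the band width, and the epsilon value are illustrative assumptions. Python's `random` module is not a cryptographically secure source of randomness, so a production differential-privacy system should use a vetted library.

```python
import random
from datetime import date


def age_band(birth_date, today, width=10):
    """Generalization: replace an exact birth date with a coarse age range."""
    had_birthday = (today.month, today.day) >= (birth_date.month, birth_date.day)
    age = today.year - birth_date.year - (0 if had_birthday else 1)
    lower = (age // width) * width
    return f"{lower}-{lower + width - 1}"


def laplace_noise(scale, rng):
    """Laplace(0, scale) sample: the difference of two i.i.d. exponentials with mean `scale`."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(true_count, epsilon, rng):
    """Laplace mechanism for a counting query (sensitivity 1): noise scale is 1/epsilon."""
    return true_count + laplace_noise(1 / epsilon, rng)


today = date(2024, 6, 1)
print(age_band(date(1988, 9, 15), today))  # 30-39

rng = random.Random(0)  # seeded only so the example is reproducible
print(private_count(120, epsilon=0.5, rng=rng))
```

Smaller epsilon values give stronger privacy but noisier answers. Generalization alone, by contrast, offers no formal guarantee, since combinations of coarse attributes can still single out individuals.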

Data Security and Privacy Regulations

A number of regulations have been introduced to address the security and privacy challenges posed by AI systems. These regulations often include requirements for data security, privacy, transparency, and accountability.

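| Regulation | Key Requirements |
|---|---|
| General Data Protection Regulation (GDPR) | A lawful basis, such as consent, for processing personal data; data minimization and purpose limitation; individuals’ rights to access, rectify, and erase their data |
| California Consumer Privacy Act (CCPA) | Consumers’ rights to know what personal data is collected, to delete it, and to opt out of its sale |
| Health Insurance Portability and Accountability Act (HIPAA) | Administrative, physical, and technical safeguards for protected health information; limits on its use and disclosure; breach notification |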

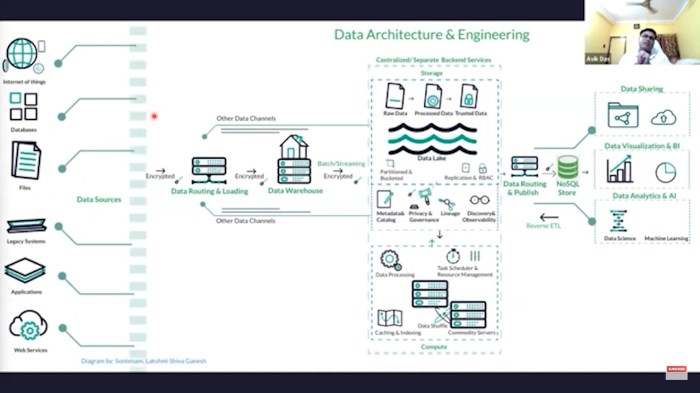

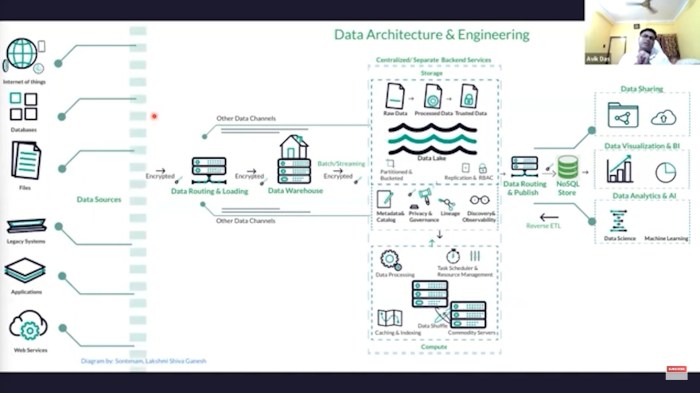

Data Management and Infrastructure for AI Systems

AI systems are data-hungry beasts, requiring vast amounts of information to learn, adapt, and deliver accurate results. This calls for robust data management practices and infrastructure that can handle the unique demands of AI development and deployment.

Data Volume, Velocity, and Variety

The sheer volume, velocity, and variety of data used in AI systems present significant challenges for traditional data management approaches.

- Data Volume: AI models often require massive datasets, sometimes spanning terabytes or even petabytes. This necessitates efficient storage and retrieval mechanisms.

- Data Velocity: Real-time AI applications demand the ability to process data streams at high speeds, requiring specialized data pipelines and processing frameworks.

- Data Variety: AI systems deal with diverse data types, including structured data (like databases), unstructured data (like text, images, and videos), and semi-structured data (like JSON files). This requires flexible data management tools that can handle various formats.

Data Lakes and Data Warehouses

Data lakes and data warehouses play crucial roles in storing and processing data for AI systems.

- Data Lakes: These are large repositories that store raw data in its native format, without any pre-processing or transformation. Data lakes are ideal for storing vast amounts of data from diverse sources, including sensor data, social media feeds, and log files. They enable exploration and experimentation with different datasets without imposing rigid schemas.

- Data Warehouses: These are structured repositories designed for analytical reporting and business intelligence. Data warehouses often organize data in dimensional models such as star schemas, which support efficient querying and analysis. While not as flexible as data lakes, data warehouses are well-suited for storing and analyzing the structured data used to train and evaluate AI models.
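A star schema can be illustrated with Python's built-in `sqlite3` module: a central fact table of loan events references dimension tables for customers and dates, and analytical queries join and aggregate across them. The table and column names are hypothetical, and SQLite stands in here only for illustration; data warehouses typically run on dedicated analytical database engines.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
cur = conn.cursor()

# Dimension tables describe the "who" and "when"; the fact table holds measurable events.
cur.executescript("""
CREATE TABLE dim_customer (customer_key INTEGER PRIMARY KEY, region TEXT);
CREATE TABLE dim_date (date_key INTEGER PRIMARY KEY, year INTEGER, month INTEGER);
CREATE TABLE fact_loan (
    customer_key INTEGER REFERENCES dim_customer(customer_key),
    date_key INTEGER REFERENCES dim_date(date_key),
    amount REAL,
    approved INTEGER
);
""")

cur.executemany("INSERT INTO dim_customer VALUES (?, ?)", [(1, "north"), (2, "south")])
cur.executemany("INSERT INTO dim_date VALUES (?, ?, ?)", [(202401, 2024, 1), (202402, 2024, 2)])
cur.executemany(
    "INSERT INTO fact_loan VALUES (?, ?, ?, ?)",
    [(1, 202401, 10000, 1), (2, 202401, 8000, 0), (2, 202402, 12000, 1)],
)

# A typical analytical query: join the fact table to its dimensions and aggregate,
# e.g. to build a per-region, per-month summary for model evaluation.
rows = cur.execute("""
SELECT c.region, d.month, COUNT(*) AS loans, AVG(f.approved) AS approval_rate
FROM fact_loan AS f
JOIN dim_customer AS c ON f.customer_key = c.customer_key
JOIN dim_date AS d ON f.date_key = d.date_key
GROUP BY c.region, d.month
ORDER BY c.region, d.month
""").fetchall()

for row in rows:
    print(row)
conn.close()
# ('north', 1, 1, 1.0)
# ('south', 1, 1, 0.0)
# ('south', 2, 1, 1.0)
```

Keeping descriptive attributes in dimension tables means the fact table stays narrow, and the same facts can be sliced by any dimension without duplicating data.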

Data Lifecycle Management in AI Systems

The data lifecycle in an AI system encompasses various stages, from data collection to model deployment and monitoring.

- Data Collection: This involves gathering data from various sources, including sensors, APIs, databases, and web scraping. The quality and relevance of collected data are crucial for the accuracy and effectiveness of AI models.

- Data Cleaning and Preprocessing: This stage involves removing errors, inconsistencies, and irrelevant data from the collected dataset. Common preprocessing techniques include normalization, encoding categorical variables, and handling missing values.

- Data Exploration and Feature Engineering: This involves analyzing the data to understand its characteristics and identify patterns and relationships. Feature engineering involves creating new features from existing ones to improve the model’s performance.

- Model Training and Evaluation: The cleaned and preprocessed data is used to train the AI model. The model’s performance is then evaluated on held-out data using metrics like accuracy, precision, and recall.

- Model Deployment and Monitoring: Once trained, the model is deployed into production, where it can be used to make predictions or decisions. Continuous monitoring of the model’s performance is essential to ensure its accuracy and effectiveness over time.
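Continuous monitoring often includes checking whether the distribution of production inputs has drifted away from the training data. The sketch below computes the Population Stability Index (PSI), one common drift measure, for a single numeric feature using fixed bin edges. The bin edges, the small floor that avoids taking the logarithm of zero, and the example ages are assumptions for illustration.

```python
import bisect
import math


def population_stability_index(expected, actual, edges, floor=1e-6):
    """PSI between a reference sample (e.g. training data) and a production sample.

    `edges` are ascending bin boundaries; values outside [edges[0], edges[-1]] are ignored.
    """
    n_bins = len(edges) - 1

    def proportions(values):
        counts = [0] * n_bins
        for v in values:
            i = bisect.bisect_right(edges, v) - 1
            if i == n_bins and v == edges[-1]:
                i -= 1  # include the upper boundary in the last bin
            if 0 <= i < n_bins:
                counts[i] += 1
        total = max(sum(counts), 1)
        # Floor empty bins so the logarithm below is always defined.
        return [max(c / total, floor) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


training_ages = [22, 25, 31, 35, 38, 41, 45, 52, 58, 63]
production_ages = [45, 48, 51, 55, 57, 60, 62, 64, 66, 69]
edges = [18, 30, 45, 60, 75]

psi = population_stability_index(training_ages, production_ages, edges)
print(f"PSI = {psi:.2f}")
```

A commonly cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, though thresholds should be calibrated per feature and use case. In this example the production ages are concentrated in the upper bins, so the index lands far above that level.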

Workflow Diagram

The data lifecycle in an AI system flows through the following stages:

Data Collection → Data Cleaning and Preprocessing → Data Exploration and Feature Engineering → Model Training and Evaluation → Model Deployment and Monitoring

The data lifecycle in an AI system is a continuous process, with feedback loops enabling model improvement and adaptation to changing data patterns.

Data Ethics and Bias in AI Systems

The advent of AI systems has brought about a new era of technological advancement, but it has also raised crucial ethical considerations, particularly concerning the use of data. AI systems are trained on vast amounts of data, and if this data reflects existing societal biases, the AI systems themselves can perpetuate and even amplify those biases, leading to discriminatory outcomes.

Addressing Bias in AI Data Sets and Models

Mitigating bias in AI systems requires a multifaceted approach that addresses bias at various stages of the AI development lifecycle. This includes:

- Data Augmentation: This technique involves expanding the training data set with additional data points that represent underrepresented groups or counterbalance existing biases. For example, if an AI system for loan approvals is trained on a data set that primarily reflects the financial history of white males, data augmentation could involve adding data points from diverse demographics to ensure a more balanced representation.

- Fairness-Aware Algorithms: These algorithms are specifically designed to minimize bias in the decision-making process. They employ techniques such as “fairness constraints” that ensure the model’s predictions are equitable across different groups. For instance, in a hiring system, a fairness-aware algorithm could ensure that the selection process is not biased against candidates based on their gender or ethnicity.

- Ethical Review Processes: Establishing robust ethical review processes is essential to ensure that AI systems are developed and deployed responsibly. These processes involve independent evaluation of the data, algorithms, and potential impacts of the system to identify and address any ethical concerns. For example, an ethical review board could assess the potential for bias in a facial recognition system before its deployment, ensuring that it does not disproportionately target certain racial or ethnic groups.
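One simple form of the data augmentation idea above is random oversampling: rows from underrepresented groups are resampled with replacement until each group is as large as the largest one. Note that it duplicates existing records rather than adding genuinely new information, and it should be applied only to the training split, never to evaluation data. The group key and the data below are hypothetical.

```python
import random
from collections import Counter, defaultdict


def oversample_by_group(rows, group_key, rng):
    """Resample rows of smaller groups (with replacement) until every group matches the largest."""
    by_group = defaultdict(list)
    for row in rows:
        by_group[row[group_key]].append(row)
    target = max(len(members) for members in by_group.values())
    balanced = []
    for members in by_group.values():
        balanced.extend(members)  # keep every original row
        balanced.extend(rng.choice(members) for _ in range(target - len(members)))
    return balanced


# Hypothetical training split: group "b" is underrepresented.
train_rows = [{"group": "a", "label": i % 2} for i in range(6)] + [
    {"group": "b", "label": 0},
    {"group": "b", "label": 1},
]
balanced = oversample_by_group(train_rows, "group", random.Random(42))
print(dict(Counter(r["group"] for r in balanced)))  # {'a': 6, 'b': 6}
```

Because duplicated rows can lead a model to overfit to the few examples it has for a group, collecting more representative data remains preferable when it is feasible.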

The Role of Data Governance in Ethical AI

Data governance plays a crucial role in fostering ethical and responsible AI systems. It provides a framework for:

- Data Quality and Integrity: Data governance ensures that the data used to train AI systems is accurate, complete, and reliable. This helps to mitigate bias by ensuring that the data reflects reality as closely as possible. For example, data governance policies can establish procedures for data validation and verification to ensure that the data used for training an AI system for medical diagnosis is accurate and free from errors.

- Data Transparency and Accountability: Data governance promotes transparency by requiring clear documentation of the data sources, algorithms, and decision-making processes used in AI systems. This transparency enables stakeholders to understand how the system works and identify any potential biases. For instance, a data governance framework could mandate the publication of reports detailing the data sources and algorithms used in an AI system for loan approvals, allowing for scrutiny and accountability.

- Data Security and Privacy: Data governance ensures the secure and responsible handling of personal data used in AI systems. This is crucial for protecting individual privacy and mitigating potential harm from biased or discriminatory outcomes. For example, data governance policies can require encryption and access control measures to safeguard sensitive data used in a healthcare AI system, preventing unauthorized access and potential misuse.

Data Governance and AI Regulation

The rapid development and deployment of AI systems have brought forth a critical need for robust data governance frameworks to ensure responsible and ethical use of data. This is particularly important as AI systems are increasingly integrated into various aspects of our lives, influencing decision-making in domains such as healthcare, finance, and law enforcement.

The Emerging Landscape of Regulations and Standards

The evolving landscape of regulations and standards is crucial for guiding the development and deployment of AI systems. These regulations aim to address concerns regarding data privacy, security, transparency, and fairness.

- General Data Protection Regulation (GDPR): Enacted by the European Union, GDPR sets stringent requirements for data protection and privacy, affecting how organizations collect, process, and store personal data. This regulation has significant implications for AI systems that rely on personal data, requiring organizations to comply with principles such as data minimization and purpose limitation and to have a lawful basis, such as consent, for processing personal data.

- California Consumer Privacy Act (CCPA): Similar to GDPR, CCPA grants California residents certain rights regarding their personal data, including the right to know what data is collected, the right to delete data, and the right to opt out of the sale of their data. This regulation has implications for AI systems that use data about California residents, demanding transparency and control over data usage.

- AI Act (EU): The European Union’s AI Act, adopted in 2024, establishes a comprehensive regulatory framework for AI systems. It addresses potential risks associated with AI, including bias, discrimination, and lack of transparency, through a risk-based approach that categorizes AI systems by their potential impact and imposes specific requirements on high-risk systems.

- NIST AI Risk Management Framework: The National Institute of Standards and Technology (NIST) has developed a framework for managing the risks associated with AI systems. This framework provides a comprehensive approach to identifying, assessing, and mitigating risks related to data quality, bias, security, and privacy.

Impact of Regulations on AI Development and Deployment

Regulations like GDPR and CCPA have a significant impact on the development and deployment of AI systems, particularly those relying on personal data. These regulations have led to:

- Increased focus on data privacy and security: Organizations are compelled to implement robust data security measures and ensure compliance with data privacy regulations, which affects how they collect, store, and process data for AI systems.

- Greater transparency and accountability: Regulations increasingly demand transparency about AI algorithms and decision-making processes. Organizations may be required to explain how AI systems reach their decisions and what data was used to train them.

- Emphasis on fairness and bias mitigation: Regulations emphasize the need to address bias and discrimination in AI systems, promoting fairness and equity in decision-making. This has led to increased efforts in developing techniques for bias detection and mitigation in AI models.

- Challenges for data collection and use: Regulations like GDPR and CCPA restrict how personal data may be collected and used, for example by requiring a lawful basis such as consent under GDPR, requiring businesses to honor opt-out requests under CCPA, and limiting the use of certain sensitive categories of data. This can affect the availability and quality of data for training AI models.

Industry Best Practices and Compliance Frameworks

Several industry best practices and compliance frameworks have emerged to guide organizations in implementing data governance for AI systems.

- Data Governance Frameworks: Frameworks such as the Data Governance Institute’s (DGI) Data Governance Framework and the ISO/IEC 38500 standard for the governance of IT provide guidance on establishing and overseeing governance processes. These frameworks cover aspects like data policy development, data quality management, data security, and data risk assessment.

- Privacy by Design: This principle emphasizes incorporating privacy considerations into the design and development of AI systems from the outset. It involves proactively implementing privacy-enhancing technologies and ensuring data minimization and purpose limitation.

- Transparency and Explainability: Organizations are encouraged to provide clear explanations of how AI systems work and the data used to train them. This promotes trust and accountability, enabling users to understand the basis for AI-driven decisions.

- Data Ethics and Bias Mitigation: Implementing data ethics principles and developing strategies for bias mitigation are crucial for ensuring fairness and equity in AI systems. This involves identifying and addressing potential sources of bias in data and algorithms.