Def Con Hackers Embrace Generative AI

Def Con, the legendary hacking conference, has always been at the forefront of cybersecurity innovation. This article looks at how the hackers who gather there are embracing generative AI, and what that means for attackers and defenders alike.

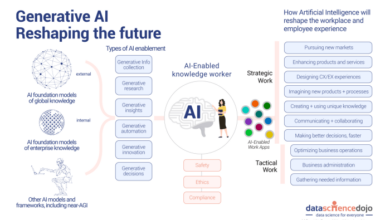

Now, generative AI is taking center stage, captivating the minds of hackers and sparking a new era of possibilities. Generative AI, with its ability to create novel content, has ignited both excitement and apprehension within the hacking community.

From crafting realistic phishing emails to generating complex malware, the applications of generative AI in both ethical and malicious hacking are vast and evolving. The use of generative AI at Def Con is not merely a trend; it’s a reflection of the dynamic landscape of cybersecurity, where both defenders and attackers are constantly adapting to new technologies.

Def Con Hackers and Generative AI

Def Con, the infamous hacking convention, has long been a hub for cybersecurity professionals, researchers, and enthusiasts to showcase their skills, share knowledge, and push the boundaries of security. With the rise of generative AI, this year’s Def Con promises to be even more exciting, as hackers explore the potential of this powerful technology for both ethical and malicious purposes.

Generative AI in Cybersecurity

Generative AI, a type of artificial intelligence that can create new content, is quickly gaining traction in the cybersecurity world. This technology can be used to generate realistic phishing emails, malware code, and even fake social media profiles, making it a potent tool for malicious actors.

On the other hand, generative AI can also be used for ethical purposes, such as creating security training simulations, detecting vulnerabilities in code, and even generating security awareness campaigns.

Potential Applications of Generative AI at Def Con

The use of generative AI at Def Con is expected to be a major topic of discussion, with potential applications spanning both ethical and malicious use cases.

Ethical Applications

- Creating Realistic Security Training Simulations: Generative AI can be used to create realistic phishing emails, malware code, and other security threats that can be used to train security professionals and test their ability to identify and respond to attacks.

- Detecting Vulnerabilities in Code: Generative AI can be used to analyze code and identify potential vulnerabilities that might be missed by traditional security tools.

- Generating Security Awareness Campaigns: Generative AI can be used to create engaging and informative security awareness campaigns that can help educate users about cybersecurity best practices.
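For comparison, the traditional security tools mentioned above often amount to rule-based checks like the following minimal sketch. It is not a generative model: it uses Python’s standard `ast` module to flag `execute`-style calls whose SQL query is assembled with `+`, `%`, `.format()`, or an f-string, a common sign of SQL injection risk. The method names in `SQL_METHODS` and the heuristics themselves are assumptions for illustration.

```python
import ast

# Method names that typically run SQL; an assumption for this sketch.
SQL_METHODS = {"execute", "executemany", "executescript"}


def _is_dynamic_string(node: ast.AST) -> bool:
    """True if the node builds a string at runtime (f-string, +, %, .format)."""
    if isinstance(node, ast.JoinedStr):  # f"... {x} ..."
        return any(isinstance(v, ast.FormattedValue) for v in node.values)
    if isinstance(node, ast.BinOp) and isinstance(node.op, (ast.Add, ast.Mod)):
        return True  # "..." + x  or  "..." % x
    if (
        isinstance(node, ast.Call)
        and isinstance(node.func, ast.Attribute)
        and node.func.attr == "format"
    ):
        return True  # "...".format(x)
    return False


def find_sql_injection_risks(source: str) -> list[int]:
    """Return line numbers where a SQL method receives a dynamically built query."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Attribute)
            and node.func.attr in SQL_METHODS
            and node.args
            and _is_dynamic_string(node.args[0])
        ):
            findings.append(node.lineno)
    return sorted(findings)
```

Parameterized calls such as `cur.execute("SELECT * FROM users WHERE name = ?", (name,))` are not flagged. Because the check only inspects the syntax at the call site, a query built on an earlier line and passed in as a variable slips through, which is exactly the kind of gap a smarter analysis would need to close.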

Malicious Applications

- Generating Realistic Phishing Emails: Generative AI can be used to create highly convincing phishing emails that are difficult to distinguish from legitimate communications.

- Creating Malware Code: Generative AI can be used to create new and sophisticated malware code that can evade detection by traditional security tools.

- Generating Fake Social Media Profiles: Generative AI can be used to create fake social media profiles that can be used to spread misinformation or launch social engineering attacks.

Generative AI Tools Used by Def Con Hackers

Generative AI tools have become increasingly popular among Def Con hackers, offering a unique set of capabilities that can be leveraged for both offensive and defensive security purposes. These tools are designed to create new content, such as text, images, audio, and code, and can be used to automate tasks, generate realistic-looking data, and even help discover new security vulnerabilities.

Text Generation Tools

Text generation tools are a common category of generative AI used by Def Con hackers. These tools can be used to create realistic-looking phishing emails, generate social engineering scripts, and even create convincing fake news articles.

- GPT-3: Developed by OpenAI, GPT-3 is a powerful language model capable of generating human-quality text. Hackers can use GPT-3 to create realistic-looking phishing emails or generate convincing social engineering scripts, making them more difficult to detect.

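- BERT: Another popular language model, BERT (Bidirectional Encoder Representations from Transformers), is known for its ability to understand the context of text. Because it is an encoder designed for understanding rather than free-form generation, hackers are more likely to use BERT to score or classify text, for example to check which draft of a message is more likely to convince a human reader, than to write the text itself.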

Image Generation Tools

Image generation tools allow hackers to create realistic-looking images, which can be used for various purposes. These tools can be used to create fake social media profiles, generate images for phishing attacks, or even create fake security camera footage.

- DALL-E 2: DALL-E 2, developed by OpenAI, can generate realistic images from text descriptions. Hackers could use DALL-E 2 to create images for social engineering attacks, creating fake identities or manipulating existing images.

- Stable Diffusion: This open-source image generation tool is known for its ability to generate high-quality images based on user prompts. Hackers could use Stable Diffusion to create images for phishing attacks or to generate fake security camera footage.

Code Generation Tools

Code generation tools can be used to automate the process of writing code, which can be beneficial for both offensive and defensive security purposes. These tools can be used to create exploits, generate security scripts, and even create custom malware.

- GitHub Copilot: This AI-powered code completion tool can suggest code snippets and complete lines of code based on the context of the code being written. Hackers could use GitHub Copilot to speed up the process of writing exploits or to generate code for custom malware.

- TabNine: Another AI-powered code completion tool, TabNine likewise suggests snippets and completes lines based on the surrounding code. Like Copilot, it could be used to speed up exploit development or to generate code for custom malware.

Audio Generation Tools

Audio generation tools can be used to create realistic-sounding audio, which can be used for various purposes, such as creating deepfakes, generating fake audio recordings, and even creating custom sound effects.

- Jukebox: Jukebox is a generative AI model capable of generating music in various styles and genres. Hackers could use Jukebox to create fake audio recordings for social engineering attacks or to create custom sound effects for their tools.

- WaveNet: WaveNet is a deep learning model capable of generating realistic-sounding audio. Hackers could use WaveNet to create deepfakes or to generate fake audio recordings for social engineering attacks.

Ethical Hacking and Generative AI

The intersection of ethical hacking and generative AI presents a fascinating landscape where AI’s capabilities can be harnessed to enhance security practices. Generative AI models, known for their ability to create realistic and complex data, can be leveraged to augment ethical hacking efforts, particularly in penetration testing.

Using Generative AI for Penetration Testing

Generative AI can play a significant role in penetration testing by automating tasks, generating realistic attack scenarios, and even suggesting potential vulnerabilities. Here’s how:

- Automated Vulnerability Scanning: Generative AI can be trained on vast datasets of known vulnerabilities and attack patterns. This knowledge base allows it to automatically scan systems and applications, identifying potential weaknesses that might otherwise go unnoticed. For example, a model could analyze code repositories, searching for common vulnerabilities like SQL injection or cross-site scripting (XSS) flaws.

- Realistic Attack Simulation: Generative AI can build realistic attack scenarios by producing simulated network traffic, malicious code, and social engineering tactics. These simulations help security professionals understand how attackers might exploit vulnerabilities and test the effectiveness of their defenses. For example, a generative AI model could create a convincing phishing email campaign, complete with realistic social engineering techniques, to evaluate how susceptible a company’s employees are.

- Vulnerability Discovery: By analyzing vast amounts of data, generative AI can identify patterns and anomalies that might indicate hidden vulnerabilities. It can also generate new attack vectors that traditional security tools might miss. For instance, a generative AI model could analyze network traffic patterns to detect unusual behavior that might signal a previously unknown vulnerability.
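The traffic-analysis idea in the last bullet can be approximated, for illustration only, with a classical statistical detector rather than a generative model. This sketch flags seconds whose connection count has a z-score above a threshold, and includes a small synthetic-traffic helper so it runs without real data. The function names, the Gaussian baseline, the burst size, and the threshold are all assumptions.

```python
import random
import statistics


def synthetic_counts(seconds: int, baseline: float, burst_at: int,
                     burst_size: int, seed: int = 0) -> list[int]:
    """Hypothetical helper: per-second connection counts with one injected burst."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    counts = []
    for t in range(seconds):
        # Normal traffic: Gaussian noise around the baseline, clipped at zero.
        count = max(0, round(rng.gauss(baseline, baseline * 0.1)))
        if t == burst_at:
            count += burst_size  # simulated scan or flood
        counts.append(count)
    return counts


def detect_spikes(counts: list[int], threshold: float = 4.0) -> list[int]:
    """Return the indices whose z-score against the whole series exceeds the threshold."""
    mean = statistics.fmean(counts)
    stdev = statistics.pstdev(counts)
    if stdev == 0:
        return []  # perfectly flat traffic has no outliers
    return [i for i, c in enumerate(counts) if (c - mean) / stdev > threshold]
```

For example, `detect_spikes(synthetic_counts(300, 50, 120, 500))` returns `[120]`, the injected burst. A plain z-score is easy to reason about but is itself inflated by large outliers; robust variants based on the median are a common refinement.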

Hypothetical Scenario: Generative AI Assists in Vulnerability Identification

Consider a hypothetical scenario where a company is developing a new online banking platform. Before launching the platform, they want to conduct a thorough penetration test to identify any potential vulnerabilities. They decide to use a generative AI model trained on real-world attack data and banking platform vulnerabilities.

The generative AI model analyzes the platform’s code, network configuration, and user interface. It identifies a potential vulnerability in the platform’s authentication system: the model suggests that an attacker could exploit a weakness in the password hashing algorithm to gain unauthorized access to user accounts.

Based on this information, the company’s security team investigates the vulnerability further. They confirm the model’s findings and implement a stronger password hashing algorithm to mitigate the risk.
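The scenario does not say which algorithm replaced the weak one. As a minimal sketch of one widely used option, here is salted PBKDF2-HMAC-SHA256 from Python’s standard `hashlib`, with a constant-time comparison on verification. The algorithm choice, the 16-byte salt, and the iteration count are assumptions; bcrypt, scrypt, and Argon2 are common alternatives.

```python
import hashlib
import hmac
import os
from typing import Optional

# Illustrative work factor (an assumption); tune it to your hardware and current guidance.
ITERATIONS = 600_000


def hash_password(password: str, salt: Optional[bytes] = None) -> tuple[bytes, bytes]:
    """Derive a salted PBKDF2-HMAC-SHA256 hash; returns (salt, digest)."""
    if salt is None:
        salt = os.urandom(16)  # unique random salt per password
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Recompute the hash with the stored salt and compare in constant time."""
    _, digest = hash_password(password, salt)
    return hmac.compare_digest(digest, expected)
```

The salt and digest are stored together; at login, `verify_password` re-derives the digest from the submitted password and the stored salt. The high iteration count is what makes offline guessing expensive.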

Generating Realistic and Complex Attack Simulations

Generative AI can be used to create realistic and complex attack simulations, helping security professionals better understand how attackers might operate. This involves generating various attack elements, including:

- Realistic Network Traffic: Generative AI models can create realistic network traffic patterns, simulating the behavior of malicious actors. This allows security professionals to test their intrusion detection systems and network security tools against realistic attack scenarios.

- Malicious Code Generation: Generative AI can generate realistic malicious code, such as exploits, malware, and phishing emails. This enables security professionals to test their antivirus software and other security measures against a wide range of threats.

- Social Engineering Tactics: Generative AI can create realistic social engineering scenarios, such as phishing emails, malicious websites, and even fake social media profiles. This helps security professionals train their employees on how to identify and avoid social engineering attacks.

For example, a generative AI model could be used to create a realistic phishing campaign that targets a specific organization. The model could generate convincing emails, websites, and even social media posts, designed to trick employees into revealing sensitive information.

This simulation would allow the organization to test its security awareness training and identify any vulnerabilities in its phishing detection systems.
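Once an authorized simulation like this has run, its results are usually summarized as simple rates. A minimal sketch, assuming each recipient’s outcome is recorded as whether they clicked the link and whether they reported the email; the `SimulationResult` record and the `summarize` helper are hypothetical names, not part of any specific training platform.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class SimulationResult:
    """Hypothetical record: one recipient's outcome in a simulated phishing test."""
    department: str
    clicked: bool
    reported: bool


def summarize(results: list[SimulationResult]) -> dict[str, dict[str, float]]:
    """Per-department click rate and report rate, as fractions of recipients."""
    totals = defaultdict(lambda: {"sent": 0, "clicked": 0, "reported": 0})
    for r in results:
        t = totals[r.department]
        t["sent"] += 1
        t["clicked"] += r.clicked    # bool counts as 0 or 1
        t["reported"] += r.reported
    return {
        dept: {
            "click_rate": t["clicked"] / t["sent"],
            "report_rate": t["reported"] / t["sent"],
        }
        for dept, t in totals.items()
    }
```

A high click rate points at gaps in awareness training, while a low report rate suggests employees do not know how, or do not bother, to escalate suspicious messages.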

Malicious Applications of Generative AI

Generative AI, with its ability to create realistic and convincing content, poses a significant threat in the hands of malicious actors. These actors can leverage the power of generative AI to craft sophisticated phishing campaigns, generate realistic malware and exploit code, and even create deepfakes for social engineering purposes.

Phishing Campaigns

Generative AI can be used to create highly convincing phishing emails and websites that are virtually indistinguishable from legitimate ones.

- Realistic Email Content: Generative AI can generate highly persuasive email content that mimics the writing style and tone of legitimate organizations. This makes it harder for users to detect phishing attempts.

- Personalized Phishing Attacks: Generative AI can be used to create personalized phishing emails that target specific individuals. By analyzing social media profiles and other publicly available information, malicious actors can tailor phishing emails to individual preferences and interests, increasing the likelihood of success.

- Sophisticated Phishing Websites: Generative AI can be used to create highly realistic phishing websites that mimic the look and feel of legitimate websites. This makes it difficult for users to discern the difference between a legitimate website and a phishing site.
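When a fake site looks right, signals that do not depend on appearance matter more. As one illustrative defensive counterpart, a mail filter or browser extension might compare a site’s domain against known legitimate domains and flag near misses such as `examp1e.com`. The sketch below uses Levenshtein edit distance; the distance threshold and the domain list are assumptions, and real lookalike detection also has to handle homoglyphs and subdomain tricks.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance via dynamic programming over two rolling rows."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # deletion
                curr[j - 1] + 1,           # insertion
                prev[j - 1] + (ca != cb),  # substitution (0 if characters match)
            ))
        prev = curr
    return prev[-1]


def is_lookalike(domain: str, legitimate: list[str], max_distance: int = 2) -> bool:
    """True if the domain is close to, but not exactly, a known legitimate domain."""
    domain = domain.lower()
    return any(0 < edit_distance(domain, good) <= max_distance for good in legitimate)
```

An exact match (distance 0) is treated as legitimate, and anything more than `max_distance` edits away is treated as unrelated; only the near misses in between are flagged.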

Malware Generation

Generative AI can be used to generate highly effective malware that is difficult to detect and analyze.

- Zero-Day Exploits: Generative AI can be used to create new and unknown exploits that bypass traditional security measures. This makes it difficult for security researchers to develop defenses against these attacks.

- Customizable Malware: Generative AI can be used to create customized malware that targets specific vulnerabilities in specific systems. This allows malicious actors to create highly effective attacks that are tailored to their specific goals.

- Evasion Techniques: Generative AI can be used to create malware that can evade detection by antivirus software and other security tools. This allows malicious actors to deploy malware that can remain undetected for extended periods.

The Future of Generative AI in Cybersecurity

Generative AI, with its capacity to create realistic and complex data, is poised to reshape the cybersecurity landscape. Its potential applications extend beyond traditional security measures, promising both innovative defenses and sophisticated attack vectors.

The Impact of Generative AI on Ethical Hacking

Generative AI can significantly enhance ethical hacking activities by automating tasks, generating realistic attack scenarios, and accelerating vulnerability discovery. Ethical hackers can leverage these tools to:

- Generate Realistic Attack Scenarios: Generative AI can create realistic attack simulations, mimicking the actions of real-world attackers. This allows security professionals to test their defenses against a wide range of threats, identify vulnerabilities, and refine their security strategies.

- Automate Repetitive Tasks: Generative AI can automate repetitive tasks, such as code analysis, vulnerability scanning, and log analysis, freeing up ethical hackers to focus on more strategic and complex security challenges.

- Generate Customized Attack Vectors: Generative AI can create custom attack vectors tailored to specific vulnerabilities, allowing ethical hackers to test the effectiveness of their security measures in a targeted manner.
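Much of the log analysis mentioned above is plain pattern counting, the kind of routine script an AI assistant might help draft or triage. A minimal conventional sketch, assuming log lines in the OpenSSH-style `Failed password for ... from <ip>` format; the regex, the threshold, and the `flag_bruteforce` helper are hypothetical and would need adjusting to real log formats.

```python
import re
from collections import Counter

# Assumed message format, loosely modeled on OpenSSH sshd failure lines.
FAILED_LOGIN = re.compile(r"Failed password for .+ from (\d{1,3}(?:\.\d{1,3}){3})")


def flag_bruteforce(lines: list[str], threshold: int = 5) -> dict[str, int]:
    """Return source IPs whose failed-login count meets or exceeds the threshold."""
    counts: Counter[str] = Counter()
    for line in lines:
        match = FAILED_LOGIN.search(line)
        if match:
            counts[match.group(1)] += 1  # tally failures per source address
    return {ip: n for ip, n in counts.items() if n >= threshold}
```

Successful logins and unrelated messages are ignored, so only repeated failures from the same address surface for a human to review.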

The Impact of Generative AI on Malicious Hacking

Generative AI can be used by malicious actors to create more sophisticated and effective attacks, presenting a significant challenge to cybersecurity.

- Generating Realistic Phishing Emails: Generative AI can create highly convincing phishing emails that mimic the style and content of legitimate communications, making them more likely to deceive victims.

- Creating Custom Malware: Generative AI can be used to create custom malware tailored to specific targets, making it more difficult to detect and mitigate.

- Generating Fake Data: Generative AI can generate fake data, such as social media posts, news articles, and financial reports, to manipulate public opinion or create false narratives.

Challenges and Opportunities in Integrating Generative AI into Cybersecurity

The integration of generative AI into cybersecurity presents both challenges and opportunities.