Java Big Data Tools: Your Guide to Processing Massive Data

Java Big Data Tools are essential for handling the massive datasets that define our digital age. These tools empower developers to build robust and scalable applications capable of processing, analyzing, and extracting valuable insights from data at an unprecedented scale.

From the ubiquitous Apache Hadoop to the lightning-fast Apache Spark, Java offers a rich ecosystem of tools for every Big Data challenge.

This blog post delves into the world of Java Big Data tools, exploring their core concepts, popular frameworks, and best practices. We’ll cover everything from the fundamental Java concepts that power Big Data processing to building real-world applications and staying ahead of the curve with emerging trends.

Introduction to Java in Big Data

Java has emerged as a dominant force in the Big Data landscape, playing a crucial role in handling and processing vast amounts of data. Its versatility, robustness, and mature ecosystem make it an ideal choice for Big Data applications.

Advantages of Java for Big Data Processing

Java offers several advantages that make it a compelling choice for Big Data processing.

- Mature Ecosystem: Java boasts a rich ecosystem of libraries, frameworks, and tools designed for Big Data. These resources give developers ready-made solutions for common Big Data tasks, simplifying development and shortening project timelines.

- Performance: The JVM’s just-in-time (JIT) compiler and mature garbage collectors let long-running, data-intensive jobs execute efficiently, although heap size and garbage-collection behavior still need tuning for very large workloads.

- Scalability: Java’s built-in concurrency support lets one application use many cores, and because major distributed frameworks such as Hadoop, Spark, Kafka, and Flink run on the JVM, Java code can scale out across a cluster as data volumes grow.

- Community Support: Java has a vast and active community of developers who contribute to its continuous improvement and provide ample resources for learning and problem-solving.

Popular Java Libraries and Frameworks for Big Data

Java offers a wide array of libraries and frameworks specifically designed for Big Data processing, catering to diverse needs and use cases.

- Apache Hadoop: A cornerstone of the Big Data ecosystem, Hadoop provides a framework for distributed storage and processing of large datasets. Its core components, HDFS (Hadoop Distributed File System) and MapReduce, are written in Java.

- Apache Spark: A fast, general-purpose cluster computing framework widely used for Big Data processing. Spark’s core is written mainly in Scala and runs on the JVM, and it offers a Java API alongside its Scala and Python APIs.

- Apache Kafka: A distributed event streaming platform used for real-time data ingestion and processing. Kafka is written in Java and Scala, and its official client libraries are Java.

- Apache Flink: A framework for stateful stream processing that also supports batch workloads. Its runtime and primary APIs are largely written in Java, so it integrates naturally with the Java ecosystem.

- Apache Cassandra: A NoSQL database, itself written in Java, designed for high availability and scalability. Cassandra is often used for Big Data storage and retrieval, and its Java driver gives applications a convenient way to query Cassandra from Java code.
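Hadoop’s MapReduce model, mentioned above, splits a job into a map phase that emits key-value pairs, a shuffle that groups and sorts those pairs by key, and a reduce phase that combines each group. The sketch below imitates the three phases for a word count on a single JVM with plain collections, purely to make the data flow visible. It is not the Hadoop `Mapper`/`Reducer` API, and the class and method names are hypothetical.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Single-JVM illustration of the MapReduce data flow (not the Hadoop API).
class MapReduceSketch {

    // Map phase: turn one input line into (word, 1) pairs.
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String word : line.toLowerCase().split("\\s+")) {
            if (!word.isEmpty()) {
                pairs.add(new SimpleEntry<>(word, 1));
            }
        }
        return pairs;
    }

    // Shuffle phase: group emitted values by key; TreeMap keeps keys sorted.
    static Map<String, List<Integer>> shuffle(List<Map.Entry<String, Integer>> pairs) {
        Map<String, List<Integer>> groups = new TreeMap<>();
        for (Map.Entry<String, Integer> pair : pairs) {
            groups.computeIfAbsent(pair.getKey(), k -> new ArrayList<>()).add(pair.getValue());
        }
        return groups;
    }

    // Reduce phase: combine all values for one key into a single result.
    static int reduce(List<Integer> values) {
        int sum = 0;
        for (int v : values) {
            sum += v;
        }
        return sum;
    }

    static Map<String, Integer> wordCount(List<String> lines) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String line : lines) {
            pairs.addAll(map(line));
        }
        Map<String, Integer> counts = new TreeMap<>();
        shuffle(pairs).forEach((word, values) -> counts.put(word, reduce(values)));
        return counts;
    }

    public static void main(String[] args) {
        List<String> lines = List.of("big data with Java", "Java and big clusters");
        System.out.println(wordCount(lines));
        // prints {and=1, big=2, clusters=1, data=1, java=2, with=1}
    }
}
```

On a real cluster, the map and reduce functions run in parallel on many nodes, and the framework performs the shuffle over the network.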

Core Java Concepts for Big Data

Java, with its robust libraries and a vast ecosystem, plays a pivotal role in Big Data processing. Understanding core Java concepts is crucial for efficiently handling large datasets and performing complex computations. This section delves into essential Java concepts, exploring their applications in Big Data scenarios.

Data Structures

Data structures are fundamental building blocks in any programming language, and Java provides a rich set of data structures that are essential for efficient Big Data processing. Data structures are used to organize and store data in a way that allows for efficient access and manipulation.

In Big Data, data structures are crucial for managing large volumes of data and performing operations on them quickly. Here are some of the most commonly used data structures in Big Data processing:

- Arrays: Arrays are the simplest data structure in Java. They store a fixed-size sequence of elements of the same type. Arrays are efficient for storing and accessing data sequentially, which makes them suitable for tasks such as processing large datasets in batches.

- Lists: Lists are ordered collections that allow elements to be added and removed at any position. Java provides several implementations, including ArrayList and LinkedList. ArrayList offers fast random access by index, while LinkedList is efficient for insertions and deletions at the ends or through an iterator.

- Maps: Maps store key-value pairs, where each key is associated with a specific value. Java provides several implementations, including HashMap and TreeMap. HashMap offers fast lookup of values by key, while TreeMap keeps its keys in sorted order.

- Sets: Sets are collections of unique elements. Java provides several implementations, including HashSet and TreeSet. HashSet offers fast membership checks, while TreeSet keeps its elements in sorted order.
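As a small illustration of the maps and sets described above, the sketch below counts event types with a `HashMap`, copies the counts into a `TreeMap` to read them in key order, and uses a `HashSet` for membership tests. The class name and sample data are hypothetical.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;

class DataStructureSketch {

    // HashMap: average O(1) insert and lookup, but no ordering guarantee.
    static Map<String, Integer> countByType(List<String> events) {
        Map<String, Integer> counts = new HashMap<>();
        for (String event : events) {
            counts.merge(event, 1, Integer::sum); // add 1, or start at 1 if absent
        }
        return counts;
    }

    // TreeMap: keeps keys sorted, at O(log n) per operation.
    static TreeMap<String, Integer> sortedCounts(Map<String, Integer> counts) {
        return new TreeMap<>(counts);
    }

    public static void main(String[] args) {
        List<String> events = List.of("click", "view", "click", "purchase", "view", "click");
        Map<String, Integer> counts = countByType(events);
        System.out.println(sortedCounts(counts)); // prints {click=3, purchase=1, view=2}

        // HashSet: average O(1) membership checks.
        Set<String> knownUsers = new HashSet<>(List.of("alice", "bob"));
        System.out.println(knownUsers.contains("alice")); // prints true
        System.out.println(knownUsers.contains("carol")); // prints false
    }
}
```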

Collections

The Java Collections Framework is a set of interfaces and classes that provide a standard way to represent and manipulate groups of objects. Its implementations are efficient, reusable, and flexible. Because they live in a single JVM’s memory, they are best suited to the per-node parts of Big Data processing, such as buffering, grouping, or deduplicating records inside a task.

- ArrayList: An ArrayList is a resizable array. Elements can be added or removed at any position, although inserting or removing in the middle shifts the elements after it. Its fast random access by index makes it suitable for storing records that are read back by position.

- LinkedList: A LinkedList is a doubly linked list that stores elements in sequence. It is efficient for insertions and deletions at the ends, and because it implements Deque it can serve as a queue, for example to buffer records arriving from a data stream.

- HashMap: A HashMap stores key-value pairs and offers fast retrieval of values by key, which suits tasks such as indexing records by ID or aggregating counts per key.

- HashSet: A HashSet is a collection of unique elements with fast membership checks, which makes it suitable for tasks such as removing duplicates from a dataset.
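Removing duplicates, mentioned for `HashSet` above, can be sketched as follows. The example uses `LinkedHashSet`, a `HashSet` subclass not listed above that also remembers insertion order, so each record keeps the position of its first occurrence. It programs against the `List` and `Set` interfaces rather than concrete classes; names and data are illustrative.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

class DedupSketch {

    // Returns the distinct records in first-seen order.
    static List<String> distinctInOrder(List<String> records) {
        Set<String> seen = new LinkedHashSet<>(records); // duplicates are dropped on insert
        return new ArrayList<>(seen);                    // back to an indexable list
    }

    public static void main(String[] args) {
        List<String> ids = List.of("u42", "u7", "u42", "u9", "u7");
        List<String> unique = distinctInOrder(ids);
        System.out.println(unique);        // prints [u42, u7, u9]
        System.out.println(unique.get(1)); // ArrayList random access; prints u7
    }
}
```

This only works while the data fits in one JVM’s heap; for distributed datasets, frameworks such as Spark provide their own deduplication operations (for example `Dataset.distinct()`).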

Multithreading

Multithreading is a technique that allows a program to execute multiple tasks concurrently. In Big Data processing, multithreading can significantly improve performance by distributing tasks across multiple threads, allowing for parallel processing of large datasets.

- Thread: A Thread is an independent path of execution within a process; threads in the same JVM share memory. Java provides the Thread class for creating and managing threads.

- Runnable: The Runnable interface defines a task that a thread can execute. It declares a single method, run(), which contains the code to be executed.

- ExecutorService: The ExecutorService interface separates task submission from thread management. You submit tasks (Runnable, or the value-returning Callable), and a pool of worker threads executes them. The Executors factory class creates common pools, such as a fixed-size pool via Executors.newFixedThreadPool.
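The following sketch shows these pieces working together: the data is split into chunks, each chunk becomes a task, and a fixed-size pool from `Executors.newFixedThreadPool` runs the tasks in parallel. It submits `Callable` tasks so each chunk can return a partial sum; the class name and chunking scheme are illustrative assumptions.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class ParallelSumSketch {

    // Splits the array into roughly equal chunks and sums them on a thread pool.
    static long parallelSum(long[] data, int threads) {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            int chunk = (data.length + threads - 1) / threads; // ceiling division
            List<Future<Long>> partials = new ArrayList<>();
            for (int start = 0; start < data.length; start += chunk) {
                final int from = start;
                final int to = Math.min(start + chunk, data.length);
                // Each task sums only its own slice, so no shared mutable state.
                partials.add(pool.submit(() -> {
                    long sum = 0;
                    for (int i = from; i < to; i++) {
                        sum += data[i];
                    }
                    return sum;
                }));
            }
            long total = 0;
            for (Future<Long> partial : partials) {
                total += partial.get(); // blocks until that task finishes
            }
            return total;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt flag
            throw new IllegalStateException("interrupted while summing", e);
        } catch (ExecutionException e) {
            throw new IllegalStateException("a summing task failed", e.getCause());
        } finally {
            pool.shutdown(); // stop accepting tasks and let worker threads exit
        }
    }

    public static void main(String[] args) {
        long[] data = new long[1_000_000];
        for (int i = 0; i < data.length; i++) {
            data[i] = i + 1;
        }
        System.out.println(parallelSum(data, 4)); // prints 500000500000
    }
}
```

Keeping each task independent and combining results only at the end avoids locks; the same split, process, and combine shape is what distributed frameworks apply across machines.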

Popular Java Big Data Tools

Java has established itself as a dominant force in the realm of big data, offering a rich ecosystem of powerful tools that enable developers to tackle massive datasets with ease. This section delves into the prominent Java big data tools, exploring their functionalities, advantages, and use cases.


Java big data tools provide a comprehensive framework for handling massive datasets, enabling developers to perform complex operations with efficiency and scalability. These tools empower organizations to extract valuable insights from vast amounts of data, driving informed decision-making and unlocking new opportunities.


| Tool Name | Description | Key Features | Use Cases |
|---|---|---|---|
| Apache Hadoop | A distributed file system and processing framework designed for storing and analyzing massive datasets. | Distributed storage and processing | Data warehousing |
| Apache Spark | A fast, general-purpose cluster computing framework that supports both batch and real-time processing. | In-memory processing for faster execution | Real-time data analysis |
| Apache Flink | An open-source stream processing framework designed for real-time data analysis and processing. | Low-latency processing | Real-time fraud detection |
| Apache Kafka | A distributed streaming platform used for building real-time data pipelines. | High-throughput messaging | Real-time data ingestion |

Advantages and Disadvantages of Java Big Data Tools

Each Java big data tool possesses its own strengths and weaknesses, making it essential to carefully consider the specific requirements of your project before selecting the most suitable tool.

Apache Hadoop

Advantages:

Scalability

Hadoop excels in handling massive datasets, making it suitable for organizations with large data volumes.

Fault Tolerance

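HDFS replicates each data block across several nodes (three copies by default), and MapReduce reruns failed tasks on other nodes, so the cluster keeps working through individual hardware failures.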

Cost-Effectiveness

Hadoop is open source and runs on commodity hardware, so there are no licensing fees, although operating a cluster still carries hardware and staffing costs.

Disadvantages:

Batch Processing

Hadoop is primarily designed for batch processing, which may not be suitable for real-time applications.


Complexity


Setting up and managing a Hadoop cluster can be complex, requiring expertise in distributed systems.

Performance

Hadoop MapReduce writes intermediate results to disk between stages, so disk I/O often limits its performance, especially for iterative or latency-sensitive workloads.

Apache Spark

Advantages:

Speed

Spark keeps intermediate data in memory where possible, so it typically runs faster than Hadoop MapReduce, especially for iterative workloads such as machine learning.

Versatility

It supports both batch and real-time processing, making it suitable for a wider range of applications.

Integration

Spark integrates well with various data sources and tools, facilitating data flow and analysis.

Disadvantages:

Resource Intensive

Spark’s in-memory processing requires significant memory resources, which can increase costs.

Complexity

Managing Spark clusters and tuning performance can be challenging.

Limited Real-Time Capabilities

Spark Structured Streaming processes data in micro-batches by default, so its latency is typically higher than that of record-at-a-time stream processors like Flink.

Apache Flink

Advantages:

Real-Time Processing

Flink is specifically designed for low-latency stream processing, making it ideal for real-time applications.

Fault Tolerance

Flink periodically checkpoints application state and, after a failure, restores from the latest checkpoint, which gives exactly-once consistency for its internal state.

Scalability

Flink scales horizontally, enabling it to handle large volumes of data.

Disadvantages:

Learning Curve

Concepts such as event time, watermarks, and managed state give Flink a steeper learning curve than simpler batch tools.

Limited Ecosystem

Flink’s ecosystem is still evolving compared to Hadoop and Spark, with fewer available libraries and tools.

Resource Consumption

Flink can be resource-intensive, requiring sufficient memory and processing power.

Apache Kafka

Advantages:

High Throughput

Kafka excels in handling high volumes of data, making it suitable for real-time data ingestion and streaming.

Scalability

It scales horizontally, enabling it to handle increasing data volumes.

Fault Tolerance

Kafka’s distributed architecture ensures high availability and fault tolerance.

Disadvantages:

Limited Processing Capabilities

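Kafka brokers store and deliver streams of records but do not transform them; processing happens in client applications, for example with the Kafka Streams library or with engines such as Flink and Spark.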

Complexity

Managing Kafka clusters can be complex, requiring expertise in distributed systems.

Data Consistency

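Kafka guarantees ordering only within a partition, not across a whole topic. Its default delivery semantics are at-least-once, so consumers may see duplicate records after failures unless the application uses idempotent producers and transactions or deduplicates on its own.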
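Kafka’s ordering guarantee is per partition: with the default partitioner, records with the same key go to the same partition (as long as the partition count does not change), so consumers see them in the order they were written. The sketch below imitates that routing with a simplified partitioner. It is an illustration under stated assumptions: Kafka’s real default partitioner hashes the serialized key bytes with murmur2, whereas this sketch uses `String.hashCode()`, and the class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

class KeyPartitionerSketch {

    // Simplified stand-in for Kafka's keyed partitioning (Kafka itself uses murmur2 on key bytes).
    static int partitionFor(String key, int numPartitions) {
        // Math.floorMod keeps the result non-negative even when hashCode() is negative.
        return Math.floorMod(key.hashCode(), numPartitions);
    }

    public static void main(String[] args) {
        int numPartitions = 3;
        List<List<String>> partitions = new ArrayList<>();
        for (int p = 0; p < numPartitions; p++) {
            partitions.add(new ArrayList<>());
        }

        // Events for the same account always land in the same partition, in send order.
        String[][] events = {
            {"account-1", "deposit"}, {"account-2", "open"},
            {"account-1", "withdraw"}, {"account-1", "close"}
        };
        for (String[] event : events) {
            partitions.get(partitionFor(event[0], numPartitions)).add(event[0] + ":" + event[1]);
        }
        for (int p = 0; p < numPartitions; p++) {
            System.out.println("partition " + p + " -> " + partitions.get(p));
        }
    }
}
```

Records for different keys may share a partition or be spread across several, so there is no ordering between different accounts, only within each one.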

Comparison of Java Big Data Tools

Each Java big data tool has its own strengths and weaknesses, so consider the specific requirements of your project before making a selection. Here’s a comparison of the features and capabilities of these tools:

| Feature | Apache Hadoop | Apache Spark | Apache Flink | Apache Kafka |
|---|---|---|---|---|
| Processing Model | Batch | Batch & real-time (micro-batch) | Real-time streaming (batch also supported) | Streaming |
| Data Storage | Distributed File System (HDFS) | In-memory & External Storage | In-memory & External Storage | Distributed, replicated log |
| Scalability | High | High | High | High |
| Fault Tolerance | High | High | High | High |
| Integration | Limited | Extensive | Limited | Extensive |
| Use Cases | Data warehousing, log analysis, machine learning | Real-time data analysis, machine learning, data exploration | Real-time fraud detection, stream analytics, event processing | Real-time data ingestion, event streaming, message queuing |

Building Big Data Applications with Java

Java is a powerful language that can be used to build robust and scalable big data applications. Its strong typing, object-oriented nature, and rich ecosystem of libraries make it an ideal choice for handling large datasets and complex processing tasks.

In this section, we will delve into the practical aspects of building big data applications using Java, outlining a step-by-step guide and illustrating the process with code examples.

Building a Simple Big Data Application

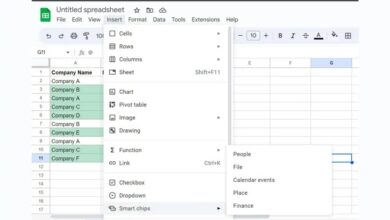

This section outlines the steps involved in building a simple big data application using the Apache Spark framework. Spark is a popular choice for big data processing due to its speed, versatility, and ease of use. It supports a variety of languages, including Java, and offers a rich API for data manipulation and analysis.

Step 1: Project Setup

Before we begin coding, we need to set up a project environment. This involves installing the necessary tools and dependencies.

- Install Java Development Kit (JDK): Ensure a JDK version supported by your Spark release is installed. You can download it from the official Oracle website or use an OpenJDK distribution.

- Install Apache Maven: Maven is a build automation tool that manages project dependencies and builds. Download and install it from the Apache Maven website.

- Create a Maven Project: Use Maven to generate a basic project skeleton (the Spark dependencies are added in the next step):

mvn archetype:generate -DgroupId=com.example -DartifactId=bigdata-app -DarchetypeArtifactId=maven-archetype-quickstart -DinteractiveMode=false

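- Add Spark Dependencies: Add Spark to the `<dependencies>` element of your project’s `pom.xml` file. `spark-core` provides the core engine, while the `SparkSession` and `Dataset` APIs used in the following steps come from `spark-sql`; add `spark-mllib_2.12` the same way if you use the machine learning example. The `_2.12` suffix is the Scala version the artifacts were built against.

<dependency> <groupId>org.apache.spark</groupId> <artifactId>spark-core_2.12</artifactId> <version>3.3.1</version> </dependency>
<dependency> <groupId>org.apache.spark</groupId> <artifactId>spark-sql_2.12</artifactId> <version>3.3.1</version> </dependency>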

Step 2: Data Ingestion

Data ingestion is the process of importing data from various sources into your application. This step is crucial for any big data application, as it determines the quality and availability of the data for processing and analysis.

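- Read Data from a File: Spark provides convenient methods for reading data from files in formats such as CSV, JSON, and Parquet.

import org.apache.spark.sql.*; // Dataset, Row, SparkSession
// Create a local SparkSession ("local[*]" uses all cores; in production, usually omit master() and set it via spark-submit)
SparkSession spark = SparkSession.builder().appName("bigdata-app").master("local[*]").getOrCreate();
// Read a CSV file with a header row, letting Spark infer the column types
Dataset<Row> data = spark.read().option("header", "true").option("inferSchema", "true").csv("path/to/data.csv");
// Read a JSON file (by default, one JSON object per line)
Dataset<Row> jsonData = spark.read().json("path/to/data.json");

- Read Data from a Database: Spark can read from JDBC-compatible databases such as MySQL and PostgreSQL through its `jdbc` data source. MongoDB is not accessed over JDBC; it has its own Spark connector.

// Read the result of a SQL query from MySQL over JDBC
// (the MySQL Connector/J driver must be on the classpath)
Dataset<Row> dbData = spark.read()
    .format("jdbc")
    .option("url", "jdbc:mysql://localhost:3306/mydatabase")
    .option("driver", "com.mysql.cj.jdbc.Driver")
    .option("user", "username")
    .option("password", "password")
    .option("query", "SELECT * FROM mytable")
    .load();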

Step 3: Data Processing

Once the data is ingested, you can process it to extract insights and transform it into a more usable format. Spark provides a wide range of operations for data manipulation, such as filtering, sorting, aggregation, and joining.

- Filtering: Keep only the rows that match a condition.

import static org.apache.spark.sql.functions.col;
// Keep rows whose "column" value equals "value"
Dataset<Row> filteredData = data.filter(col("column").equalTo("value"));

- Aggregation: Calculate summary statistics, such as count, average, sum, and minimum/maximum values.

// Average of the numeric "value" column for each distinct "column" value
Dataset<Row> aggregatedData = data.groupBy("column").avg("value");

- Joining: Combine data from multiple sources based on a common key.

// Inner join of two datasets on a column present in both
Dataset<Row> joinedData = data1.join(data2, "common_column");
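To make the semantics of these three operations concrete, the sketch below performs a filter, a grouped average, and a key-based inner join on a small in-memory list with plain Java streams. Spark runs the equivalent operations distributed across a cluster; this single-JVM version, its `Sale` record, and its sample data are hypothetical and only show what each operation computes. It assumes Java 16 or later for the record type.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

class StreamOpsSketch {

    record Sale(String region, String product, double amount) {}

    // Filtering: keep only rows that match a condition.
    static List<Sale> filterByRegion(List<Sale> sales, String region) {
        return sales.stream()
                .filter(s -> s.region().equals(region))
                .collect(Collectors.toList());
    }

    // Aggregation: average amount per product, similar in spirit to groupBy("product").avg("amount").
    static Map<String, Double> averageByProduct(List<Sale> sales) {
        return sales.stream()
                .collect(Collectors.groupingBy(Sale::product, TreeMap::new,
                        Collectors.averagingDouble(Sale::amount)));
    }

    // Joining: attach a category to each sale by matching on the product key (inner join).
    static List<String> joinWithCategory(List<Sale> sales, Map<String, String> categoryByProduct) {
        return sales.stream()
                .filter(s -> categoryByProduct.containsKey(s.product()))
                .map(s -> s.product() + "/" + categoryByProduct.get(s.product()) + "/" + s.amount())
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<Sale> sales = List.of(
                new Sale("EU", "laptop", 1000.0),
                new Sale("US", "laptop", 1200.0),
                new Sale("EU", "phone", 600.0));
        System.out.println(filterByRegion(sales, "EU").size()); // prints 2
        System.out.println(averageByProduct(sales));            // prints {laptop=1100.0, phone=600.0}
        System.out.println(joinWithCategory(sales, Map.of("laptop", "computers")));
        // prints [laptop/computers/1000.0, laptop/computers/1200.0]
    }
}
```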

Step 4: Data Analysis

After processing the data, you can perform analysis to gain insights and answer specific questions. Spark provides various tools and techniques for data analysis, such as machine learning, statistical analysis, and visualization.

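- Machine Learning: Spark’s MLlib library offers a wide range of algorithms for tasks like classification, regression, clustering, and recommendation.

// Train a linear regression model (org.apache.spark.ml.regression.LinearRegression);
// trainingData needs a vector column "features" (e.g. built with VectorAssembler) and a numeric "label" column
LinearRegressionModel model = new LinearRegression().setFeaturesCol("features").setLabelCol("label").fit(trainingData);
// Add a "prediction" column to new data that has the same "features" column
Dataset<Row> predictions = model.transform(newData);

- Statistical Analysis: Spark provides functions for calculating statistical measures such as mean, standard deviation, and correlation, and MLlib adds hypothesis tests.

import static org.apache.spark.sql.functions.avg;
// Mean of the "value" column
double mean = data.agg(avg("value")).first().getDouble(0);

- Visualization: Spark has no built-in plotting API. A common approach is to aggregate in Spark, collect the small result to the driver, and chart it with a separate library. In PySpark, for example, the result can be converted to a pandas DataFrame and plotted with Matplotlib:

# PySpark: plot an already-aggregated, small DataFrame with Matplotlib
import matplotlib.pyplot as plt
pdf = data.select("column", "value").toPandas()
plt.bar(pdf["column"], pdf["value"])
plt.show()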
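The statistical measures mentioned above can be computed in a single pass, which matters when data is too large to scan twice. As a plain-Java illustration, the sketch below uses Welford’s online algorithm for the mean and sample standard deviation. Welford’s method is a technique chosen for this example, not a description of how Spark implements `avg` or `stddev_samp` internally, and the class name is hypothetical.

```java
class RunningStatsSketch {

    private long count;
    private double mean;
    private double m2; // running sum of squared differences from the current mean

    // Welford's update: incorporates one value without storing the data set.
    void add(double x) {
        count++;
        double delta = x - mean;
        mean += delta / count;
        m2 += delta * (x - mean);
    }

    double mean() {
        return mean;
    }

    // Sample standard deviation (divides by n - 1); undefined for fewer than two values.
    double sampleStdDev() {
        return count > 1 ? Math.sqrt(m2 / (count - 1)) : Double.NaN;
    }

    public static void main(String[] args) {
        RunningStatsSketch stats = new RunningStatsSketch();
        for (double v : new double[] {2, 4, 4, 4, 5, 5, 7, 9}) {
            stats.add(v);
        }
        System.out.printf("%.4f%n", stats.mean());         // prints 5.0000
        System.out.printf("%.4f%n", stats.sampleStdDev()); // prints 2.1381
    }
}
```

Because each partial state is just (count, mean, m2), states from different partitions can also be merged, which is what makes single-pass statistics a good fit for distributed data.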

Step 5: Application Deployment

Once your application is developed and tested, you can deploy it to a production environment. Spark can run locally, on its own standalone cluster manager, or on an external cluster manager.

- Local Mode: Run the application on your local machine for development and testing.

- Standalone Mode: Deploy the application on a cluster of machines managed by Spark’s built-in standalone master. This provides more resources and scalability than a single machine.

- External Cluster Managers: Deploy the application on a cluster managed by YARN, Kubernetes, or Mesos, integrating with existing cluster resources. With `spark-submit`, `--deploy-mode cluster` runs the driver inside the cluster, while `--deploy-mode client` runs it on the machine that submits the job.

Best Practices for Java Big Data Development

Building efficient and scalable Java applications for big data requires a solid understanding of best practices. This section explores essential techniques for handling massive datasets, optimizing performance, and ensuring data integrity in your Java big data projects.

Optimizing Java Code for Big Data

Writing efficient Java code is crucial for handling large datasets. Here are some best practices:

- Minimize Object Creation: Excessive object creation increases garbage collection overhead. Reuse objects that are expensive to create, and avoid needless temporary objects. For instance, instead of concatenating Strings with `+` inside a loop, which creates a new `String` on every iteration, build the result with a single `StringBuilder`.

- Prefer Primitive Data Types: Primitive types like `int` and `long` are more efficient than their wrapper classes (`Integer`, `Long`) because they avoid per-object memory overhead. Choose them when possible.

- Avoid Unnecessary Autoboxing and Unboxing: Autoboxing (converting primitives to wrapper objects) and unboxing (converting wrapper objects to primitives) can incur performance penalties, for example when a `Long` accumulator is updated in a tight loop. Use primitives directly to avoid these conversions.

- Optimize Data Structures: Select the most appropriate data structures for your data. For example, use `HashMap` for fast lookups, `ArrayList` for indexed and sequential access, and `TreeSet` for sorted data.

- Utilize Parallel Processing: Leverage Java’s concurrency features, such as threads and executors, to parallelize independent operations. This can significantly improve performance for data-intensive tasks.
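The first three tips can be seen in a small sketch: summing into a primitive `long` avoids re-boxing a `Long` on every iteration, and a single `StringBuilder` avoids allocating a new `String` for each concatenation in a loop. The class and method names are illustrative; actual speedups depend on the JVM and the workload, so measure with a profiler or benchmark harness rather than assuming.

```java
class AllocationSketch {

    // Boxed accumulator: each += unboxes, adds, and re-boxes, which can allocate
    // a new Long (only values from -128 to 127 are cached).
    static Long sumBoxed(int n) {
        Long total = 0L;
        for (int i = 0; i < n; i++) {
            total += i;
        }
        return total;
    }

    // Primitive accumulator: no per-iteration object allocation.
    static long sumPrimitive(int n) {
        long total = 0L;
        for (int i = 0; i < n; i++) {
            total += i;
        }
        return total;
    }

    // One StringBuilder reused across the loop instead of repeated String concatenation.
    static String joinIds(int[] ids) {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < ids.length; i++) {
            if (i > 0) {
                sb.append(',');
            }
            sb.append(ids[i]);
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.println(sumBoxed(1_000) == sumPrimitive(1_000)); // prints true
        System.out.println(joinIds(new int[] {7, 8, 9}));           // prints 7,8,9
    }
}
```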

Handling Large Datasets

Big data applications often involve processing massive amounts of data. Here are some strategies for managing large datasets effectively:

- Data Partitioning: Divide your data into smaller, manageable chunks. This allows you to process data in parallel and reduces memory pressure. Many big data frameworks, such as Apache Spark, support data partitioning.

- Data Serialization: Choose an efficient serialization format to reduce the size of your data and speed up data transfer. Popular options include Apache Avro, Google Protocol Buffers, and Kryo.

- Data Compression: Compress your data to reduce storage space and network bandwidth usage. Algorithms like GZIP and Snappy are commonly used for data compression in big data systems.

- Distributed Storage: Use distributed storage systems like Hadoop Distributed File System (HDFS) or Apache Cassandra to store and manage large datasets efficiently.
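Compression can be tried with nothing but the JDK, since `java.util.zip` provides GZIP streams. The sketch below compresses a repetitive, log-like payload and decompresses it again to check the round trip. Snappy, Avro, and the other formats listed above need third-party libraries and are not shown; the class name and sample data are hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.zip.GZIPInputStream;
import java.util.zip.GZIPOutputStream;

class GzipSketch {

    static byte[] compress(byte[] input) {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        // try-with-resources closes the GZIP stream, which writes the GZIP trailer.
        try (GZIPOutputStream gzip = new GZIPOutputStream(buffer)) {
            gzip.write(input);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return buffer.toByteArray(); // read only after the stream is closed
    }

    static byte[] decompress(byte[] compressed) {
        try (GZIPInputStream gzip = new GZIPInputStream(new ByteArrayInputStream(compressed))) {
            return gzip.readAllBytes(); // Java 9+
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        String logs = "INFO request served id=42\n".repeat(1_000);
        byte[] raw = logs.getBytes(StandardCharsets.UTF_8);
        byte[] packed = compress(raw);
        System.out.println(raw.length + " bytes -> " + packed.length + " bytes");
        System.out.println(new String(decompress(packed), StandardCharsets.UTF_8).equals(logs)); // prints true
    }
}
```

Highly repetitive data like logs compresses well; GZIP favors compression ratio, while codecs such as Snappy trade ratio for speed.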

Performance Optimization

Performance is a critical factor in big data applications. Here are some techniques for optimizing performance:

- Profiling and Tuning: Use profiling tools to identify performance bottlenecks in your code. Then, optimize the critical areas based on the profiling results.

- Caching: Store frequently accessed data in memory to reduce disk I/O. Consider using caching frameworks like Ehcache or Guava Cache.

- Index Optimization: Create appropriate indexes on your data to speed up queries. Understand the indexing strategies of your database or storage system.

- Efficient Query Optimization: Write efficient queries to minimize the amount of data processed. Use the query optimizers provided by your database or big data framework.
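Before adding a caching library, the caching idea can be sketched with the JDK alone: `LinkedHashMap` in access-order mode plus an overridden `removeEldestEntry` gives a small least-recently-used (LRU) cache. This is a swap-in for illustration, not Ehcache’s or Guava’s API, and it is not thread-safe; the class name and capacity are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Minimal LRU cache: evicts the least recently accessed entry once capacity is exceeded.
class LruCacheSketch<K, V> extends LinkedHashMap<K, V> {

    private final int capacity;

    LruCacheSketch(int capacity) {
        super(16, 0.75f, true); // accessOrder = true: get() moves an entry to the end
        this.capacity = capacity;
    }

    @Override
    protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
        return size() > capacity; // checked after each put; true evicts the eldest entry
    }

    public static void main(String[] args) {
        LruCacheSketch<String, String> cache = new LruCacheSketch<>(2);
        cache.put("user:1", "Alice");
        cache.put("user:2", "Bob");
        cache.get("user:1");          // user:1 is now the most recently used
        cache.put("user:3", "Carol"); // evicts user:2, the least recently used
        System.out.println(cache.keySet()); // prints [user:1, user:3]
    }
}
```

Dedicated libraries add what this sketch lacks, such as concurrency safety, time-based expiry, and statistics.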

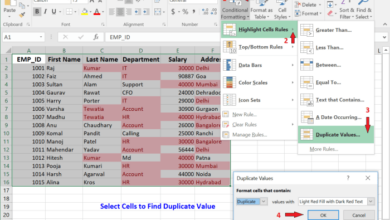

Ensuring Data Integrity

Data integrity is paramount in big data applications. Here are some best practices:

- Data Validation: Implement data validation checks to ensure that data is consistent and conforms to expected formats. This helps prevent errors and inconsistencies in your data pipeline.

- Data Transformation: Transform data into a consistent format before processing. This simplifies data analysis and reduces the risk of errors.

- Data Auditing: Regularly audit your data to identify any discrepancies or inconsistencies. This helps ensure the accuracy and reliability of your data.
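A validation step can be as simple as a function that checks each incoming record and returns the problems it finds, so bad rows can be routed aside instead of silently corrupting results. The sketch below assumes a hypothetical comma-separated record format `id,email,amount`; the rules and names are illustrative only.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

class RecordValidatorSketch {

    // Deliberately loose email check for illustration; real rules depend on the data contract.
    private static final Pattern EMAIL = Pattern.compile("[^@\\s]+@[^@\\s]+\\.[^@\\s]+");

    // Returns a list of problems; an empty list means the record passed validation.
    static List<String> validate(String line) {
        List<String> errors = new ArrayList<>();
        String[] fields = line.split(",", -1); // -1 keeps trailing empty fields
        if (fields.length != 3) {
            errors.add("expected 3 fields but found " + fields.length);
            return errors;
        }
        if (fields[0].isBlank()) {
            errors.add("id is missing");
        }
        if (!EMAIL.matcher(fields[1]).matches()) {
            errors.add("invalid email: " + fields[1]);
        }
        try {
            if (Double.parseDouble(fields[2]) < 0) {
                errors.add("amount must be non-negative");
            }
        } catch (NumberFormatException e) {
            errors.add("amount is not a number: " + fields[2]);
        }
        return errors;
    }

    public static void main(String[] args) {
        System.out.println(validate("42,ann@example.com,19.99")); // prints []
        System.out.println(validate(",not-an-email,-5"));
        // prints [id is missing, invalid email: not-an-email, amount must be non-negative]
    }
}
```

Returning all problems at once, rather than stopping at the first, makes it easier to audit rejected records later.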

Common Pitfalls to Avoid

- Ignoring Performance Considerations: Failing to optimize your code for performance can lead to slow execution times and scalability issues. Profiling and tuning are essential.

- Insufficient Data Validation: Insufficient data validation can introduce errors and inconsistencies into your data pipeline. Implement robust data validation checks.

- Overlooking Data Integrity: Neglecting data integrity can lead to inaccurate results and unreliable insights. Regularly audit and validate your data.

- Ignoring Distributed System Concepts: Big data applications often rely on distributed systems. Understanding concepts like fault tolerance, consistency, and data partitioning is crucial.