F5 Generative AI: Cybersecurity Interview

This interview explores how generative AI is changing the way we approach security. It looks at the potential of generative AI to enhance F5’s cybersecurity solutions and to address the need for advanced threat detection and prevention in today’s digital landscape.

We’ll examine how generative AI can be leveraged to analyze network traffic patterns, identify anomalies, and even create synthetic data for security testing. The discussion also explores the ethical implications of generative AI in cybersecurity, emphasizing the importance of responsible development and deployment to ensure its benefits are realized while mitigating potential risks.

F5 and Generative AI in Cybersecurity

The convergence of F5’s expertise in application security and the transformative power of generative AI promises a new era of cybersecurity. Generative AI, with its ability to create realistic data, learn from vast datasets, and automate complex tasks, presents a unique opportunity to enhance F5’s existing cybersecurity solutions.

Leveraging Generative AI for Enhanced Threat Detection and Prevention

Generative AI can be a game-changer in threat detection and prevention by enabling F5 to analyze massive datasets of security events, identify patterns, and predict potential threats before they materialize. This can be achieved through:

- Generating Synthetic Data: F5 can use generative AI to create synthetic data that mimics real-world attack scenarios, allowing security teams to train and test their defenses against a wide range of threats. This helps improve the accuracy and effectiveness of threat detection systems.

- Building Predictive Models: Generative AI can be used to build predictive models that identify potential threats based on historical data and emerging trends. These models can help F5 proactively detect and prevent attacks before they cause significant damage.

- Automating Threat Intelligence: Generative AI can automate the process of gathering and analyzing threat intelligence, enabling F5 to stay ahead of evolving threats and vulnerabilities.

Automating Security Tasks and Streamlining Workflows

Generative AI can significantly automate repetitive and time-consuming security tasks, freeing up security teams to focus on more strategic initiatives. F5 can leverage generative AI to:

- Automate Security Policy Updates: Generative AI can analyze security logs and events to identify necessary policy updates, reducing the manual effort required for policy management.

- Generate Security Reports: Generative AI can automate the generation of detailed security reports, providing insights into security posture and potential vulnerabilities.

- Create Security Playbooks: Generative AI can assist in creating automated security playbooks, which define the steps to be taken in response to specific security incidents.

Enhancing Existing F5 Cybersecurity Products with Generative AI

F5’s existing cybersecurity products can be significantly enhanced with the integration of generative AI. For example:

- F5 BIG-IP: Generative AI can be integrated into F5 BIG-IP to enhance threat detection and prevention capabilities. This can include identifying and blocking zero-day attacks, improving the accuracy of intrusion detection systems, and automating security policy updates.

- F5 Silverline: Generative AI can be used to improve the effectiveness of F5 Silverline’s managed security services by automating threat intelligence gathering, generating security reports, and creating custom security playbooks for specific customer needs.

- F5 Cloud Services: Generative AI can be integrated into F5’s cloud services to enhance security posture assessment, identify potential vulnerabilities, and automate security remediation tasks.

Generative AI for Network Security

Generative AI is revolutionizing network security by providing innovative ways to detect and prevent threats. By leveraging the power of machine learning, generative AI can analyze vast amounts of network data, identify patterns, and predict potential security breaches. This technology empowers security teams to stay ahead of emerging threats and enhance overall network security posture.

Analyzing Network Traffic Patterns

Generative AI can analyze network traffic patterns and identify anomalies that might indicate malicious activity. By learning from historical network data, generative AI models can create a baseline of normal network behavior. Any deviation from this baseline can trigger an alert, prompting security teams to investigate potential threats.

For example, generative AI can detect unusual traffic spikes, unexpected communication patterns, or unusual data transfers that might indicate a denial-of-service attack or data exfiltration attempt.
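The baseline-and-deviation idea can be illustrated with a minimal sketch. It uses a plain statistical baseline (mean and standard deviation with a z-score threshold) as a stand-in for a learned model; it is not a generative model and not an F5 feature. The function names, sample counts, and threshold are assumptions made for illustration.

```python
from statistics import mean, stdev

def build_baseline(history):
    """Summarize historical per-minute request counts as (mean, std dev)."""
    return mean(history), stdev(history)

def find_anomalies(baseline, observed, threshold=3.0):
    """Return (index, value) pairs whose z-score exceeds the threshold."""
    mu, sigma = baseline
    if sigma == 0:
        # Flat baseline: any deviation at all is treated as anomalous.
        return [(i, v) for i, v in enumerate(observed) if v != mu]
    return [
        (i, v) for i, v in enumerate(observed)
        if abs(v - mu) / sigma > threshold
    ]

# Hypothetical requests-per-minute samples representing "normal" traffic.
history = [100, 104, 98, 101, 97, 103, 99, 102]
baseline = build_baseline(history)

# New observations: the third value is a sudden traffic spike.
alerts = find_anomalies(baseline, [101, 99, 480, 100])
print(alerts)
```

In practice the baseline would be learned from far more data and more features (ports, destinations, byte counts), but the alerting logic keeps the same shape: model normal behavior, then flag what deviates from it.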

Creating Synthetic Network Data for Security Testing

Generative AI can create synthetic network data that mimics real-world network traffic. This synthetic data can be used to test security controls and identify vulnerabilities without impacting live systems. For example, generative AI can create realistic attack scenarios to test the effectiveness of intrusion detection systems (IDS) and firewalls.

This approach allows security teams to identify and address vulnerabilities before they are exploited by real attackers.
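As a rough sketch of this workflow, the example below builds labeled synthetic flow records and runs a toy detector against them. A seeded random generator stands in for a generative model here, and the `Flow` record, the IP addresses, and the `detect_port_scan` control are all hypothetical names chosen for illustration.

```python
import random
from dataclasses import dataclass

@dataclass
class Flow:
    src_ip: str
    dst_port: int
    bytes_sent: int
    label: str  # "benign" or "port_scan" (ground truth for testing)

def synth_flows(n_benign, n_scan, seed=0):
    """Generate benign web flows plus one simulated port scan."""
    rng = random.Random(seed)  # seeded so test runs are reproducible
    flows = []
    for _ in range(n_benign):
        flows.append(Flow(
            src_ip=f"10.0.0.{rng.randint(2, 254)}",
            dst_port=rng.choice([80, 443]),
            bytes_sent=rng.randint(500, 50_000),
            label="benign",
        ))
    scanner = "10.0.1.99"  # hypothetical attacker address
    for port in rng.sample(range(1, 1025), n_scan):
        flows.append(Flow(scanner, port, rng.randint(40, 80), "port_scan"))
    rng.shuffle(flows)
    return flows

def detect_port_scan(flows, max_ports=20):
    """Toy control under test: flag sources touching many distinct ports."""
    ports = {}
    for f in flows:
        ports.setdefault(f.src_ip, set()).add(f.dst_port)
    return {ip for ip, seen in ports.items() if len(seen) > max_ports}

flows = synth_flows(n_benign=200, n_scan=50)
flagged = detect_port_scan(flows)
print(flagged)
```

Because every synthetic record carries its ground-truth label, the detector's hits and misses can be scored without touching live traffic.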

F5 Network Security Solutions Enhanced with Generative AI

F5’s network security solutions can be enhanced with generative AI to provide more comprehensive and intelligent security capabilities. For example, F5’s BIG-IP platform can be integrated with generative AI models to enhance threat detection, anomaly detection, and security incident response.

By leveraging generative AI, F5 can provide more accurate and timely threat intelligence, enabling security teams to make faster and more informed decisions.

Ethical Considerations of Generative AI in Cybersecurity

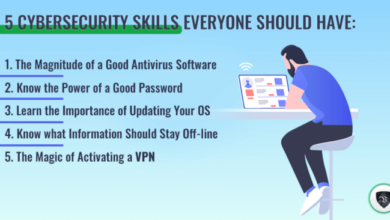

The rapid advancement of generative AI has ushered in a new era of possibilities in cybersecurity. While generative AI holds immense potential for enhancing security measures, its application also raises significant ethical concerns. It is crucial to navigate this landscape with careful consideration, ensuring that the benefits of generative AI are realized while mitigating potential risks and ensuring responsible development and deployment.

Potential Risks of Misusing Generative AI in Security

The misuse of generative AI in cybersecurity poses serious risks, potentially leading to the creation of sophisticated and highly targeted attacks. The potential for misuse stems from the ability of generative AI to create realistic and convincing content, including:

- Phishing emails and websites: Generative AI can create highly persuasive phishing emails and websites that mimic legitimate sources, making it difficult for users to distinguish between real and fake communications. This can lead to increased susceptibility to social engineering attacks and data breaches.

- Malicious code generation: Generative AI can be used to generate sophisticated malware, including zero-day exploits, that can evade traditional security measures. This can create significant challenges for security professionals, as they may struggle to detect and respond to such threats effectively.

- Deepfakes and disinformation campaigns: Generative AI can be used to create deepfakes (realistic manipulated video and audio recordings) for malicious purposes, such as spreading misinformation or discrediting individuals. This can have serious consequences for individuals, organizations, and society as a whole.

Importance of Responsible Development and Deployment

Responsible development and deployment of generative AI in cybersecurity are paramount to mitigate ethical concerns and ensure its safe and effective use. This involves:

- Transparency and explainability: It is essential to understand how generative AI models work and the factors influencing their decisions. Transparency and explainability help build trust and ensure accountability. This includes providing clear documentation of model training data, algorithms used, and decision-making processes.

- Bias mitigation: Generative AI models can inherit biases from the training data, leading to unfair or discriminatory outcomes. It is crucial to address bias in training data and model development to ensure equitable and ethical application of generative AI in cybersecurity.

- Security and robustness: Generative AI models must be secure and robust against adversarial attacks. This involves implementing security measures to protect models from manipulation and ensuring their resilience against attacks that aim to compromise their integrity or functionality.

- Privacy and data protection: Generative AI models may process sensitive data, raising privacy concerns. It is essential to implement robust data protection measures to safeguard user privacy and comply with relevant regulations.

Recommendations for Mitigating Ethical Concerns

To address the ethical challenges posed by generative AI in cybersecurity, it is crucial to adopt a proactive approach that emphasizes responsible development, deployment, and use. This includes:

- Establishing ethical guidelines and frameworks: Developing clear ethical guidelines and frameworks for the use of generative AI in cybersecurity can provide a foundation for responsible development and deployment. These guidelines should address issues such as data privacy, bias, transparency, and accountability.

- Promoting collaboration and knowledge sharing: Fostering collaboration and knowledge sharing among researchers, developers, and security professionals can help identify and address ethical challenges related to generative AI in cybersecurity. This includes sharing best practices, research findings, and lessons learned.

- Investing in research and development: Continuous investment in research and development is crucial to advance the understanding of generative AI and develop solutions to mitigate ethical concerns. This includes research on bias detection and mitigation, explainability, and adversarial robustness.

- Raising public awareness: Educating the public about the ethical implications of generative AI in cybersecurity is essential to foster informed decision-making and promote responsible use. This includes raising awareness about the potential risks and benefits of generative AI, as well as the importance of ethical considerations.

Future of Generative AI in Cybersecurity

The application of generative AI in cybersecurity is still in its nascent stages, but its potential is vast and promises to revolutionize how we approach security challenges. The future holds exciting possibilities for generative AI to reshape the cybersecurity landscape, enabling us to anticipate and counter evolving threats more effectively.

Generative AI for Proactive Threat Detection

Generative AI can be leveraged to proactively identify potential threats before they materialize. By analyzing massive datasets of past attacks, security logs, and network traffic, generative AI models can learn patterns and anomalies indicative of malicious activity. This allows for the development of predictive models that can anticipate future attacks, enabling security teams to take preemptive measures and strengthen defenses.

Generative AI can be used to create synthetic attack scenarios, simulating real-world attacks to test and improve security defenses. This allows organizations to identify vulnerabilities and weaknesses in their systems before actual attacks occur.

Generative AI for Automated Security Response

Generative AI can automate security responses, allowing for faster and more efficient threat mitigation. By analyzing attack patterns and security policies, generative AI models can generate customized security responses, such as blocking malicious IP addresses, isolating infected systems, and applying security patches.

This automation can significantly reduce the time required to respond to threats, minimizing the impact of attacks.
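One way to picture the response step is a playbook that maps an alert type to an ordered list of actions. The sketch below only produces a dry-run plan; the alert kinds, action names, and `PLAYBOOK` table are assumptions for illustration and do not correspond to any F5 API. A model-generated playbook would fill the same role as the static table.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Alert:
    kind: str       # e.g. "brute_force", "malware_beacon"
    source_ip: str  # remote address associated with the alert
    host: str       # internal system affected

# Hypothetical playbook: alert kind -> ordered response actions.
PLAYBOOK = {
    "brute_force": ["block_ip"],
    "malware_beacon": ["isolate_host", "block_ip"],
    "unpatched_cve": ["schedule_patch"],
}

def plan_response(alert):
    """Return a dry-run list of (action, target) pairs for an alert."""
    # Unknown alert kinds fall back to a human analyst rather than guessing.
    actions = PLAYBOOK.get(alert.kind, ["escalate_to_analyst"])
    plan = []
    for action in actions:
        target = alert.source_ip if action == "block_ip" else alert.host
        plan.append((action, target))
    return plan

plan = plan_response(Alert("malware_beacon", "203.0.113.7", "web-01"))
print(plan)
```

Keeping the output as a plan rather than executing it directly leaves room for a review or approval step before any blocking or isolation takes effect.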

Generative AI for Personalized Security

Generative AI can be used to create personalized security solutions tailored to the specific needs of individual users and organizations. By analyzing user behavior, network traffic, and security logs, generative AI models can identify vulnerabilities and risks unique to each user or organization.

This allows for the development of customized security policies and controls that provide optimal protection.

Generative AI for Cybersecurity Education and Training

Generative AI can play a crucial role in cybersecurity education and training. By generating realistic attack scenarios, it can power interactive training modules that simulate real-world incidents. This gives security professionals a safe, controlled environment in which to practice, building the practical skills they need to identify and respond to real-world threats.

Generative AI for Threat Intelligence

Generative AI can be used to enhance threat intelligence by analyzing vast amounts of data from various sources, including social media, dark web forums, and security blogs. This allows for the identification of emerging threats and the development of comprehensive threat intelligence reports.

By understanding the latest attack techniques and tactics, organizations can proactively mitigate risks and improve their overall security posture.