Best Free HR Software: Streamline Your Business

Choosing the best free HR software is an important decision for any business. In today’s competitive business landscape, finding the right HR software can be a game-changer, especially for small and medium-sized enterprises (SMEs).

With the advent of free HR software solutions, businesses now have access to powerful tools that can simplify HR processes, improve efficiency, and enhance employee satisfaction, all without breaking the bank.

Free HR software offers a plethora of benefits, including streamlined employee onboarding, efficient time and attendance tracking, and performance management tools. Depending on the plan, these solutions can also help businesses manage payroll, track employee benefits, and stay compliant with labor laws. The best free options pair a user-friendly interface with robust security features and responsive customer support, which makes them a valuable asset for any growing business.

Free HR Software Options

Finding the right HR software can be a challenge, especially for small businesses and startups with limited budgets. Fortunately, several excellent free HR software options are available, offering essential features without breaking the bank. This article will explore some of the best free HR software options, highlighting their key features, functionalities, and pros and cons.

Free HR software can be a valuable resource for businesses of all sizes. It provides essential tools for managing employee information, tracking time and attendance, and automating various HR tasks. However, it’s crucial to understand that “free” often comes with limitations.

Some free HR software options may have restricted features, limited storage space, or a cap on the number of users. Here are some of the best free HR software options available:

- Zoho People: Zoho People is a comprehensive HR software solution that offers a free plan for up to 10 users. It provides features like employee onboarding, performance management, time and attendance tracking, and payroll integration.

- Pros: Zoho People is user-friendly, offers a wide range of features, and has excellent customer support.

- Cons: The free plan has limited storage space and features.

- OrangeHRM: OrangeHRM is a popular open-source HR software that offers a free plan for up to 50 users. It provides core HR functionalities like employee management, recruitment, and performance management.

- Pros: OrangeHRM is highly customizable and offers a wide range of integrations.

- Cons: It can be more complex to set up and manage compared to other free HR software options.

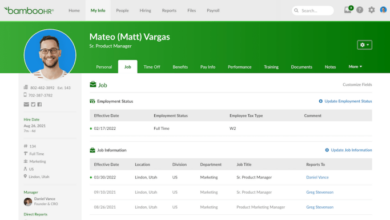

- BambooHR: BambooHR is a cloud-based HR software that offers a free trial period. While not entirely free, it provides a comprehensive suite of HR tools, including employee onboarding, performance reviews, and benefits administration.

- Pros: BambooHR is known for its user-friendly interface and excellent customer support.

- Cons: The free trial period is limited, and the paid plans can be expensive.

- TimeCamp: TimeCamp is a time tracking tool with a limited free plan for up to two users. It provides features like time tracking, project management, and reporting.

- Pros: TimeCamp is a simple and easy-to-use time tracking tool that integrates with popular project management software.

- Cons: The free plan has limited features and storage space.

- Workday: Workday is a cloud-based HR software that offers a free trial period. Similar to BambooHR, Workday provides a comprehensive suite of HR tools, including talent management, payroll, and benefits administration.

- Pros: Workday is highly scalable and offers advanced analytics and reporting features.

- Cons: The free trial period is limited, and the paid plans can be expensive.

Choosing the Right Free HR Software

When choosing free HR software, consider your business needs, the number of employees, and the features you require. Evaluate the pros and cons of each option and select the software that best meets your needs.

Choosing the right free HR software for your business can be a daunting task. With so many options available, it can be difficult to know where to start. This guide will help you navigate the process and select the best free HR software for your needs.

Factors to Consider When Choosing Free HR Software

Several factors need to be considered when selecting free HR software. It is important to evaluate your business needs, the features offered by the software, and the level of support provided.

- Business Size and Industry: The size and industry of your business will influence the features you need in HR software. Small businesses may only need basic features like time tracking and payroll, while larger businesses may require more advanced features like performance management and employee onboarding.

- Features: Consider the features that are essential for your business. Some common features include time tracking, payroll, employee onboarding, performance management, and benefits administration. Some free HR software options may offer limited features, while others may offer a comprehensive suite of tools.

- Ease of Use: The software should be easy to use and navigate. Look for a user-friendly interface that is intuitive and easy to understand.

- Customer Support: Free HR software options may offer varying levels of customer support. Some may offer email or phone support, while others may only offer online resources.

- Security and Privacy: It is essential to choose software that takes security and privacy seriously. Look for software that is compliant with industry standards and offers robust security measures.

- Integration: If you use other business applications, consider whether the HR software integrates with those applications. Integration can streamline processes and save time.

Comparing Free HR Software Options

Once you have identified your business needs, you can start comparing different options. The following table outlines some popular HR software options with free plans or free trials, along with their key features, pricing, and target audience.

| Software Name | Key Features | Pricing | Target Audience |
|---|---|---|---|
| Zoho People | Employee onboarding, performance management, time tracking, payroll, benefits administration | Free plan available with limited features | Small to medium-sized businesses |
| OrangeHRM | Employee onboarding, performance management, time tracking, payroll, recruitment | Free plan available with limited features | Small to medium-sized businesses |
| BambooHR | Employee onboarding, performance management, time tracking, payroll, benefits administration | Free trial available, paid plans start at $45 per month | Small to medium-sized businesses |
| Workday | Employee onboarding, performance management, time tracking, payroll, benefits administration | Free trial available, pricing by custom quote | Medium to large businesses |

Tips for Choosing the Right Free HR Software

Here are some additional tips for choosing the right free HR software:

- Read Reviews: Read reviews from other businesses that have used the software to get an idea of its strengths and weaknesses.

- Try Before You Commit: Free plans can be tested directly, and many vendors also offer a free trial of their paid plans. Either way, test the software with your own data and workflows to see if it meets your needs.

- Consider Your Future Needs: As your business grows, you may need to upgrade to a paid plan. Consider the scalability of the free HR software option you choose.

Integrating Free HR Software

Integrating free HR software with your existing business systems can streamline your HR processes and improve efficiency. By connecting your HR software with other applications, you can automate tasks, share data seamlessly, and gain valuable insights into your workforce.

Connecting with Existing Systems

To effectively integrate free HR software, it’s crucial to understand how it connects with your existing systems. Most free HR software offers various integration options, including:

- API Integration: This method allows you to connect your HR software to other applications through a set of rules and specifications. APIs enable real-time data exchange and automation, facilitating seamless data flow between systems.

- Import/Export Functionality: Some free HR software provides import/export features, allowing you to transfer data between applications in a structured format like CSV or Excel. This method is suitable for transferring data periodically, but it may not offer real-time updates.

- Third-Party Integrations: Many free HR software platforms offer integrations with popular business applications through partnerships with third-party providers. These integrations often streamline data exchange and automate tasks, enhancing efficiency.
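To make the import/export option more concrete, here is a minimal Python sketch of a CSV round trip between two systems. The column names in `FIELD_MAP` and the helper functions are hypothetical; real HR and payroll products define their own export formats, so treat this as an illustration of field mapping rather than any vendor's actual format.

```python
import csv
import io

# Hypothetical mapping: HR system field -> column name expected by the other system.
FIELD_MAP = {
    "employee_id": "EmpID",
    "full_name": "Name",
    "department": "Dept",
}


def export_employees(records: list[dict]) -> str:
    """Write HR records to CSV text using the target system's column names."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(FIELD_MAP.values()))
    writer.writeheader()
    for record in records:
        # Rename each mapped field; fields not in FIELD_MAP are not exported.
        writer.writerow({target: record[source] for source, target in FIELD_MAP.items()})
    return buffer.getvalue()


def import_employees(csv_text: str) -> list[dict]:
    """Read CSV text back into HR-style records using the reverse mapping."""
    reverse = {target: source for source, target in FIELD_MAP.items()}
    reader = csv.DictReader(io.StringIO(csv_text))
    return [{reverse[column]: value for column, value in row.items()} for row in reader]


records = [{"employee_id": "E001", "full_name": "Ana Ruiz", "department": "Sales", "salary": "52000"}]
csv_text = export_employees(records)
print(import_employees(csv_text))
```

Fields that are not in the mapping (such as the salary field here) are simply left out of the export, which also keeps sensitive data from leaving the HR system unnecessarily.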

Data Migration and Integration

When integrating free HR software, data migration is a crucial step. To ensure a smooth transition, consider these tips:

- Plan and Prepare: Carefully plan your data migration strategy, defining the scope, data sources, and target systems. Identify potential data inconsistencies and address them beforehand.

- Clean and Validate Data: Before migrating data, ensure its accuracy and consistency. Clean up any errors, duplicates, or outdated information to avoid issues during integration.

- Test Thoroughly: Conduct thorough testing before fully implementing the integration. Migrate a small sample of data first to identify and resolve any potential problems.

- Document the Process: Document the entire integration process, including data mapping, transformation rules, and troubleshooting steps. This documentation will be valuable for future reference and maintenance.
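The cleaning and validation steps above can be sketched in a few lines of Python. This assumes a hypothetical schema in which every record needs an `employee_id`, `email`, and `start_date`, and it uses the email address as the de-duplication key (keeping the first occurrence). The email check is deliberately rough; adjust both the schema and the rules to match your own data.

```python
import re

# Hypothetical schema and a deliberately rough email check (not full RFC 5322).
REQUIRED_FIELDS = ("employee_id", "email", "start_date")
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def clean_records(records):
    """Normalize, de-duplicate, and validate records before migration.

    Returns (clean, rejected); rejected holds (original_record, reason) pairs.
    """
    clean, rejected, seen_emails = [], [], set()
    for record in records:
        # Normalize: strip whitespace, turn None into "", lowercase the email.
        row = {key: "" if value is None else str(value).strip() for key, value in record.items()}
        row["email"] = row.get("email", "").lower()
        missing = [field for field in REQUIRED_FIELDS if not row.get(field)]
        if missing:
            rejected.append((record, "missing " + ", ".join(missing)))
        elif not EMAIL_PATTERN.match(row["email"]):
            rejected.append((record, "invalid email"))
        elif row["email"] in seen_emails:
            rejected.append((record, "duplicate email"))
        else:
            seen_emails.add(row["email"])
            clean.append(row)
    return clean, rejected
```

Running it on a small sample first shows how many records would be rejected and why, before you migrate everything.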

Optimizing Free HR Software

To maximize the benefits of free HR software, follow these best practices:

- Customize Settings: Configure the software to align with your specific HR needs and processes. Adjust user roles, permissions, and workflows to optimize efficiency.

- Train Users Effectively: Provide comprehensive training to all users on the software’s features and functionalities. Encourage regular practice to ensure everyone is comfortable using the system.

- Monitor Performance and Feedback: Regularly monitor the software’s performance and gather user feedback. Identify areas for improvement and implement necessary adjustments to enhance usability.

- Leverage Reporting and Analytics: Utilize the software’s reporting and analytics features to gain insights into your workforce data. Track key metrics, identify trends, and make informed decisions.
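As an example of a key metric, employee turnover is often reported as separations divided by average headcount for the period. The sketch below uses one common definition (the average of start and end headcount); your HR software may calculate it differently, and the function names are illustrative.

```python
def average_headcount(start_headcount: int, end_headcount: int) -> float:
    """Simple average of headcount at the start and end of the period."""
    return (start_headcount + end_headcount) / 2


def turnover_rate(separations: int, start_headcount: int, end_headcount: int) -> float:
    """Turnover rate in percent: separations / average headcount * 100."""
    avg = average_headcount(start_headcount, end_headcount)
    if avg <= 0:
        raise ValueError("average headcount must be positive")
    return separations / avg * 100


print(f"{turnover_rate(3, 40, 44):.2f}%")  # prints 7.14%
```

For instance, 3 separations with 40 employees at the start of a quarter and 44 at the end gives an average headcount of 42 and a turnover rate of about 7.14%.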

Security and Privacy Considerations

When choosing free HR software, it’s crucial to prioritize data security and privacy. Employee information is highly sensitive, and a breach can have serious consequences for your organization. Therefore, understanding the security measures implemented by the free HR software provider is essential.

Data Security Measures

Data security is paramount when handling sensitive employee information. Free HR software providers should implement robust security measures to protect this data from unauthorized access, use, disclosure, alteration, or destruction.

- Data Encryption: All data should be encrypted both in transit and at rest. This means that data is scrambled during transmission and storage, making it unreadable to unauthorized individuals.

- Access Control: Implement strong access control mechanisms to limit access to sensitive data to authorized personnel. This includes assigning specific roles and permissions based on job responsibilities.

- Regular Security Audits: Conduct regular security audits to identify vulnerabilities and ensure that security measures are effective. These audits should be conducted by independent third parties to ensure objectivity.

- Two-Factor Authentication (2FA): Implement 2FA to add an extra layer of security to user accounts. This requires users to provide two forms of authentication, such as a password and a code sent to their mobile device, before accessing the system.
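Many authenticator apps generate their codes with the time-based one-time password (TOTP) algorithm from RFC 6238, which builds on HOTP from RFC 4226. The sketch below illustrates the idea using only the Python standard library. The function names and the one-step drift window are assumptions, not any HR product's API, and a production system should rely on a vetted library and store shared secrets securely.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation: low 4 bits pick the offset
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the current time step."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_totp(secret: bytes, submitted: str, at: Optional[float] = None,
                step: int = 30, digits: int = 6, window: int = 1) -> bool:
    """Accept a code from the current step or up to `window` steps either side (clock drift)."""
    now = time.time() if at is None else at
    return any(
        # compare_digest avoids leaking timing information about the expected code
        hmac.compare_digest(totp(secret, now + drift * step, step, digits), submitted)
        for drift in range(-window, window + 1)
    )
```

With the RFC test secret `b"12345678901234567890"`, `hotp(secret, 0)` returns `"755224"`, which matches the RFC 4226 test vectors. The code length is fixed by the server rather than taken from the submitted code, so users cannot weaken the check by sending a shorter one.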

Privacy Considerations

Employee privacy is a crucial aspect of data security. Free HR software providers should adhere to data privacy regulations and ensure that employee data is handled responsibly.

- Data Minimization: Collect only the necessary employee data and avoid collecting excessive or irrelevant information. This helps minimize the risk of data breaches and ensures compliance with data privacy regulations.

- Data Retention Policies: Establish clear data retention policies that specify how long employee data is stored and how it is disposed of after the retention period. This ensures that data is not stored indefinitely and minimizes the risk of unauthorized access or use.

- Transparency and Consent: Be transparent with employees about how their data is collected, used, and shared. Obtain explicit consent from employees before collecting or using their personal information.

- Data Subject Rights: Ensure that employees can exercise their rights to access, rectify, erase, and restrict the processing of their personal data, as provided by data privacy regulations such as the General Data Protection Regulation (GDPR).
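Data minimization and retention policies can also be enforced in code. The sketch below assumes a hypothetical allowlist of fields and a seven-year retention period purely for illustration; the right fields and period depend on your jurisdiction and internal policies. It only flags records for disposal; the actual deletion would happen in your HR system.

```python
from datetime import date, timedelta

# Hypothetical allowlist: only the fields this HR process actually needs.
ALLOWED_FIELDS = {"employee_id", "name", "department", "start_date", "end_date"}
# Hypothetical retention period; the real one depends on local law and policy.
RETENTION = timedelta(days=365 * 7)


def minimize(record: dict) -> dict:
    """Keep only allowlisted fields (data minimization)."""
    return {key: value for key, value in record.items() if key in ALLOWED_FIELDS}


def due_for_deletion(records, today: date) -> list:
    """Return IDs of former employees whose retention period has elapsed."""
    return [
        record["employee_id"]
        for record in records
        if record.get("end_date") is not None and today - record["end_date"] > RETENTION
    ]
```

Current employees (no `end_date`) are never flagged, so the retention clock only starts once someone leaves.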

Potential Risks of Free HR Software

While free HR software offers cost savings, it’s essential to be aware of potential risks associated with using these platforms.

- Limited Security Features: Free HR software providers may offer fewer security features compared to paid solutions. This could compromise the security of employee data, especially if the platform lacks encryption, access controls, or regular security audits.

- Data Ownership and Control: Free HR software providers may have access to your employee data and may use it for their own purposes. This could raise privacy concerns, as you may not have complete control over your data.

- Data Backup and Recovery: Free HR software providers may not offer robust data backup and recovery services. This could result in data loss in the event of a system failure or cyberattack.

- Limited Support: Free HR software providers may offer limited customer support or technical assistance. This could make it difficult to resolve issues or obtain help when needed.

Future Trends in Free HR Software

The world of free HR software is constantly evolving, driven by technological advancements and changing workforce needs. This dynamic landscape presents exciting possibilities for businesses seeking efficient and cost-effective HR solutions.

Integration with Other Business Applications

Free HR software is increasingly integrating with other business applications, such as accounting, CRM, and project management tools. This integration creates a seamless workflow and eliminates the need for manual data entry, streamlining processes and improving data accuracy. For instance, integrating free HR software with an accounting system can automate payroll and expense tracking, saving time and reducing errors.
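To illustrate the payroll example, here is a minimal sketch of how approved timesheet hours from an HR tool might be summarized into gross pay figures for an accounting export. The tuple format, the hourly rates, and the function name are hypothetical, and real payroll involves overtime rules, taxes, and deductions that this sketch ignores. `Decimal` is used instead of floats to avoid rounding surprises with currency.

```python
from collections import defaultdict
from decimal import Decimal, ROUND_HALF_UP


def payroll_summary(timesheets, hourly_rates):
    """Sum approved hours per employee and compute gross pay.

    timesheets: iterable of (employee_id, hours, approved) tuples (hypothetical format).
    hourly_rates: employee_id -> Decimal hourly rate (must cover every approved employee).
    Returns employee_id -> (total_hours, gross_pay rounded to cents).
    """
    hours = defaultdict(Decimal)
    for employee_id, worked, approved in timesheets:
        if approved:  # only approved entries flow through to payroll
            hours[employee_id] += Decimal(str(worked))
    return {
        employee_id: (
            total,
            (total * hourly_rates[employee_id]).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP),
        )
        for employee_id, total in hours.items()
    }
```

Because the summary is computed from data already in the HR tool, the accounting system receives totals instead of someone re-keying individual timesheets.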

Artificial Intelligence and Automation

AI and automation are transforming the HR landscape, making free HR software more intelligent and efficient. AI-powered features like chatbots for employee queries, automated scheduling, and personalized learning recommendations are becoming increasingly common. For example, AI-powered recruitment tools can analyze resumes and identify top candidates, automating the initial screening process.

Data Analytics and Insights

Free HR software is leveraging data analytics to provide valuable insights into workforce trends and employee performance. Data-driven insights can help businesses make informed decisions regarding recruitment, training, and employee engagement. For instance, analyzing employee feedback data can identify areas for improvement in employee satisfaction and engagement.
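Analyzing employee feedback can start as simply as averaging survey scores per topic and flagging the weakest areas. The sketch below assumes a hypothetical 1 to 5 rating scale and a 3.5 threshold; both are illustrative choices rather than a standard.

```python
from collections import defaultdict
from statistics import mean


def lowest_scoring_areas(responses, threshold=3.5):
    """Average survey scores per topic and return topics below the threshold.

    responses: iterable of (topic, score) pairs on an assumed 1-5 scale.
    Returns (topic, average) pairs sorted from lowest to highest average.
    """
    scores = defaultdict(list)
    for topic, score in responses:
        scores[topic].append(score)
    averages = {topic: mean(values) for topic, values in scores.items()}
    return sorted(
        ((topic, avg) for topic, avg in averages.items() if avg < threshold),
        key=lambda item: item[1],
    )


responses = [("workload", 2), ("workload", 3), ("management", 4), ("management", 5), ("growth", 3), ("growth", 4)]
print(lowest_scoring_areas(responses))  # prints [('workload', 2.5)]
```

Topics that come out lowest are natural starting points for follow-up conversations or targeted engagement initiatives.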

Mobile-First Approach

With the rise of remote work and mobile devices, free HR software is adopting a mobile-first approach. This means providing user-friendly mobile apps that allow employees to access HR information and complete tasks from anywhere, anytime. Mobile-first HR software enhances employee experience and facilitates communication and collaboration.

Focus on Employee Experience

Free HR software is increasingly focusing on improving the employee experience. Features like self-service portals, personalized onboarding, and gamified learning platforms are designed to engage employees and enhance their overall experience. For example, a gamified learning platform can make training more interactive and enjoyable, leading to better knowledge retention.

Enhanced Security and Privacy

As businesses handle sensitive employee data, security and privacy are paramount. Free HR software providers are implementing robust security measures, such as encryption, multi-factor authentication, and data backups, to protect sensitive information.