AI Caution Risk Statement: Navigating the Future of Artificial Intelligence

AI Caution Risk Statements are becoming increasingly important as artificial intelligence (AI) rapidly advances. These statements serve as a critical tool for organizations to communicate potential risks and ethical considerations associated with AI development and deployment. By identifying potential dangers and outlining responsible practices, these statements aim to foster public trust and encourage the ethical development of AI.

Imagine a world where AI-powered systems make decisions that impact our lives, from healthcare to finance. As we navigate this uncharted territory, it’s essential to have clear guidelines and responsible practices in place. AI Caution Risk Statements provide this framework, ensuring that AI is developed and used ethically and responsibly, for the benefit of all.

Understanding AI Caution and Risk

An AI caution and risk statement is a formal document that outlines the potential risks and limitations associated with the development and deployment of artificial intelligence (AI) systems. These statements are crucial for responsible AI development and adoption, ensuring transparency and accountability.

AI development comes with its own set of risks, and we need to be cautious about its potential impact on society. While technology companies continue to innovate, we must also ensure that these advancements are used responsibly and ethically.

This means addressing concerns about job displacement, privacy violations, and potential biases in AI systems.

The Purpose of AI Caution and Risk Statements

AI caution and risk statements serve several important purposes across various contexts:

- Transparency and Accountability: These statements provide transparency about the potential risks and limitations of AI systems to stakeholders, including users, developers, and policymakers. They help establish accountability by outlining the responsibilities of those involved in AI development and deployment.

- Risk Mitigation: By identifying potential risks early on, these statements facilitate proactive risk mitigation strategies. This can involve implementing safeguards, developing ethical guidelines, and establishing robust monitoring systems.

- Informed Decision-Making: AI caution and risk statements empower stakeholders to make informed decisions about AI adoption. They provide a comprehensive understanding of the potential benefits and drawbacks, enabling responsible and ethical integration of AI into various domains.

- Public Trust and Confidence: By openly addressing potential risks, these statements foster public trust and confidence in AI. They demonstrate that developers and organizations are taking a responsible approach to AI development and deployment.

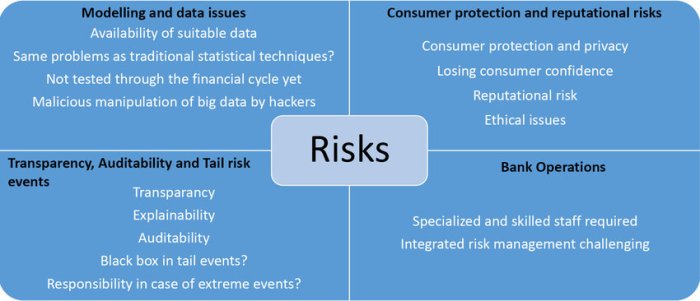

Potential Risks Associated with AI Development and Deployment

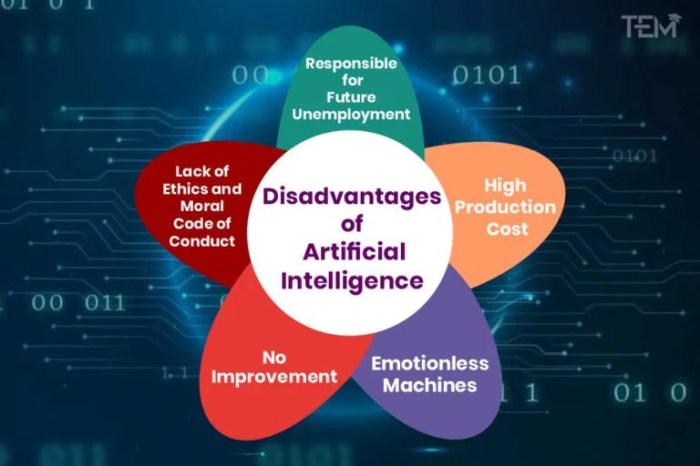

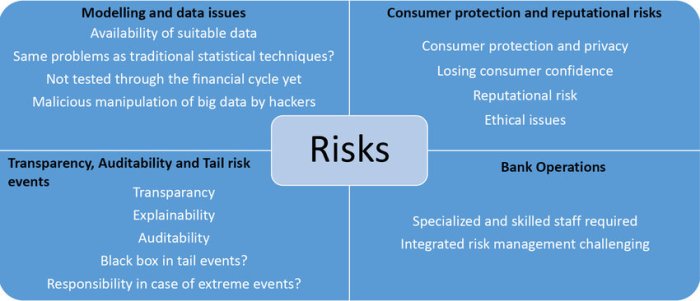

The development and deployment of AI systems pose several potential risks, which are commonly grouped into the following categories:

- Bias and Discrimination: AI algorithms can perpetuate existing biases present in training data, leading to unfair or discriminatory outcomes. For example, a facial recognition system trained on a dataset primarily composed of light-skinned individuals may perform poorly on darker-skinned individuals, potentially leading to inaccurate identification and discriminatory practices.

- Privacy and Security: AI systems often collect and process vast amounts of personal data, raising concerns about privacy violations and security breaches. For instance, AI-powered surveillance systems can track individuals’ movements and activities, potentially leading to misuse or unauthorized access to sensitive information.

- Job Displacement: AI automation has the potential to displace human workers in certain sectors, raising concerns about unemployment and economic inequality. For example, AI-powered chatbots and virtual assistants are already replacing human customer service representatives in some industries.

- Lack of Explainability: AI algorithms can be complex and opaque, making it difficult to understand their decision-making processes. This lack of explainability can lead to distrust and hinder accountability. For instance, a loan approval algorithm might deny a loan application without providing clear reasons, making the decision difficult to contest.

- Misuse and Malicious Intent: AI systems can be misused for malicious purposes, such as creating deepfakes or developing autonomous weapons. For example, AI-generated deepfakes can be used to spread misinformation or damage reputations, while autonomous weapons raise ethical concerns about the potential for unintended consequences and loss of human control.

While AI is a powerful tool, it’s not without its risks. We need to be mindful of the potential for bias and misuse, especially when it comes to sensitive data like customer identities. Understanding trends in customer identity management, such as those outlined in coverage of Okta’s customer identity trends, can help us develop more secure and ethical AI solutions.
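The bias risk in the list above can be made measurable before it is written into a statement. The following is a minimal sketch, not a complete fairness audit: it assumes evaluation results are available as (group, predicted, actual) tuples, and the function names, group labels, and toy data are all hypothetical.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return {group: accuracy} for an iterable of (group, predicted, actual)."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(per_group):
    """Difference between the best and worst per-group accuracy."""
    return max(per_group.values()) - min(per_group.values())


# Hypothetical toy evaluation data: (group label, predicted, actual).
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 0),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 1), ("group_b", 0, 1),
]

per_group = accuracy_by_group(records)
gap = accuracy_gap(per_group)
print(per_group, gap)
```

A large gap between groups is the kind of concrete finding a risk statement can report. Accuracy is only one possible metric; which metric and which threshold are appropriate depends on the system and is left open here.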

Examples of Real-World AI Caution and Risk Statements

Several organizations have published principles and guidelines that address the potential risks associated with AI systems, and regulators have added binding rules:

- Google’s AI Principles: Google’s AI Principles outline a set of ethical guidelines for AI development and deployment, emphasizing fairness, accountability, and responsible innovation.

- Microsoft’s AI Principles: Microsoft’s AI Principles focus on fairness, reliability, privacy, security, and inclusiveness. These principles guide the company’s AI development and deployment practices.

- The Partnership on AI: The Partnership on AI, a non-profit organization with members from industry, academia, and civil society, has published guidelines on AI safety and ethics, advocating for responsible AI development and deployment.

- The European Union’s General Data Protection Regulation (GDPR): The GDPR governs the processing of personal data, including processing by AI systems, and contains provisions on automated decision-making. It requires organizations to ensure transparency and accountability in their use of personal data.

Key Elements of an AI Caution and Risk Statement

An AI caution and risk statement is a crucial document that outlines the potential risks and limitations associated with using AI systems. It acts as a transparent communication tool for stakeholders, including users, developers, and policymakers, to understand the potential implications of AI technology.

We’re all excited about the potential of AI, but it’s important to keep its risks in view. As we push the boundaries of technology, we need to be mindful of its ethical implications, and open reflection from industry leaders can help guide the conversation about responsible AI development.

A comprehensive AI caution and risk statement should include various essential elements to ensure its effectiveness.

Clarity and Transparency

Clarity and transparency are paramount in AI caution and risk statements. They ensure that the information is easily understandable and accessible to a broad audience.

- Plain Language: Use clear and concise language that avoids technical jargon. The statement should be comprehensible to individuals with varying levels of technical expertise.

- Specific Examples: Provide concrete examples to illustrate the potential risks and limitations of the AI system. This helps users understand the practical implications of the technology.

- Avoid Ambiguity: The statement should be unambiguous and avoid vague or misleading language. It should clearly define the scope of the AI system and the risks associated with it.

Accessibility

An AI caution and risk statement should be readily accessible to all stakeholders.

- Publicly Available: The statement should be published on a publicly accessible website or platform. This ensures that anyone can access and review the information.

- Multiple Formats: The statement should be available in multiple formats, such as PDF, HTML, and audio, to cater to different user preferences and accessibility needs.

- Translation: Consider translating the statement into multiple languages to reach a wider audience.

Ethical Considerations

Ethical considerations are crucial in formulating AI caution and risk statements.

- Bias and Fairness: The statement should address potential biases in the AI system and highlight the importance of fairness and inclusivity in AI development and deployment.

- Privacy and Data Security: It should outline the potential risks to user privacy and data security and emphasize the need for responsible data management practices.

- Transparency and Accountability: The statement should promote transparency in AI development and deployment and establish mechanisms for accountability in case of harm or unintended consequences.

Best Practices for Crafting Effective AI Caution and Risk Statements

Crafting an effective AI caution and risk statement requires careful planning and consideration. Here are some best practices:

- Identify the Target Audience: Define the intended audience for the statement and tailor the language and content accordingly.

- Involve Experts: Consult with experts in AI ethics, law, and technology to ensure the statement is comprehensive and accurate.

- Use a Structured Format: Employ a clear and structured format to organize the information and make it easy to navigate.

- Review and Update Regularly: The statement should be reviewed and updated regularly to reflect advancements in AI technology and evolving ethical considerations.
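One way to act on the structured-format and regular-review practices above is to keep the statement as structured data and render the published text from it. The sketch below is illustrative only: the class names, fields, example system, and the 180-day review interval are assumptions, not part of any standard.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from typing import List


@dataclass
class RiskItem:
    category: str      # e.g. "Bias and Fairness"
    description: str   # what could go wrong
    mitigation: str    # what is done about it


@dataclass
class RiskStatement:
    system_name: str
    audience: str
    last_reviewed: date
    risks: List[RiskItem] = field(default_factory=list)
    review_interval_days: int = 180  # assumed interval, adjust as needed

    def is_review_due(self, today: date) -> bool:
        """True once the review interval has elapsed since the last review."""
        return today - self.last_reviewed >= timedelta(days=self.review_interval_days)

    def to_text(self) -> str:
        """Render the statement as plain text, one bullet per risk."""
        lines = [
            f"AI Caution and Risk Statement: {self.system_name}",
            f"Intended audience: {self.audience}",
            f"Last reviewed: {self.last_reviewed.isoformat()}",
            "",
        ]
        for item in self.risks:
            lines.append(
                f"- {item.category}: {item.description} Mitigation: {item.mitigation}"
            )
        return "\n".join(lines)


# Hypothetical example statement.
statement = RiskStatement(
    system_name="Loan Screening Assistant",
    audience="Loan applicants and branch staff",
    last_reviewed=date(2024, 1, 1),
    risks=[
        RiskItem(
            category="Lack of Explainability",
            description="Applicants may not see why an application was declined.",
            mitigation="Each decision is accompanied by the main contributing factors.",
        )
    ],
)

print(statement.to_text())
print("Review due:", statement.is_review_due(date(2024, 7, 1)))
```

Keeping the review date in the data makes an overdue review easy to detect automatically, and the same record can be rendered into the other formats mentioned earlier.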

Applications of AI Caution and Risk Statements

AI caution and risk statements are becoming increasingly important as AI technologies are rapidly integrated into various aspects of our lives. These statements serve as a vital tool for responsible AI development and deployment, ensuring that potential risks are identified and mitigated.

AI Caution and Risk Statements in Different Industries

AI caution and risk statements are applicable across a wide range of industries, each presenting unique challenges and considerations.

- Healthcare: In healthcare, AI systems are used for diagnosis, treatment planning, and drug discovery. Caution and risk statements are crucial to address concerns regarding data privacy, algorithmic bias, and the potential for misdiagnosis.

- Finance: AI is employed in financial institutions for fraud detection, risk assessment, and algorithmic trading. Caution and risk statements highlight the need for transparency, explainability, and robustness in these AI systems to prevent financial instability.

- Transportation: Autonomous vehicles and traffic management systems rely heavily on AI. Caution and risk statements focus on safety concerns, ethical considerations in decision-making, and potential vulnerabilities to cyberattacks.

- Education: AI is being used for personalized learning, automated grading, and educational resource recommendations. Caution and risk statements emphasize the importance of equity, access, and ensuring that AI systems do not perpetuate existing biases in education.

- Law Enforcement: AI is employed in law enforcement for facial recognition, predictive policing, and crime analysis. Caution and risk statements address the potential for bias, privacy violations, and the misuse of AI for discriminatory practices.

Challenges and Future Directions

While AI caution and risk statements hold significant promise for responsible AI development, their implementation faces various challenges. The rapidly evolving nature of AI necessitates continuous adaptation and refinement of these statements, demanding a robust framework for evaluation and ongoing improvement.

Challenges in Developing and Implementing AI Caution and Risk Statements

The development and implementation of AI caution and risk statements are not without their hurdles. These statements often require a deep understanding of the technical intricacies of AI systems, along with a nuanced comprehension of the ethical and societal implications of their use.

- Defining Scope and Boundaries: Accurately defining the scope and boundaries of AI systems is crucial for crafting effective caution and risk statements. The rapid advancements in AI technologies, particularly in areas like machine learning and deep learning, pose challenges in defining the scope of potential risks and impacts.

- Data Bias and Fairness: AI systems are trained on data, and biases present in the data can lead to unfair or discriminatory outcomes. Identifying and mitigating these biases in AI caution and risk statements is essential for ensuring equitable and responsible AI deployment.

- Transparency and Explainability: AI systems, particularly complex ones, can be difficult to understand and interpret. This lack of transparency and explainability poses challenges in effectively communicating risks and limitations to users and stakeholders.

- Evolving AI Landscape: The field of AI is constantly evolving, with new technologies and applications emerging at a rapid pace. This dynamic landscape necessitates ongoing updates and revisions of AI caution and risk statements to remain relevant and effective.

- Collaboration and Coordination: Developing and implementing AI caution and risk statements effectively requires collaboration and coordination among diverse stakeholders, including researchers, developers, policymakers, and users.

Ongoing Updates and Refinement

The dynamic nature of AI necessitates ongoing updates and refinement of AI caution and risk statements. As AI technologies evolve, so too must the associated cautionary measures. This requires a proactive approach to monitoring emerging trends, evaluating the effectiveness of existing statements, and adapting them accordingly.

Future Directions for AI Caution and Risk Statements

Looking ahead, AI caution and risk statements will play an increasingly crucial role in shaping the future of AI. These statements will need to be more comprehensive, adaptable, and integrated into the broader governance framework for AI.

- Role of Regulation and Governance: Regulation and governance play a vital role in ensuring responsible AI development and deployment. AI caution and risk statements should be integrated into regulatory frameworks, providing guidance and standards for developers and users.

- Standardization and Best Practices: Developing standardized frameworks and best practices for AI caution and risk statements will facilitate consistency and promote interoperability across different AI systems and applications.

- AI Ethics and Values: AI caution and risk statements should be grounded in ethical principles and values, ensuring that AI development and deployment align with societal expectations and promote the well-being of individuals and communities.

Evaluating the Effectiveness of AI Caution and Risk Statements

Evaluating the effectiveness of AI caution and risk statements is essential for ensuring their ongoing relevance and impact. A robust framework for evaluation should consider:

- Clarity and Accessibility: The statements should be clear, concise, and accessible to a broad audience, including non-technical users.

- Comprehensiveness and Accuracy: The statements should accurately reflect the potential risks and limitations of AI systems, covering a comprehensive range of considerations.

- Impact and Effectiveness: The statements should be evaluated based on their impact on user behavior, decision-making, and the overall responsible development and deployment of AI.