AI Deepfake Risks: A Threat to APAC Enterprises

The rise of deepfake technology in the Asia-Pacific region (APAC) is causing significant concern, particularly for businesses. Deepfakes are hyperrealistic AI-generated videos, images, or audio that convincingly impersonate real people, and they pose a serious threat to reputation, brand image, and financial stability.

From fabricated news reports to impersonation scams, the potential for damage is immense. The increasing accessibility of deepfake tools, coupled with the region’s rapid digital transformation, has created a perfect storm for malicious actors to exploit.

The impact of deepfakes extends beyond individual harm. Enterprises in APAC face unique vulnerabilities due to the region’s complex regulatory landscape, diverse cultural contexts, and rapidly evolving digital ecosystem. This article will explore the key risks associated with deepfakes, discuss detection and mitigation strategies, and delve into the legal and regulatory implications.

We’ll also examine real-world examples and future trends to understand the evolving threat landscape and offer recommendations for businesses to protect themselves.

The Rise of Deepfakes in APAC

The Asia-Pacific (APAC) region is experiencing a rapid surge in the use of deepfake technology, and both its potential benefits and its risks are becoming increasingly apparent. Deepfakes, synthetic media that depict real people saying or doing things they never said or did, are finding their way into many aspects of life in APAC, from entertainment to politics and even personal relationships.

The rise of AI deepfakes poses serious risks for enterprises across APAC, particularly in areas like reputation management and brand security. Imagine a deepfake video of a CEO endorsing a competitor’s product, or a fabricated statement attributed to a company spokesperson – the damage could be significant.

This is why it’s crucial to stay vigilant and informed about these technologies. Even deepfakes created purely for entertainment show how convincing the technology has become, and that same realism is what makes malicious use so damaging. APAC businesses should therefore prioritize cybersecurity measures against deepfake threats.

Examples of Deepfake Incidents in APAC

Deepfake incidents have been reported across APAC, highlighting the technology’s growing impact and raising concerns about its potential misuse.

- In 2020, a deepfake video of a prominent Indian politician making inflammatory remarks went viral, sparking outrage and raising questions about the potential for deepfakes to be used for political manipulation.

- In 2021, a deepfake video of a popular South Korean K-pop star was used to promote a fraudulent cryptocurrency scheme, highlighting the potential for deepfakes to be used for financial fraud.

- In 2022, a deepfake audio recording of a Chinese businessman was used to extort money from his business partners, demonstrating the potential for deepfakes to be used for blackmail and extortion.

Factors Contributing to the Increasing Adoption of Deepfake Technology in APAC

Several factors contribute to the increasing adoption of deepfake technology in APAC.

- Rapid technological advancements: The rapid advancement of artificial intelligence (AI) and machine learning (ML) technologies has made it easier and cheaper to create realistic deepfakes.

- Growing digital access: Increased internet penetration and smartphone adoption in APAC have made it easier for people to access and share deepfake content.

- Lack of awareness and regulation: Public awareness of deepfakes and their risks remains limited, and regulatory frameworks for addressing deepfakes are still under development in many APAC countries.

- Economic opportunities: Deepfake technology has opened up new economic opportunities for businesses and individuals in APAC, particularly in the entertainment and advertising industries.

Deepfake Risks for Enterprises in APAC

The rise of deepfake technology presents a significant threat to enterprises in the Asia-Pacific (APAC) region. These sophisticated AI-powered tools can create highly realistic fabricated videos and audio recordings, posing a wide range of risks that can damage reputation, brand image, and financial stability.

Reputation and Brand Damage

Deepfakes can be used to create damaging content that portrays individuals or organizations in a negative light. For example, a deepfake video of a CEO making offensive remarks or a fabricated audio recording of a company spokesperson announcing a fraudulent product recall could severely damage the reputation of the enterprise.

This reputational damage can lead to loss of trust among customers, partners, and investors, ultimately impacting brand value and market share.

Financial Stability

Deepfake attacks can directly impact an enterprise’s financial stability. Fabricated financial reports, fraudulent transactions, or even fake stock market announcements can manipulate market sentiment and cause significant financial losses. Additionally, the cost of managing a deepfake crisis, including legal fees, public relations campaigns, and remediation efforts, can be substantial.

Specific Vulnerabilities of APAC Enterprises

APAC enterprises face distinct vulnerabilities to deepfake attacks. The region’s rapid digital transformation and adoption of new technologies have created fertile ground for deepfake creators to exploit. In addition, cultural norms in many APAC markets that emphasize trust in and respect for authority can make deepfakes of senior figures particularly effective at manipulating public opinion.

- Rapid Digital Adoption: The widespread adoption of social media and online communication channels in APAC provides numerous avenues for deepfake dissemination.

- Cultural Context: Deepfakes can exploit cultural sensitivities and trust in authority figures to create highly impactful misinformation campaigns.

- Data Availability: The abundance of online data in APAC, including social media posts, news articles, and public speeches, provides ample material for deepfake creators to use in generating realistic fabrications.

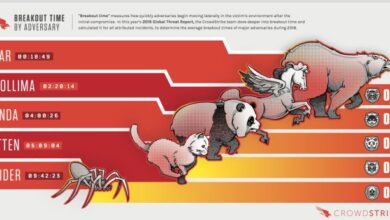

Deepfake Detection and Mitigation Strategies

Deepfakes, the synthetic media generated by artificial intelligence (AI) to manipulate and create realistic fake content, pose a significant threat to enterprises in APAC. Recognizing and addressing this threat is crucial for safeguarding reputation, protecting business interests, and maintaining trust in the digital ecosystem.

This section delves into the strategies for detecting and mitigating deepfake risks, exploring the role of AI and machine learning in combating these threats.

Best Practices for Detecting Deepfakes

Detecting deepfakes requires a multi-faceted approach that combines technological tools, human expertise, and a strong awareness of potential vulnerabilities. Enterprises in APAC can leverage the following best practices to enhance their detection capabilities:

- Analyze Facial Micro-expressions: Deepfakes, especially those produced by earlier-generation tools, often struggle to replicate subtle facial behavior such as natural eye blinks or lip movements with the accuracy of real video. Unnatural blinking or stiff micro-expressions can be a valuable indicator that footage is not authentic.

- Examine Lighting and Shadows: Inconsistent lighting or shadows across the face can indicate a deepfake, because deepfake algorithms often struggle to replicate how light interacts with real-world objects.

- Assess Background Inconsistencies: Deepfakes may exhibit inconsistencies in the background of the video, such as blurry areas or objects that appear out of place.

- Look for Artifacts and Blurs: Deepfakes can leave behind artifacts or blurs in the image or video, particularly around the edges of the face or other objects. Trained AI models can detect these anomalies.

- Analyze Audio Inconsistencies: Deepfakes can produce unnatural-sounding audio, such as speech that is out of sync with lip movements or that lacks natural variation in tone and inflection.
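
The eye-blink cue above can be turned into a simple screening heuristic. The sketch below assumes that per-frame eye landmarks have already been extracted by some face-landmark detector (not shown). It computes the eye aspect ratio (EAR) from six landmarks per eye, counts blinks as runs of "closed" frames, and flags clips whose blink rate is unusually low. The thresholds (`closed_thresh`, `min_frames`, `min_rate_per_min`) are illustrative assumptions, not calibrated values. Newer generators often reproduce blinking well, so a low rate is at most one signal among many.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(p: Sequence[Point]) -> float:
    """EAR from six eye landmarks p1..p6 (p1 and p4 are the eye corners).

    EAR = (|p2 - p6| + |p3 - p5|) / (2 * |p1 - p4|); it drops toward 0 as the eye closes.
    """
    p1, p2, p3, p4, p5, p6 = p
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ear: Sequence[float], closed_thresh: float = 0.2, min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames with EAR below `closed_thresh`."""
    blinks = 0
    run = 0
    for value in ear:
        if value < closed_thresh:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # the clip ended while the eyes were closed
        blinks += 1
    return blinks


def low_blink_rate(ear: Sequence[float], fps: float, min_rate_per_min: float = 6.0) -> Tuple[float, bool]:
    """Return (blinks per minute, flagged). The threshold is an assumption, not a standard."""
    if fps <= 0 or not ear:
        raise ValueError("need a positive frame rate and at least one frame")
    minutes = len(ear) / fps / 60.0
    rate = count_blinks(ear) / minutes
    return rate, rate < min_rate_per_min


# Hypothetical input: one minute at 30 fps, eyes open (EAR 0.3) except three 3-frame blinks.
series = [0.3] * 1800
for start in (300, 900, 1500):
    series[start:start + 3] = [0.1] * 3
print(low_blink_rate(series, fps=30))  # (3.0, True)
```

In practice a flag like this would only route a clip to closer inspection, never label it a deepfake on its own.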

AI and Machine Learning for Deepfake Detection

AI and machine learning play a pivotal role in deepfake detection. These technologies can be trained on vast datasets of real and synthetic media to identify subtle patterns and anomalies that indicate a deepfake.

- Deep Learning Algorithms: Deep learning algorithms, such as convolutional neural networks (CNNs), can be trained to analyze images and videos, detecting inconsistencies in facial features, lighting, and other visual cues.

- Machine Learning Models: Machine learning models can be used to analyze audio and text, identifying inconsistencies in speech patterns, language usage, and other linguistic features.

- Automated Deepfake Detection Tools: Several automated tools leverage AI and machine learning to detect deepfakes. These tools can be integrated into enterprise security systems to provide real-time monitoring and alerting.
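
The monitoring side of such an integration can be sketched independently of any particular model. The sketch assumes that a detection classifier (hypothetical here) emits, for each frame, a probability that the frame is synthetic. It smooths those scores over a sliding window and raises one alert each time the windowed average crosses a threshold, so a single noisy frame does not page the security team. `window` and `threshold` are illustrative assumptions that would need tuning against an organization’s own tolerance for false positives.

```python
from collections import deque
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass
class Alert:
    frame_index: int     # frame at which the windowed score first crossed the threshold
    window_score: float  # mean per-frame score over the window at that point


def monitor(scores: Iterable[float], window: int = 30, threshold: float = 0.7) -> Iterator[Alert]:
    """Yield an Alert when the rolling mean of per-frame 'synthetic' scores reaches `threshold`.

    `scores` would come from a detection model (hypothetical here). Only one alert is emitted
    per excursion above the threshold; the monitor re-arms once the mean drops back below it.
    """
    if window <= 0:
        raise ValueError("window must be positive")
    buf: deque = deque(maxlen=window)
    alerting = False
    for i, score in enumerate(scores):
        buf.append(score)
        if len(buf) < window:
            continue  # not enough frames yet for a stable average
        mean = sum(buf) / window
        if mean >= threshold and not alerting:
            alerting = True
            yield Alert(frame_index=i, window_score=mean)
        elif mean < threshold:
            alerting = False


# Hypothetical stream: 50 frames scored as likely real, then 50 scored as likely synthetic.
stream = [0.1] * 50 + [0.9] * 50
for alert in monitor(stream, window=10, threshold=0.7):
    print(alert.frame_index, round(alert.window_score, 2))  # 57 0.74
```

The alert fires a few frames after the switch because the window must fill with enough high scores; a shorter window reacts faster at the cost of more false alarms.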

Deepfake Risk Management Framework

Developing a comprehensive deepfake risk management framework is crucial for enterprises in APAC. This framework should include the following key elements:

- Risk Assessment: Identify and assess the potential risks posed by deepfakes to the enterprise, considering factors such as industry, target audience, and potential impact on reputation and business operations.

- Policy and Procedures: Establish clear policies and procedures for handling deepfake incidents, including reporting protocols, investigation procedures, and communication strategies.

- Technology Implementation: Implement deepfake detection tools and technologies, including AI-powered solutions, to enhance the ability to identify and mitigate risks.

- Employee Training: Train employees on deepfake awareness, detection techniques, and appropriate response protocols.

- Collaboration and Information Sharing: Establish partnerships with other organizations, industry associations, and law enforcement agencies to share information, best practices, and resources on deepfake threats.
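
The risk-assessment step is often run as a likelihood-times-impact register. The sketch below shows one minimal way to express that. The 1-to-5 scales, the rating bands, and the example scenarios (drawn from the kinds of incidents this article describes) are hypothetical placeholders that an enterprise would replace with its own criteria.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DeepfakeRisk:
    scenario: str
    likelihood: int  # 1 (rare) to 5 (almost certain); scale is an assumption
    impact: int      # 1 (minor) to 5 (severe); scale is an assumption

    def __post_init__(self) -> None:
        for name in ("likelihood", "impact"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact

    @property
    def rating(self) -> str:
        # Hypothetical bands over the 1..25 score range.
        if self.score >= 15:
            return "high"
        if self.score >= 8:
            return "medium"
        return "low"


def prioritize(risks: List[DeepfakeRisk]) -> List[DeepfakeRisk]:
    """Highest-scoring risks first, so mitigation effort goes where exposure is greatest."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


register = [
    DeepfakeRisk("Cloned CEO voice requests an urgent wire transfer", likelihood=4, impact=5),
    DeepfakeRisk("Fake spokesperson video announces a product recall", likelihood=2, impact=4),
    DeepfakeRisk("Synthetic ad uses a celebrity without consent", likelihood=3, impact=2),
]
for risk in prioritize(register):
    print(risk.score, risk.rating, risk.scenario)
```

With these placeholder values the cloned-voice scenario scores 20 (high) and is listed first, followed by the recall video (8, medium) and the unauthorized endorsement (6, low).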

Mitigation Strategies for Deepfake Threats

Once a deepfake is detected, enterprises must take swift and decisive action to mitigate the potential damage. Effective mitigation strategies include:

- Rapid Response: Respond promptly to deepfake incidents to minimize the spread of misinformation and limit potential damage to reputation.

- Fact-Checking and Verification: Verify the authenticity of content using trusted sources and independent fact-checking organizations.

- Legal Action: Consider legal options, such as filing lawsuits against those responsible for creating or disseminating deepfakes, if appropriate.

- Public Awareness Campaigns: Educate the public about the dangers of deepfakes and how to identify and verify information.

- Collaboration with Social Media Platforms: Work with social media platforms to flag and remove deepfake content.
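
For the verification step, one simple technique an enterprise controls end to end is to publish cryptographic hashes of its official media and check circulating copies against them. The sketch assumes a hypothetical in-house registry. An exact SHA-256 match only proves that a file is byte-identical to an official release. Re-encoded or cropped copies will not match even when genuine, so a miss means "unverified, needs review", not "fake".

```python
import hashlib
from typing import Dict, Optional


def sha256_of(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


class OfficialMediaRegistry:
    """Hypothetical registry mapping SHA-256 digests of official releases to descriptions."""

    def __init__(self) -> None:
        self._by_digest: Dict[str, str] = {}

    def register(self, data: bytes, description: str) -> str:
        digest = sha256_of(data)
        self._by_digest[digest] = description
        return digest

    def lookup(self, data: bytes) -> Optional[str]:
        """Return the matching description, or None if the bytes match no official release."""
        return self._by_digest.get(sha256_of(data))


registry = OfficialMediaRegistry()
official = b"placeholder bytes standing in for the CEO's published statement video"
registry.register(official, "CEO statement, published on the corporate newsroom")

print(registry.lookup(official))                # CEO statement, published on the corporate newsroom
print(registry.lookup(official + b"tampered"))  # None
```

Real video files would be hashed in chunks rather than loaded into memory at once, and the registry itself would need to be published somewhere partners and fact-checkers already trust.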

Legal and Regulatory Landscape

The legal and regulatory landscape surrounding deepfakes in APAC is rapidly evolving, driven by the increasing prevalence of deepfake technology and its potential for misuse. Governments and regulatory bodies across the region are grappling with the challenges of balancing the need to protect individuals and society from the harms of deepfakes while fostering innovation and promoting freedom of expression.

Challenges in Regulating Deepfake Technology

Regulating deepfake technology presents a complex challenge due to the inherent nature of the technology and its diverse applications. Deepfakes are often difficult to detect, making it challenging to identify and prosecute instances of misuse. The technology’s potential for both harm and benefit further complicates the regulatory landscape.

- Defining Deepfakes: A key challenge is establishing a clear definition of what constitutes a deepfake. The definition should be broad enough to encompass various forms of deepfake manipulation while being specific enough to avoid unintended consequences. For instance, the definition should differentiate between harmless deepfakes created for entertainment purposes and those intended to deceive or cause harm.

- Identifying Intent: Determining the intent behind the creation and dissemination of deepfakes is crucial for effective regulation. A deepfake created for entertainment purposes may not require the same level of regulation as one used to spread disinformation or damage an individual’s reputation.

- Balancing Free Speech: Regulating deepfakes raises concerns about potential restrictions on freedom of expression. Striking a balance between protecting individuals from harm and safeguarding free speech rights is a delicate task.

- Technological Advancements: Deepfake technology is constantly evolving, making it difficult to create regulations that keep pace. New techniques and applications emerge regularly, requiring ongoing updates to regulatory frameworks.

Potential Legal and Regulatory Frameworks

Several potential legal and regulatory frameworks are being explored to address deepfake risks. These frameworks aim to establish clear guidelines for the creation, dissemination, and use of deepfakes, while also addressing concerns about freedom of expression and innovation.

- Disclosure Requirements: Requiring the disclosure of deepfakes, particularly in contexts where they could be used to deceive or mislead, could mitigate potential harm. For example, social media platforms could implement policies requiring users to label content created using deepfake technology.

- Liability Frameworks: Establishing clear liability frameworks for individuals or organizations involved in the creation or dissemination of harmful deepfakes could deter malicious use. This could include holding individuals or entities responsible for damages caused by deepfakes.

- Industry Self-Regulation: Encouraging industry self-regulation through the development of ethical guidelines and best practices can help address deepfake risks. Technology companies can play a proactive role in developing tools and technologies to detect and mitigate deepfakes.

- International Cooperation: Deepfakes are a global issue, necessitating international cooperation to develop effective regulatory frameworks. Sharing best practices, coordinating enforcement efforts, and developing common standards for deepfake detection and mitigation are crucial steps.
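
A disclosure requirement ultimately becomes a rule in a platform’s upload pipeline. The sketch below shows one hypothetical shape for such a rule: content is labeled as synthetic if the uploader declares it, and undeclared content that a detector scores above a threshold is held for human review rather than published silently. The field names, the `review_threshold` value, and the three outcomes are assumptions for illustration; no specific platform’s policy is being described.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Decision(Enum):
    PUBLISH = "publish"
    PUBLISH_WITH_LABEL = "publish_with_synthetic_label"
    HOLD_FOR_REVIEW = "hold_for_review"


@dataclass
class Upload:
    declared_synthetic: bool                # the uploader's own disclosure
    detector_score: Optional[float] = None  # hypothetical model output in [0, 1], if available


def disclosure_decision(upload: Upload, review_threshold: float = 0.8) -> Decision:
    if upload.declared_synthetic:
        # Disclosed synthetic media is allowed but must carry a visible label.
        return Decision.PUBLISH_WITH_LABEL
    if upload.detector_score is not None and upload.detector_score >= review_threshold:
        # Undisclosed but likely synthetic: a human decides before it goes live.
        return Decision.HOLD_FOR_REVIEW
    return Decision.PUBLISH


print(disclosure_decision(Upload(declared_synthetic=False, detector_score=0.95)).value)  # hold_for_review
```

Routing undeclared, high-scoring uploads to review rather than blocking them outright reflects the free-speech concern above: detectors make mistakes, and a human check limits wrongful removals.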

Case Studies and Real-World Examples

The rise of deepfakes has brought about a new wave of challenges for enterprises in APAC. Understanding real-world incidents and their impact can provide valuable insights into the risks and opportunities associated with deepfakes. This section will delve into case studies of enterprises in APAC that have been affected by deepfakes, explore successful detection and mitigation efforts, and discuss lessons learned from real-world incidents.

Deepfake-Related Incidents in APAC

Several high-profile cases involving deepfakes have made headlines in APAC, highlighting the growing threat posed by this technology.

- In 2020, a deepfake video of a prominent Indian politician making inflammatory remarks went viral, sparking widespread public outrage and raising concerns about the potential for deepfakes to be used for political manipulation.

- A case in Singapore involved a deepfake video of a company CEO being used to authorize a fraudulent transaction, highlighting the vulnerability of businesses to financial fraud through deepfakes.

- In 2020, a deepfake video of a Chinese celebrity endorsing a product without their consent was circulated online, raising concerns about the potential for deepfakes to be used for commercial exploitation.

Successful Deepfake Detection and Mitigation Efforts

While deepfakes pose significant challenges, enterprises are developing strategies to mitigate their risks.

- Several companies in APAC have implemented deepfake detection tools that use artificial intelligence (AI) algorithms to analyze video and audio content for signs of manipulation. These tools can identify inconsistencies in facial expressions, lip movements, and other subtle cues that can indicate a deepfake.

- Some organizations have adopted policies and procedures to educate employees about the risks of deepfakes and train them to identify potential deepfake content. This includes awareness campaigns, workshops, and simulations that help employees develop critical thinking skills and identify potential red flags.

- Several financial institutions in APAC have implemented multi-factor authentication (MFA) systems to enhance security and prevent unauthorized access to accounts, even if a deepfake video is used to impersonate a legitimate user.
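
MFA helps here because a deepfake can imitate a face or a voice but cannot produce a one-time code from a device that only the real user holds. As an illustration of one common second factor (not a description of any particular institution’s system), the sketch below implements time-based one-time passwords as specified in RFC 4226 (HOTP) and RFC 6238 (TOTP), using only the Python standard library. Secret provisioning, rate limiting, and replay protection are omitted.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation offset from the last byte
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, timestamp: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the current time step."""
    ts = time.time() if timestamp is None else timestamp
    return hotp(secret, int(ts // step), digits)


def verify_totp(secret: bytes, submitted: str, timestamp: Optional[float] = None,
                step: int = 30, digits: int = 6, window: int = 1) -> bool:
    """Accept codes from the current step +/- `window` steps to tolerate clock drift."""
    ts = time.time() if timestamp is None else timestamp
    current = int(ts // step)
    return any(
        hmac.compare_digest(hotp(secret, c, digits), submitted)
        for c in range(current - window, current + window + 1)
        if c >= 0
    )


secret = b"12345678901234567890"  # RFC test secret only; real secrets come from secure provisioning
print(totp(secret, timestamp=59))                   # 287082
print(verify_totp(secret, "287082", timestamp=59))  # True
```

`hmac.compare_digest` is used for the comparison so that verification time does not leak how many leading digits of a guess were correct.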

Lessons Learned from Real-World Deepfake Incidents

The incidents discussed above offer valuable lessons for enterprises in APAC.

- The rapid advancement of deepfake technology underscores the importance of proactive measures to address the associated risks. Enterprises need to stay informed about the latest developments in deepfake technology and implement appropriate security measures to mitigate potential threats.

- Deepfakes can be used for a wide range of malicious purposes, including financial fraud, political manipulation, and reputational damage. Enterprises need to be prepared to respond to these threats effectively and develop robust strategies for damage control.

- Collaboration between industry stakeholders, government agencies, and research institutions is crucial for developing effective deepfake detection and mitigation strategies. Sharing best practices and knowledge can help enterprises stay ahead of the curve and protect themselves from deepfake-related threats.

Future Implications and Recommendations

The rapid evolution of deepfake technology in APAC presents both exciting opportunities and significant challenges for enterprises. Understanding the emerging trends and potential implications is crucial for navigating this complex landscape effectively. This section will explore the future of deepfakes in APAC, provide recommendations for enterprises, and highlight the importance of collaboration in mitigating deepfake threats.

Emerging Trends and Future Implications

Deepfake technology is expected to become even more sophisticated and accessible in the coming years, posing new challenges for enterprises in APAC. Several emerging trends are likely to shape the future of deepfakes:

- Increased Accessibility and Ease of Use: Deepfake creation tools are becoming increasingly user-friendly and readily available, making it easier for individuals with limited technical expertise to create convincing deepfakes. This democratization of deepfake technology could lead to a surge in the number of deepfakes created and disseminated.

- Integration with Other Technologies: Deepfakes are increasingly being combined with other technologies, such as virtual reality (VR) and augmented reality (AR), to create more realistic and immersive experiences. This convergence could produce deepfakes that are even more difficult to detect.

- Growth of Deepfake-as-a-Service (DaaS): The emergence of deepfake-as-a-service platforms will allow individuals and organizations to outsource the creation of deepfakes to third-party providers. This could further accelerate the proliferation of deepfakes and make it more challenging to trace their origins.

- Deepfakes in Emerging Technologies: The synthetic-media techniques behind deepfakes are being explored for use in fields such as autonomous vehicles, healthcare, and education. While these applications have potential benefits, they also raise concerns about ethics and potential misuse.

These trends highlight the growing need for enterprises in APAC to proactively address the risks associated with deepfakes. Failing to do so could lead to significant reputational damage, financial losses, and legal consequences.

Recommendations for Enterprises

To prepare for the evolving deepfake landscape, enterprises in APAC should consider implementing the following recommendations:

- Develop a Deepfake Risk Management Strategy: Enterprises should develop a comprehensive risk management strategy that identifies potential deepfake threats, assesses the likelihood and impact of these threats, and outlines mitigation strategies.

- Invest in Deepfake Detection and Mitigation Technologies: Enterprises should invest in advanced deepfake detection and mitigation technologies, such as AI-powered tools that can identify and flag potential deepfakes. They should also explore technologies that help authenticate content and verify the identity of people appearing in videos and images.

- Educate Employees and Stakeholders: Enterprises should educate their employees and stakeholders about the risks associated with deepfakes and how to identify and report potential instances of deepfake manipulation. This includes providing training on the latest deepfake technologies and best practices for identifying deepfakes.

- Collaborate with Industry Partners: Enterprises should collaborate with industry partners, government agencies, and academic institutions to share information, develop best practices, and collectively address the challenges posed by deepfakes. This collaborative approach can help accelerate the development of effective deepfake detection and mitigation solutions.

- Advocate for Policy and Regulatory Changes: Enterprises should advocate for clear legal frameworks and regulatory policies that address the use and misuse of deepfake technology. This includes advocating for regulations that require the disclosure of deepfake content and hold individuals and organizations accountable for creating and disseminating harmful deepfakes.
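
Content-authentication technologies generally work by binding a cryptographic tag or signature to a description of the media. Public provenance schemes such as the C2PA specification use public-key certificates so that anyone can verify a file. The sketch below is a deliberately simplified, internal-only variant that uses an HMAC with a shared secret, for example so that finance staff can confirm that an executive video message was really issued by the communications team. The manifest fields, the issuer name, and the key handling are hypothetical.

```python
import hashlib
import hmac
import json
from typing import Dict


def sign_manifest(media: bytes, issuer: str, issued_at: str, key: bytes) -> Dict[str, str]:
    """Build a manifest describing `media` and attach an HMAC-SHA256 tag over it."""
    manifest = {
        "sha256": hashlib.sha256(media).hexdigest(),
        "issuer": issuer,
        "issued_at": issued_at,
    }
    payload = json.dumps(manifest, sort_keys=True).encode("utf-8")  # canonical field order
    manifest["tag"] = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return manifest


def verify_manifest(media: bytes, manifest: Dict[str, str], key: bytes) -> bool:
    """True only if the tag is valid AND the media bytes match the hash in the manifest."""
    claimed = {k: v for k, v in manifest.items() if k != "tag"}
    payload = json.dumps(claimed, sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest.get("tag", "")):
        return False  # manifest was altered or signed with a different key
    return hmac.compare_digest(claimed.get("sha256", ""), hashlib.sha256(media).hexdigest())


key = b"hypothetical-shared-secret"  # in practice, fetched from a key-management service
video = b"placeholder bytes standing in for a video file"
m = sign_manifest(video, "corporate-communications", "2024-01-01T09:00:00Z", key)
print(verify_manifest(video, m, key))              # True
print(verify_manifest(video + b"edited", m, key))  # False
```

Because anyone holding the shared key can also create valid tags, this approach only suits verification inside a trusted group; public verification calls for asymmetric signatures.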

The Role of Collaboration and Information Sharing

Collaboration and information sharing are crucial in combating deepfake threats. Enterprises should work together to:

- Establish Industry-Wide Standards: Collaborate to develop industry-wide standards for identifying and reporting deepfakes, ensuring consistency and interoperability across different platforms and technologies.

- Share Best Practices and Lessons Learned: Share best practices and lessons learned from real-world experiences with deepfakes, enabling organizations to learn from each other and develop more effective mitigation strategies.

- Develop Joint Research Initiatives: Partner with academic institutions and research organizations to conduct joint research on deepfake detection and mitigation technologies, accelerating the development of innovative solutions.

- Promote Public Awareness: Collaborate with government agencies and media outlets to raise public awareness about the risks of deepfakes and empower individuals to identify and report potential instances of deepfake manipulation.
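
Consistent reporting across organizations needs an agreed record format. No existing industry standard is implied here. The sketch below is a hypothetical minimal schema that shares hashes and metadata rather than the media itself, which avoids redistributing the deepfake while still letting partners recognize exact copies they encounter.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class DeepfakeIncidentReport:
    """Hypothetical shared schema; field names are illustrative, not an industry standard."""
    report_id: str
    reported_at: str  # ISO 8601 timestamp
    media_type: str   # e.g. "video", "audio", "image"
    target: str       # who or what was impersonated
    sha256_hashes: List[str] = field(default_factory=list)  # exact-copy fingerprints, not the media
    channels: List[str] = field(default_factory=list)       # where the content was seen circulating
    status: str = "suspected"  # e.g. "suspected", "confirmed", "taken_down"

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def from_json(cls, text: str) -> "DeepfakeIncidentReport":
        return cls(**json.loads(text))


report = DeepfakeIncidentReport(
    report_id="INC-0001",
    reported_at="2024-01-01T09:00:00Z",
    media_type="audio",
    target="Chief Financial Officer (voice clone)",
    sha256_hashes=[hashlib.sha256(b"placeholder media bytes").hexdigest()],
    channels=["messaging app"],
)
shared = report.to_json()  # what would be sent to a partner organization
print(DeepfakeIncidentReport.from_json(shared) == report)  # True
```

Serializing with sorted keys keeps records byte-stable, which makes them easier to deduplicate when the same incident is reported by several organizations.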

By working together, enterprises in APAC can create a more resilient and secure environment that is better equipped to address the evolving challenges posed by deepfake technology.