Generative AI Cloud Security: A New Frontier

Generative AI, with its ability to create new content, has revolutionized industries, but its rapid adoption in the cloud has brought a new set of security challenges.

From data poisoning to model theft, the risks are real and require a proactive approach to ensure the integrity and safety of these powerful technologies.

This blog post dives into the complexities of securing generative AI in the cloud, exploring the unique vulnerabilities, essential security measures, and emerging threats. We’ll discuss how to protect sensitive data, secure models from malicious attacks, and navigate the ethical considerations surrounding this rapidly evolving field.

Introduction to Generative AI in the Cloud

Generative AI is a powerful new technology that is rapidly transforming many industries. This type of artificial intelligence is capable of creating new content, such as text, images, audio, video, and code, based on the data it has been trained on. Generative AI has the potential to revolutionize the way we work, learn, and create.

Cloud computing plays a crucial role in the development and deployment of generative AI. The scalability, flexibility, and cost-effectiveness of cloud platforms make them ideal for training and running large generative AI models. Cloud providers offer a wide range of services and tools that simplify the process of building and deploying generative AI applications.

However, the use of generative AI in the cloud also presents unique security challenges. The complex nature of generative AI models and the vast amounts of data they require make them vulnerable to various security threats.

Generative AI Applications

Generative AI has a wide range of applications across various industries. Some of the most prominent applications include:

- Content creation: Generative AI can be used to create various types of content, such as articles, blog posts, poems, scripts, music, and even images and videos. For example, AI-powered tools can generate marketing copy, write product descriptions, or create realistic images for social media campaigns.

- Code generation: Generative AI models can assist developers in writing code by suggesting code snippets, identifying errors, and even generating entire functions. This can significantly speed up the development process and reduce the risk of errors.

- Drug discovery: Generative AI can be used to design new drugs and therapies by generating novel molecular structures and predicting their potential efficacy.

- Customer service: Generative AI-powered chatbots can provide personalized customer support, answer frequently asked questions, and handle basic customer requests.

Role of Cloud Computing in Generative AI

Cloud computing plays a critical role in the development and deployment of generative AI by providing:

- Scalability: Generative AI models require vast amounts of computing power and storage capacity, which can be easily scaled up and down on cloud platforms as needed.

- Flexibility: Cloud platforms offer a wide range of services and tools that can be customized to meet the specific requirements of generative AI applications.

- Cost-effectiveness: Cloud computing reduces the need for expensive upfront hardware investments, making generative AI accessible to businesses of all sizes.

- Accessibility: Cloud-based generative AI platforms can be accessed from anywhere with an internet connection, enabling collaboration and remote work.

Security Challenges of Generative AI in the Cloud

The use of generative AI in the cloud presents several unique security challenges:

- Data privacy and security: Generative AI models are trained on vast amounts of data, which may contain sensitive information. Protecting this data from unauthorized access and misuse is critical.

- Model poisoning: Attackers can intentionally introduce malicious data into the training dataset (an attack often called data poisoning) to manipulate the model’s output or create vulnerabilities.

- Adversarial attacks: Attackers can use carefully crafted inputs to fool generative AI models and cause them to generate unintended outputs. This can be used to spread misinformation, generate harmful content, or even compromise sensitive systems.

- Intellectual property theft: Generative AI models can be used to generate content that closely resembles existing copyrighted works, raising concerns about intellectual property theft.

Security Risks Associated with Generative AI in the Cloud

Generative AI models, trained on vast datasets in the cloud, offer unparalleled capabilities. However, this transformative technology comes with inherent security risks that require careful consideration and mitigation strategies. This section explores the potential attack vectors targeting generative AI models and the cloud infrastructure that supports them, highlighting the vulnerabilities of these models to data poisoning, model theft, and adversarial attacks. It also discusses the risks associated with unauthorized access to sensitive data used for training and inference.

Staying ahead of these threats is a constant game of cat and mouse. The key to securing generative AI models lies in robust data governance, secure infrastructure, and constant vigilance against emerging vulnerabilities.

Data Poisoning

Data poisoning is a type of attack that aims to corrupt the training data used to build a generative AI model. This can result in a model that generates biased, inaccurate, or even harmful outputs. Attackers can introduce malicious data points into the training set, which can influence the model’s learning process and ultimately compromise its performance.

- Example: Imagine a spam filter trained on a dataset of emails. If an attacker injects spam-like emails that are labeled as legitimate into the training data, the filter may become less effective at identifying spam, allowing unwanted messages to reach users.
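
To make the spam filter example concrete, here is a minimal sketch of a poisoning attack against a toy word-count classifier. Everything in it is an assumption made for illustration: the `train` and `classify` helpers, the tiny dataset, and the poisoned samples are hypothetical, and a real spam filter would be far more sophisticated.

```python
from collections import Counter

def train(examples):
    """Count how often each word appears in spam and in ham messages."""
    counts = {"spam": Counter(), "ham": Counter()}
    for text, label in examples:
        counts[label].update(text.lower().split())
    return counts

def classify(counts, text):
    """Label a message by which class has seen its words more often."""
    words = text.lower().split()
    spam_score = sum(counts["spam"][w] for w in words)
    ham_score = sum(counts["ham"][w] for w in words)
    return "spam" if spam_score > ham_score else "ham"

clean_data = [
    ("win a free prize now", "spam"),
    ("free money claim now", "spam"),
    ("meeting notes attached", "ham"),
    ("lunch tomorrow at noon", "ham"),
]

# Hypothetical poisoned samples: spam-like text deliberately labeled as ham.
poisoned_data = clean_data + [("free prize free money", "ham")] * 3

message = "claim your free prize"
print(classify(train(clean_data), message))     # spam
print(classify(train(poisoned_data), message))  # ham
```

Three mislabeled samples are enough to flip the verdict here because the dataset is tiny. Against a production model an attacker would typically need many more, but the mechanism is the same.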

Model Theft

Generative AI models are valuable assets, and attackers may seek to steal them for malicious purposes or to gain a competitive advantage. Model theft can occur through various means, including:

- Unauthorized Access: Attackers can gain access to the cloud infrastructure where the model is stored and download it without authorization.

- Reverse Engineering: Attackers can attempt to reconstruct the model’s architecture and parameters by analyzing its outputs or by exploiting vulnerabilities in the model’s training process.

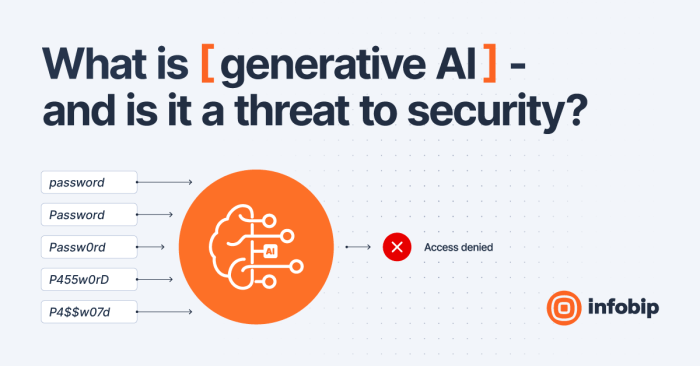

Adversarial Attacks

Adversarial attacks are designed to manipulate the input data provided to a generative AI model, causing it to produce incorrect or unexpected outputs. These attacks exploit the model’s inherent vulnerabilities and can be used to bypass security measures, spread misinformation, or even compromise critical systems.

- Example: An attacker could create an image that appears benign to a human but is classified as a different object by an image recognition model, potentially leading to misidentification and security breaches.
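
The same idea can be sketched on a much smaller scale. The snippet below nudges each input feature of a toy linear classifier by a small amount in the direction that lowers its score, which is the intuition behind gradient-sign attacks. The weights, bias, input, and `epsilon` are all made-up values for illustration, and real attacks target far larger models.

```python
def score(weights, bias, x):
    """Linear decision score: positive means class 1."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias

def predict(weights, bias, x):
    return 1 if score(weights, bias, x) > 0 else 0

def sign(value):
    return (value > 0) - (value < 0)

def perturb(weights, x, epsilon):
    """Shift each feature by at most epsilon in the direction that lowers the score.

    For a linear model the gradient of the score with respect to the input
    is just the weight vector, so the sign of each weight gives the direction.
    """
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]

weights = [0.9, -0.6, 0.4]  # hypothetical trained weights
bias = -0.1
x = [0.3, 0.2, 0.1]         # original input, classified as 1

x_adv = perturb(weights, x, epsilon=0.1)
print(predict(weights, bias, x))      # 1
print(predict(weights, bias, x_adv))  # 0
```

No feature moved by more than 0.1, yet the prediction flipped. That is why small, hard-to-notice input changes are such a concern.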

Unauthorized Access to Sensitive Data

Generative AI models often rely on vast datasets containing sensitive information, such as personal data, financial records, or proprietary business data. Unauthorized access to these datasets can lead to privacy violations, data breaches, and reputational damage.

- Example: A generative AI model trained on a dataset of medical records could be used to generate realistic-looking fake medical records, potentially leading to identity theft and fraud.

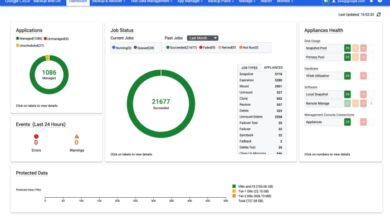

Security Measures for Generative AI in the Cloud

Generative AI in the cloud offers immense potential, but it also introduces new security challenges. Safeguarding generative AI models and their underlying infrastructure is crucial to prevent data breaches, model manipulation, and other threats. Implementing robust security measures is essential for responsible and secure use of this technology.

Data Security

Protecting the data used to train and operate generative AI models is paramount. Data security ensures the integrity and confidentiality of sensitive information.

- Data Encryption: Encrypting data at rest and in transit is crucial to prevent unauthorized access. This involves using strong encryption, such as AES-256 for data stored in databases and file systems and TLS for data in transit.

- Data Access Control: Implement granular access controls to restrict access to sensitive data based on user roles and permissions. This ensures only authorized individuals can access and modify data.

- Data Masking and Anonymization: Employ techniques like data masking and anonymization to protect sensitive information during training and testing. This involves replacing sensitive data with synthetic or random values while preserving data structure and integrity.
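
As a rough illustration of data masking, the sketch below replaces email addresses with keyed-hash tokens before text is used for training. The regular expression, the `user_` token format, and the hard-coded key are assumptions for the example. Note that keyed hashing is pseudonymization rather than full anonymization, since anyone holding the key can re-derive the tokens.

```python
import hashlib
import hmac
import re

# Simplified email pattern for illustration; it will not match every valid address.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")

def pseudonymize(value: str, key: bytes) -> str:
    """Replace a value with a keyed hash so equal inputs map to equal tokens."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"user_{digest[:12]}"

def mask_emails(text: str, key: bytes) -> str:
    """Swap every email address in the text for its pseudonymous token."""
    return EMAIL_PATTERN.sub(lambda match: pseudonymize(match.group(0), key), text)

# Placeholder key: in practice this would come from a secrets manager.
key = b"example-key-not-for-production"

record = "Contact alice@example.com or bob@example.org about the invoice."
masked = mask_emails(record, key)
print(masked)
```

Because the same address always maps to the same token, the masked data keeps its structure (for example, how often one customer appears) without exposing the address itself.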

Model Security

Generative AI models themselves can be targets of attacks, such as model poisoning, model extraction, and model inversion. Protecting model integrity and preventing unauthorized access is vital.

- Model Integrity Verification: Regularly verify the integrity of generative AI models to ensure they haven’t been tampered with or compromised. This can involve using techniques like model watermarking and model provenance tracking.

- Model Access Control: Restrict access to trained models based on user roles and permissions. Only authorized individuals should be able to access, modify, or deploy models. This prevents unauthorized model manipulation and deployment.

- Model Sandboxing: Run generative AI models in isolated environments (sandboxes) to prevent them from interacting with other systems and potentially compromising sensitive data.
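
Watermarking and provenance tracking are involved topics, so the sketch below swaps in a simpler integrity check: comparing a model file’s SHA-256 digest against one recorded at release time. The file name and contents are stand-ins, and the sketch assumes the recorded digest is stored somewhere an attacker cannot modify.

```python
import hashlib
import hmac
import tempfile
from pathlib import Path

def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large model artifacts need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def verify_artifact(path: Path, expected_hex: str) -> bool:
    """Return True only if the file still matches the recorded digest."""
    return hmac.compare_digest(sha256_of_file(path), expected_hex)

with tempfile.TemporaryDirectory() as tmp:
    model_path = Path(tmp) / "model.bin"  # hypothetical artifact name
    model_path.write_bytes(b"pretend these are model weights")
    recorded = sha256_of_file(model_path)  # digest recorded at release time

    ok_before = verify_artifact(model_path, recorded)

    # Simulate tampering with the stored model.
    model_path.write_bytes(b"pretend these are tampered weights")
    ok_after = verify_artifact(model_path, recorded)

print(ok_before, ok_after)  # True False
```

A check like this could run before every deployment, so a modified artifact is rejected before it ever serves traffic.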

Infrastructure Security

Securing the cloud infrastructure hosting generative AI models is essential to prevent attacks on the underlying systems. This includes protecting the servers, networks, and other components.

- Network Segmentation: Segment the network to isolate generative AI workloads from other systems and prevent lateral movement of attacks. This helps contain security breaches and limits the impact of potential compromises.

- Intrusion Detection and Prevention Systems (IDS/IPS): Deploy IDS/IPS to monitor network traffic for malicious activity and block suspicious connections. This provides real-time protection against network attacks.

- Vulnerability Management: Regularly scan the infrastructure for vulnerabilities and patch them promptly. This ensures the system is up-to-date and protected against known exploits.
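
In practice, segmentation is enforced by the cloud provider’s network controls, not by application code. Still, the underlying rule is easy to picture: only traffic from approved subnets may reach the AI workload. The sketch below expresses that rule with Python’s standard `ipaddress` module, using made-up subnet ranges.

```python
import ipaddress

# Hypothetical subnets allowed to reach the generative AI workload.
ALLOWED_SOURCES = [
    ipaddress.ip_network("10.20.0.0/24"),  # e.g. an inference gateway subnet
    ipaddress.ip_network("10.20.1.0/24"),  # e.g. a training pipeline subnet
]

def is_allowed(source_ip: str) -> bool:
    """Return True if the source address falls inside an approved subnet."""
    address = ipaddress.ip_address(source_ip)
    return any(address in network for network in ALLOWED_SOURCES)

print(is_allowed("10.20.0.15"))  # True
print(is_allowed("10.30.0.15"))  # False
```

Keeping the allow list short and explicit is the point: anything not listed is denied by default.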

Emerging Security Threats and Solutions

Generative AI’s power to create realistic and convincing content has led to a surge in security threats. The ability to manipulate data and generate deepfakes poses significant risks to individuals, organizations, and society as a whole. This section explores these emerging threats and examines how new security solutions are being developed to address them.

Deepfakes and Synthetic Data Generation

Deepfakes are synthetic media, often videos or audio, that have been manipulated to depict individuals saying or doing things they never actually did. These deepfakes can be used for malicious purposes, such as spreading misinformation, damaging reputations, and even influencing elections.

The generation of synthetic data, including images, text, and audio, is also a growing concern. Synthetic data can be used to train AI models, but it can also be used to create fake accounts, manipulate financial markets, and conduct other fraudulent activities.

- Deepfake Detection: Researchers are developing sophisticated algorithms to detect deepfakes by analyzing subtle inconsistencies in facial expressions, lip movements, and other visual cues. These detection methods rely on machine learning models trained on large datasets of real and fake media.

- Synthetic Data Verification: Techniques are being developed to identify and verify the authenticity of synthetic data. These methods often involve analyzing the statistical properties of the data, looking for patterns that are indicative of artificial generation.

Explainable AI and Adversarial Robustness

Explainable AI (XAI) is an emerging field that aims to make AI models more transparent and understandable. XAI techniques can help to identify potential vulnerabilities in AI systems and understand how they are making decisions. This increased transparency can help to mitigate the risks associated with generative AI by allowing security professionals to identify and address potential security flaws.

Adversarial robustness is another important area of research that focuses on developing AI models that are resilient to malicious attacks. These techniques aim to make AI models less susceptible to manipulation and to ensure that they continue to function correctly even when faced with adversarial inputs.

- Explainable AI for Deepfake Detection: XAI techniques can be used to explain how deepfake detection models work, making it easier to identify potential vulnerabilities and improve their accuracy.

- Adversarial Robustness for Synthetic Data Generation: Adversarial robustness techniques can be used to make synthetic data generation models more resistant to attacks that aim to manipulate the generated data.

Regulatory Frameworks for Generative AI

Regulatory frameworks are essential for mitigating the security risks associated with generative AI. These frameworks can establish guidelines for the development, deployment, and use of generative AI technologies, helping to ensure that they are used responsibly and ethically.

- Data Privacy and Security: Regulations such as the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA) address the collection, use, and protection of personal data. These regulations are relevant to generative AI because it often relies on large datasets that may contain sensitive information.

- Transparency and Accountability: Regulatory frameworks can require developers of generative AI systems to provide transparency about how their systems work and to be accountable for the outputs they generate. This can help to prevent the misuse of generative AI for malicious purposes.

Future Directions and Research Opportunities

The field of generative AI security in the cloud is rapidly evolving, presenting exciting opportunities for research and innovation. As generative AI models become more sophisticated and widely adopted, it is crucial to address the emerging security challenges and develop robust solutions.

Research Areas for Enhancing Generative AI Security

The advancement of generative AI security in the cloud necessitates dedicated research efforts in several key areas:

- Model Robustness and Adversarial Attacks: Researching techniques to improve the robustness of generative AI models against adversarial attacks is critical. This involves developing methods to detect and mitigate attacks that aim to manipulate model outputs or extract sensitive information.

- Data Privacy and Security: Protecting sensitive data used to train and operate generative AI models is paramount. Research should focus on developing privacy-preserving techniques, such as differential privacy and federated learning, to safeguard data while enabling model training and deployment.

- Explainability and Interpretability: Understanding the decision-making processes of generative AI models is essential for building trust and ensuring accountability. Research efforts should focus on developing techniques to explain model predictions and make them more transparent.

- Security Auditing and Monitoring: Developing effective security auditing and monitoring tools for generative AI systems is crucial for identifying vulnerabilities and potential threats. Research should focus on designing automated systems that can continuously assess model behavior and detect anomalies.
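
Differential privacy, mentioned above, is easiest to grasp with a small example. The sketch below applies the Laplace mechanism to a counting query: it adds random noise scaled to `1 / epsilon` so that any single record has only a limited effect on the published result. The dataset, the query, and the choice of `epsilon` are illustrative assumptions, and production systems would normally rely on a vetted library.

```python
import random

def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample zero-centered Laplace noise.

    The difference of two independent exponential samples with mean `scale`
    follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Return a noisy count of the records matching the predicate.

    Adding or removing one record changes a count by at most 1, so the
    sensitivity is 1 and the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)

rng = random.Random(42)                   # seeded only to make the demo repeatable
ages = [23, 35, 41, 29, 52, 67, 38, 44]  # hypothetical sensitive values

noisy = private_count(ages, lambda age: age >= 40, epsilon=0.5, rng=rng)
print(round(noisy, 2))
```

A smaller `epsilon` means more noise and stronger privacy. A larger one gives more accurate answers but weaker protection.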

Emerging Technologies for Securing Generative AI

Emerging technologies like blockchain and quantum computing are set to shape generative AI security, both as new defenses and as new threats:

- Blockchain: Blockchain technology can be leveraged to create secure and transparent data provenance systems for generative AI models. This can help track the origin and modifications of training data, improving trust and accountability.

- Quantum Computing: Sufficiently powerful quantum computers could break widely used public-key encryption methods, posing a significant threat to generative AI security. Research is ongoing to develop quantum-resistant cryptography algorithms that can protect generative AI systems from future attacks.
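
The provenance idea behind blockchain can be sketched with a simple hash chain, where each log entry commits to the one before it. This is only the core data structure and not a full blockchain (there is no distribution or consensus). The dataset name and actions are invented for the example.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first entry

def entry_hash(prev_hash: str, payload: dict) -> str:
    """Hash the payload together with the previous entry's hash."""
    body = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()

def append(log: list, payload: dict) -> None:
    """Add a provenance record that is linked to the current end of the log."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"prev": prev_hash, "payload": payload,
                "hash": entry_hash(prev_hash, payload)})

def verify(log: list) -> bool:
    """Recompute every hash and check that each entry links to the previous one."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != entry_hash(prev_hash, entry["payload"]):
            return False
        prev_hash = entry["hash"]
    return True

log = []
append(log, {"dataset": "reviews-v1", "action": "ingested"})      # hypothetical events
append(log, {"dataset": "reviews-v1", "action": "deduplicated"})
valid_before = verify(log)

log[0]["payload"]["action"] = "relabeled"  # simulate someone rewriting history
valid_after = verify(log)

print(valid_before, valid_after)  # True False
```

Editing an old record breaks its stored hash, so quiet changes to the training data history become detectable.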

Ethical Implications of Generative AI

The widespread adoption of generative AI raises significant ethical concerns related to privacy and security:

- Privacy Risks: Generative AI models can generate realistic synthetic data, which can be used to create deepfakes or impersonate individuals, raising concerns about privacy violations and identity theft.

- Security Implications: The potential for misuse of generative AI, such as creating malicious content or manipulating public opinion, presents significant security risks. Research is needed to develop ethical guidelines and frameworks to mitigate these risks.