Top Data Quality Tools: Ensuring Data Accuracy and Reliability

Top data quality tools are essential in today’s data-driven world, where accurate and reliable information is crucial for informed decision-making. Imagine a world where your customer database is riddled with errors, your sales figures are inaccurate, or your marketing campaigns target the wrong audience.

This is the reality of poor data quality, which can lead to significant financial losses, damaged reputation, and missed opportunities.

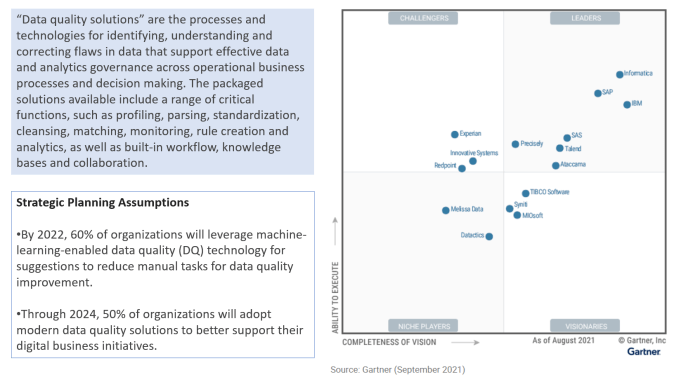

Data quality tools play a vital role in addressing these challenges by helping organizations identify, correct, and prevent data errors. These tools encompass a range of functionalities, including data profiling, cleansing, validation, monitoring, and governance. By leveraging these tools, businesses can ensure the integrity of their data, enabling them to make better decisions, optimize operations, and achieve their goals.

Introduction to Data Quality

In today’s data-driven world, the quality of data is paramount. Data is the lifeblood of organizations, driving decision-making, product development, and strategic planning. Poor data quality can lead to costly errors, inaccurate insights, and ultimately, business failure.

Data quality tools are essential for any business that relies on accurate information. They help you identify and correct errors, ensuring that your data is clean and reliable.

Ultimately, the best data quality tools are the ones that work seamlessly with your existing workflows and help you make the most of your data.

Significance of Data Quality

Data quality describes how well data serves the people and systems that use it: whether it is accurate, complete, consistent, timely, and valid. When these conditions hold, organizations can trust their data and base decisions on reliable information. Data quality is not a luxury; it is a necessity for any organization that relies on data to operate effectively.

Consequences of Poor Data Quality

The consequences of poor data quality can be significant and far-reaching. Some common examples include:

- Inaccurate reporting and analysis: Poor data quality can lead to misleading reports and analyses, resulting in incorrect conclusions and decisions. For example, a company might make a strategic investment based on inaccurate sales data, leading to significant financial losses.

- Lost revenue and opportunities: Poor data quality can lead to lost revenue and opportunities. For example, a company might miss out on potential customers due to inaccurate contact information or incomplete marketing data.

- Damaged reputation: Poor data quality can damage an organization’s reputation. For example, a company might be accused of fraud or misrepresentation due to inaccurate financial data.

- Increased costs: Poor data quality can increase costs for organizations. For example, companies might need to spend more time and resources cleaning and correcting data, leading to higher operational costs.

- Compliance issues: Poor data quality can lead to compliance issues. For example, companies might face fines or penalties for failing to meet regulatory requirements related to data accuracy and privacy.

Key Elements of Data Quality

The key elements of data quality are:

- Accuracy: Data is accurate if it is free from errors and reflects the true value of the information it represents. For example, a customer’s address should be accurate so that mail and packages reach them.

- Completeness: Data is complete if it contains all the necessary information. For example, a customer record should include the customer’s name, address, phone number, and email address.

- Consistency: Data is consistent if it is uniform and follows established standards. For example, customer names should be spelled the same way across different databases and systems.

- Timeliness: Data is timely if it is up to date and relevant to the current context. For example, sales data should be updated regularly to reflect the latest trends and patterns.

- Validity: Data is valid if it conforms to predefined rules and constraints. For example, a customer’s age should fall within a plausible range, and a categorical field such as gender should contain only values from a predefined list.
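Several of these dimensions can be turned into simple ratios. The sketch below is a minimal Python illustration, assuming customer records held as dictionaries; the field names, the 0 to 120 age range, and the 180-day freshness window are hypothetical choices, not standards. Accuracy and consistency are left out because measuring them requires a trusted reference source or a second system to compare against.

```python
from datetime import date

# Hypothetical customer records; field names are illustrative assumptions.
customers = [
    {"name": "Ana Diaz", "email": "ana@example.com", "phone": "5551234567",
     "age": 34, "updated": date(2024, 5, 1)},
    {"name": "Ben Okoro", "email": None, "phone": "5559876543",
     "age": 151, "updated": date(2023, 1, 15)},
    {"name": "Chen Li", "email": "chen@example.com", "phone": None,
     "age": 28, "updated": date(2024, 4, 20)},
]

REQUIRED_FIELDS = ("name", "email", "phone")  # assumed completeness rule
AGE_RANGE = (0, 120)                           # assumed validity rule
MAX_STALENESS_DAYS = 180                       # assumed timeliness rule


def completeness(records):
    """Share of required fields that are populated across all records."""
    filled = sum(1 for r in records for f in REQUIRED_FIELDS if r.get(f))
    return filled / (len(records) * len(REQUIRED_FIELDS))


def validity(records):
    """Share of records whose age lies inside the allowed range."""
    low, high = AGE_RANGE
    ok = sum(1 for r in records
             if r.get("age") is not None and low <= r["age"] <= high)
    return ok / len(records)


def timeliness(records, today):
    """Share of records updated within the freshness window."""
    fresh = sum(1 for r in records
                if (today - r["updated"]).days <= MAX_STALENESS_DAYS)
    return fresh / len(records)


today = date(2024, 6, 1)
print(f"completeness: {completeness(customers):.2f}")      # 7 of 9 fields filled
print(f"validity:     {validity(customers):.2f}")          # age 151 fails the range
print(f"timeliness:   {timeliness(customers, today):.2f}")  # the 2023 record is stale
```

Tracking these ratios per dataset over time gives a concrete baseline for the goals discussed later in this article.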

Types of Data Quality Tools

Data quality tools are essential for ensuring the accuracy, completeness, and consistency of data used in various business operations. These tools can be categorized based on their specific functionalities, helping organizations address different aspects of data quality.

Data Profiling Tools

Data profiling tools analyze data to understand its characteristics, such as data types, distributions, ranges, and patterns. They provide insights into the quality of data, identifying potential issues like missing values, duplicates, and inconsistencies.

- Data type validation: This checks that data conforms to expected types, such as integers, strings, or dates. For example, a tool can flag a phone number field that contains letters.

- Data range validation: This verifies that data falls within acceptable limits. For instance, a tool can detect if an age field contains values outside the realistic range of human ages.

- Data distribution analysis: This examines the distribution of data values, identifying outliers or unexpected patterns. For example, a tool can reveal unusual spikes in sales figures for a particular product.

- Data pattern detection: This identifies recurring patterns in data, helping to uncover errors or inconsistencies. For instance, a tool can find that most entries in a customer address field follow one format and flag the entries that deviate from it as potential data entry errors.
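A profiler summarizes each column before anyone writes rules for it. The following is a minimal standard-library sketch, not a description of how any particular product works: it counts missing values and observed types, reports the numeric range, flags outliers with the common rule of 1.5 times the interquartile range (one heuristic among several), and reduces strings to "shapes" (letters become `A`, digits become `9`) so that unusual formats stand out. The helper names and sample values are hypothetical.

```python
import re
import statistics
from collections import Counter


def value_shape(text):
    """Reduce a string to its shape: letters become 'A', digits become '9'."""
    return re.sub(r"[0-9]", "9", re.sub(r"[A-Za-z]", "A", text))


def profile_column(values):
    """Summarize one column: missing values, types, range, outliers, shapes."""
    present = [v for v in values if v not in (None, "")]
    profile = {
        "count": len(values),
        "missing": len(values) - len(present),
        "types": Counter(type(v).__name__ for v in present),
    }
    numbers = [v for v in present if isinstance(v, (int, float))]
    if len(numbers) >= 2:  # statistics.quantiles needs at least two points
        profile["min"], profile["max"] = min(numbers), max(numbers)
        q1, _, q3 = statistics.quantiles(numbers, n=4)
        fence = 1.5 * (q3 - q1)
        profile["outliers"] = [v for v in numbers
                               if v < q1 - fence or v > q3 + fence]
    strings = [v for v in present if isinstance(v, str)]
    if strings:
        profile["shapes"] = Counter(value_shape(s) for s in strings)
    return profile


ages = [34, 29, 41, 38, None, 250, 31, 36]
phones = ["555-123-4567", "555-987-6543", "5551234567", "555-CALL-NOW"]

print(profile_column(ages))    # 250 is reported as an outlier
print(profile_column(phones))  # '999-999-9999' dominates; the others deviate
```

Counting shapes rather than raw values keeps the summary small even for high-cardinality columns such as phone numbers.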

Data Cleansing Tools

Data cleansing tools address data quality issues by correcting, transforming, or removing erroneous data. They automate the process of cleaning data, improving its accuracy and consistency.


- Data standardization: This involves converting data to a consistent format, such as standardizing phone numbers or addresses. For example, a tool can automatically format phone numbers to a standard format like +1 (555) 555-5555.

- Data deduplication: This identifies and removes duplicate records, ensuring data integrity. For instance, a tool can detect and merge duplicate customer entries with the same name and address.

- Data imputation: This replaces missing values with reasonable estimates based on existing data. For example, a tool can impute missing customer ages based on the average age of other customers with similar characteristics.

- Data enrichment: This adds relevant information to existing data, improving its completeness. For example, a tool can enrich customer data by adding demographic information or social media profiles.
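The first three cleansing techniques can be sketched in plain Python. This is a minimal illustration under stated assumptions: phone numbers are US numbers, duplicates are matched on a normalized name plus address (dedicated tools typically add fuzzy matching), and missing ages are filled with the mean age of the same customer segment, where `segment` is a hypothetical field standing in for "similar characteristics". Enrichment is omitted because it depends on an external data source.

```python
import re
import statistics


def standardize_us_phone(raw):
    """Format a US number as +1 (555) 555-5555; return None if unparseable."""
    digits = re.sub(r"\D", "", raw or "")
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]  # drop the country code
    if len(digits) != 10:
        return None
    return f"+1 ({digits[:3]}) {digits[3:6]}-{digits[6:]}"


def _normalize(text):
    """Lowercase and collapse whitespace so trivial variations compare equal."""
    return " ".join(text.lower().split())


def deduplicate(records):
    """Keep the first record per (name, address); fill its gaps from duplicates."""
    merged = {}
    for rec in records:
        key = (_normalize(rec["name"]), _normalize(rec["address"]))
        if key not in merged:
            merged[key] = dict(rec)
            continue
        for field, value in rec.items():
            if merged[key].get(field) is None:
                merged[key][field] = value
    return list(merged.values())


def impute_age(records):
    """Fill missing ages with the mean age of other records in the same segment."""
    ages_by_segment = {}
    for rec in records:
        if rec.get("age") is not None:
            ages_by_segment.setdefault(rec["segment"], []).append(rec["age"])
    for rec in records:
        if rec.get("age") is None and rec["segment"] in ages_by_segment:
            rec["age"] = statistics.mean(ages_by_segment[rec["segment"]])
    return records


raw = [
    {"name": "Ana Diaz", "address": "12 Oak St", "phone": "555.123.4567", "age": 34, "segment": "retail"},
    {"name": "ana  diaz", "address": "12 oak st", "phone": None, "age": None, "segment": "retail"},
    {"name": "Ben Okoro", "address": "9 Elm Ave", "phone": "1-555-987-6543", "age": None, "segment": "retail"},
    {"name": "Dee Park", "address": "5 Birch Ln", "phone": "555 444 5555", "age": 30, "segment": "retail"},
    {"name": "Chen Li", "address": "3 Pine Rd", "phone": "(555) 222-3333", "age": 40, "segment": "wholesale"},
]

for rec in raw:
    rec["phone"] = standardize_us_phone(rec["phone"])
clean = impute_age(deduplicate(raw))
for rec in clean:
    print(rec)  # 4 records remain; Ben's age comes from the retail mean
```

The order matters: standardizing first means duplicates that differ only in formatting collapse to the same values, and imputing last avoids averaging in duplicated rows.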

Data Validation Tools

Data validation tools ensure that data conforms to predefined rules and constraints. They verify the accuracy and consistency of data before it is processed or stored, preventing errors from propagating further.

- Data format validation: This verifies that data meets specific format requirements, such as date formats or email addresses. For example, a tool can check that an email field follows the standard format of “name@example.com”.

- Data consistency validation: This ensures that data is consistent across different sources or systems. For example, a tool can verify that customer names are consistent across the sales and marketing databases.

- Data integrity validation: This checks for data completeness and accuracy, ensuring that all required fields are populated and that values are within acceptable ranges. For example, a tool can validate that a field for order quantity is not negative.
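Validation rules are often written as small functions that return a list of violations, so an empty list means the record passed. The sketch below is a minimal Python example of the three checks described above; the record fields, the hypothetical function names, and the deliberately loose email pattern (one `@`, no spaces, a dot in the domain) are assumptions, and production email validation is usually stricter or delegated to a dedicated library.

```python
import re

# Deliberately loose pattern: something@something.something, no spaces.
EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def validate_order(order):
    """Return a list of rule violations for one order (empty list = valid)."""
    errors = []
    if not EMAIL_PATTERN.fullmatch(order.get("email") or ""):
        errors.append("email: invalid format")
    quantity = order.get("quantity")
    if not isinstance(quantity, int) or quantity < 0:
        errors.append("quantity: must be a non-negative integer")
    for field in ("order_id", "customer_id"):
        if not order.get(field):
            errors.append(f"{field}: required field is empty")
    return errors


def inconsistent_names(sales, marketing):
    """Customer IDs whose names differ between two systems (case-insensitive)."""
    return sorted(
        cid for cid in sales.keys() & marketing.keys()
        if sales[cid].strip().lower() != marketing[cid].strip().lower()
    )


bad_order = {"order_id": "A-1", "customer_id": "C-9",
             "email": "ana@example", "quantity": -2}
good_order = {"order_id": "A-2", "customer_id": "C-9",
              "email": "ana@example.com", "quantity": 3}
sales = {"C-1": "Ana Diaz", "C-2": "Ben Okoro", "C-3": "Chen Li"}
marketing = {"C-1": "ana diaz", "C-2": "Benjamin Okoro", "C-4": "Dee Park"}

print(validate_order(bad_order))   # email and quantity violations
print(validate_order(good_order))  # []
print(inconsistent_names(sales, marketing))  # ['C-2']
```

Returning every violation, rather than stopping at the first, makes the output usable for both rejection decisions and quality reports.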

Data Monitoring Tools

Data monitoring tools continuously track data quality metrics, identifying potential issues and providing alerts in real time. They help organizations proactively maintain data quality by monitoring data sources, processes, and systems.

- Data quality metrics monitoring: This tracks key data quality metrics, such as accuracy, completeness, and consistency. For example, a tool can monitor the percentage of missing values in a customer database.

- Data drift detection: This identifies changes in data distributions or patterns over time, indicating potential issues with data quality. For example, a tool can detect a significant increase in the number of invalid email addresses in a customer database.

- Data lineage tracking: This traces the origin and transformations of data, helping to identify the root cause of data quality issues. For example, a tool can track how a customer’s name was changed during a data migration process.
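At its simplest, monitoring means computing the same metrics on each new batch and comparing them with a baseline. The sketch below covers the first two bullets, assuming batches of dictionary records; the 10-percentage-point tolerance and the loose email pattern are illustrative choices, and comparing a single rate is a much simpler stand-in for the statistical drift tests that dedicated tools may use. Lineage tracking is left out because it depends on pipeline metadata.

```python
import re

EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")  # deliberately loose


def missing_rate(records, field):
    """Share of records where `field` is None or empty."""
    return sum(1 for r in records if r.get(field) in (None, "")) / len(records)


def invalid_email_rate(records):
    """Share of records whose email is missing or fails the pattern."""
    bad = sum(1 for r in records
              if not EMAIL_PATTERN.fullmatch(r.get("email") or ""))
    return bad / len(records)


def check_drift(baseline, current, metric, tolerance):
    """Return an alert message if `metric` rose more than `tolerance` above baseline."""
    before, after = metric(baseline), metric(current)
    if after - before > tolerance:
        return f"ALERT: {metric.__name__} rose from {before:.0%} to {after:.0%}"
    return None


baseline = [{"email": "a@x.com"}, {"email": "b@x.com"},
            {"email": "c@x.com"}, {"email": "bad"}]
current = [{"email": "a@x.com"}, {"email": "bad"},
           {"email": None}, {"email": "also bad"}]

print(f"missing emails now: {missing_rate(current, 'email'):.0%}")
print(check_drift(baseline, current, invalid_email_rate, tolerance=0.10))
```

Passing the metric as a function keeps the drift check generic, so the same comparison can be reused for completeness, validity, or any other ratio.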

Data Governance Tools

Data governance tools establish and enforce data quality policies and standards. They provide a framework for managing data quality, ensuring compliance with regulations and business requirements.

- Data quality policy definition: This involves defining data quality standards and policies for different data types and business processes. For example, a policy might specify acceptable levels of missing values for customer data.

- Data quality rule enforcement: This enforces data quality rules through automated checks and validation processes. For example, a tool can enforce a rule that requires all customer addresses to be validated against a geocoding service.

- Data quality reporting and auditing: This provides reports and audits on data quality performance, tracking progress and identifying areas for improvement. For example, a tool can generate reports on the number of data quality violations detected over time.
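Expressing a policy as data makes it both enforceable by code and auditable over time. The sketch below is a minimal illustration, assuming the policy only limits the share of missing values per field; the `POLICY` thresholds, dataset name, and batch contents are hypothetical, and violations are logged per reporting period so they can be counted for trend reports.

```python
from collections import Counter

# Hypothetical policy: maximum share of missing values allowed per field.
POLICY = {
    "customers": {"email": 0.05, "phone": 0.20},
}


def enforce_policy(dataset, records, policy=POLICY):
    """Return one violation per field whose missing-value share exceeds its limit."""
    violations = []
    for field, limit in policy.get(dataset, {}).items():
        share = sum(1 for r in records if not r.get(field)) / len(records)
        if share > limit:
            violations.append({"dataset": dataset, "field": field,
                               "missing": share, "limit": limit})
    return violations


def violations_by_period(audit_log):
    """Count logged violations per reporting period."""
    return Counter(entry["period"] for entry in audit_log)


may_batch = [
    {"email": "a@x.com", "phone": "555-0100"},
    {"email": None, "phone": "555-0101"},
    {"email": "c@x.com", "phone": "555-0102"},
    {"email": "d@x.com", "phone": "555-0103"},
]
june_batch = [
    {"email": "a@x.com", "phone": None},
    {"email": None, "phone": "555-0101"},
    {"email": "c@x.com", "phone": None},
    {"email": "d@x.com", "phone": "555-0103"},
]

audit_log = []
for period, batch in (("2024-05", may_batch), ("2024-06", june_batch)):
    for violation in enforce_policy("customers", batch):
        audit_log.append({**violation, "period": period})

print(violations_by_period(audit_log))  # violations per month, for trend reporting
```

Keeping the thresholds in a separate structure means data owners can review and change the policy without touching the enforcement code.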

Evaluating Data Quality Tools

Choosing the right data quality tool is crucial for ensuring the accuracy, completeness, and consistency of your data. It’s essential to consider various factors when evaluating different options. This evaluation helps you select a tool that aligns with your specific needs, budget, and technical expertise.


Criteria for Evaluating Data Quality Tools

Evaluating data quality tools requires a thorough analysis of their capabilities, features, and suitability for your specific requirements. Key criteria include:

- Cost: Data quality tools come with varying pricing models, from fixed monthly subscriptions to pay-per-use options. Consider your budget and the scale of your data quality initiatives when evaluating cost.

- Ease of Use: The tool should be user-friendly, with an intuitive interface and clear documentation. A tool that requires extensive technical expertise may not be suitable for all users within your organization.

- Integration Capabilities: Seamless integration with your existing data sources, data pipelines, and other business intelligence tools is crucial. Look for tools that offer robust connectors and APIs for smooth integration.

- Scalability: The tool should be able to handle the volume and complexity of your data. Consider your future data growth and ensure the tool can scale accordingly.

- Support: Look for tools that offer comprehensive support, including documentation, tutorials, and responsive customer service. Reliable support can be essential for resolving issues and maximizing the tool’s effectiveness.
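These criteria are often combined in a weighted scoring matrix. The sketch below shows the arithmetic only: the weights and the 1 to 5 scores for "Tool A" and "Tool B" are hypothetical placeholders, not ratings of any product in the comparison table, and each organization would set its own.

```python
# Hypothetical weights reflecting one organization's priorities (they sum to 1.0).
WEIGHTS = {"cost": 0.25, "ease_of_use": 0.20, "integration": 0.25,
           "scalability": 0.15, "support": 0.15}

# Hypothetical 1-5 scores from an internal evaluation; not real product ratings.
SCORES = {
    "Tool A": {"cost": 4, "ease_of_use": 5, "integration": 3,
               "scalability": 3, "support": 4},
    "Tool B": {"cost": 2, "ease_of_use": 3, "integration": 5,
               "scalability": 5, "support": 4},
}


def weighted_score(scores, weights=WEIGHTS):
    """Sum of each criterion's score multiplied by its weight."""
    return sum(weights[criterion] * scores[criterion] for criterion in weights)


ranking = sorted(SCORES, key=lambda tool: weighted_score(SCORES[tool]),
                 reverse=True)
for tool in ranking:
    print(f"{tool}: {weighted_score(SCORES[tool]):.2f}")
```

Close totals, as here, are a signal to revisit the weights or run a trial with real data rather than to treat the top score as decisive.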

Comparing Popular Data Quality Tools

Here’s a table comparing some popular data quality tools based on the criteria discussed above:

| Tool | Cost | Ease of Use | Integration Capabilities | Scalability | Support |
|---|---|---|---|---|---|
| Trifacta Wrangler | Subscription-based | User-friendly interface | Integrates with various data sources and platforms | Scalable for large datasets | Comprehensive documentation and support |
| Alation | Subscription-based | Intuitive interface | Integrates with data catalogs and governance platforms | Scalable for enterprise-level deployments | Dedicated customer support |
| Dataiku | Subscription-based | Visual interface with drag-and-drop functionality | Integrates with various data sources and tools | Scalable for large-scale data projects | Extensive documentation and community support |
| Paxata | Subscription-based | Intuitive and user-friendly interface | Integrates with various data sources and platforms | Scalable for enterprise-level deployments | Dedicated customer support and training |
| Informatica PowerCenter | Perpetual license with annual maintenance | Requires technical expertise | Integrates with various data sources and platforms | Highly scalable for large-scale data transformations | Extensive documentation and support services |

Selecting the Right Data Quality Tool

The best data quality tool for your needs depends on your specific requirements, budget, and technical expertise. Here are some considerations:

- Data Volume and Complexity: If you have a large volume of complex data, you’ll need a tool that can handle it efficiently. Tools like Informatica PowerCenter and Dataiku are well-suited for such scenarios.

- Integration Needs: Consider the integration requirements with your existing data sources, pipelines, and other tools. Tools like Alation and Trifacta Wrangler offer strong integration capabilities.

- User Skills: If you have a team with limited technical expertise, a user-friendly tool like Trifacta Wrangler or Paxata would be a better choice.

- Budget: Evaluate the cost of different tools and choose one that aligns with your budget constraints. Some tools offer free trials or limited-time discounts.

Implementing Data Quality Tools

Successfully implementing data quality tools requires a strategic approach and a thorough understanding of your organization’s data landscape. It involves a series of steps that ensure the chosen tools effectively address your specific data quality challenges and align with your overall data management goals.

Steps Involved in Implementing Data Quality Tools

Implementing data quality tools involves a systematic process that ensures successful integration within your organization. The following steps provide a comprehensive framework:

- Define Data Quality Goals and Metrics: Begin by clearly defining your data quality objectives and the metrics that will measure their achievement. This involves identifying the specific data quality problems you need to solve, the dimensions they affect (such as accuracy, completeness, consistency, and timeliness), and quantifiable targets for improvement. For example, you might aim to reduce data errors by 20% or improve the timeliness of data updates by 10%.

- Identify and Prioritize Data Sources: Determine the critical data sources that require data quality improvement efforts. Prioritize these sources based on their importance to business operations, the severity of data quality issues, and the potential impact of data quality improvement. This helps focus resources on areas where data quality improvements will have the most significant impact.

- Select and Evaluate Data Quality Tools: Conduct a thorough evaluation of available data quality tools, considering factors such as functionality, compatibility with your existing infrastructure, cost, and ease of use. It’s crucial to select tools that align with your specific data quality goals and address the identified data quality issues.

- Develop a Data Quality Management Plan: Create a comprehensive plan that outlines the implementation strategy, timelines, responsibilities, and resource allocation. This plan should detail how data quality tools will be integrated into your existing data management processes, including data ingestion, transformation, and analysis.

- Implement and Configure Data Quality Tools: Once you’ve chosen your data quality tools, implement them according to the plan and configure them to meet your specific data quality requirements. This involves defining data quality rules, establishing data validation processes, and setting up monitoring and reporting mechanisms.

- Test and Validate Data Quality Improvements: Conduct thorough testing to validate the effectiveness of the implemented data quality tools. This involves verifying that the tools accurately identify and address data quality issues and that the expected data quality improvements are achieved.

- Monitor and Evaluate Data Quality Performance: Continuously monitor the performance of the implemented data quality tools and track the progress toward your data quality goals. Regularly review the data quality metrics and make adjustments to the tools or processes as needed to ensure ongoing improvement.
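The first, sixth, and last steps all hinge on comparing a measured metric with a quantified target. The sketch below is a minimal illustration, assuming a "lower is better" metric such as the share of records that fail validation; `QualityGoal` and `error_rate` are hypothetical names, the 20% reduction mirrors the example in the first step, and the record counts are invented.

```python
from dataclasses import dataclass


@dataclass
class QualityGoal:
    """A measurable target for a metric where lower values are better."""
    metric: str
    baseline: float          # value measured before the rollout
    target_reduction: float  # relative reduction; 0.20 means "20% below baseline"

    def target_value(self):
        return self.baseline * (1 - self.target_reduction)

    def is_met(self, current):
        return current <= self.target_value()


def error_rate(failed_records, total_records):
    """Share of records that failed at least one validation rule."""
    return failed_records / total_records


goal = QualityGoal("record error rate",
                   baseline=error_rate(500, 10_000),  # 5% before the rollout
                   target_reduction=0.20)
after = error_rate(380, 10_000)  # measured after the tools were deployed
print(f"target <= {goal.target_value():.2%}, "
      f"measured {after:.2%}, met: {goal.is_met(after)}")
```

Recording the baseline before implementation is what makes the later comparison meaningful; without it, a relative target such as "20% fewer errors" cannot be checked.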

Best Practices for Data Quality Management and Governance

Effective data quality management and governance are crucial for maintaining high data quality standards and ensuring that data is reliable and trustworthy. The following best practices can help organizations establish a robust data quality framework:

- Establish a Data Quality Policy: Develop a clear and comprehensive data quality policy that outlines the organization’s commitment to data quality, defines data quality standards, and establishes responsibilities for data quality management. This policy should be communicated to all stakeholders and enforced consistently.

- Implement a Data Quality Framework: Create a structured framework that defines the processes, procedures, and tools for managing data quality. This framework should include guidelines for data collection, data validation, data cleansing, and data monitoring.

- Promote Data Quality Awareness: Raise awareness about data quality among all stakeholders, including data owners, data users, and data analysts. This can be achieved through training programs, workshops, and regular communication about data quality best practices.

- Establish Data Quality Metrics: Define clear and measurable data quality metrics to track the performance of data quality initiatives. These metrics should be aligned with the organization’s data quality goals and provide insights into the effectiveness of data quality improvement efforts.

- Implement Data Quality Monitoring and Reporting: Establish a system for continuously monitoring data quality and generating regular reports on data quality performance. This system should provide insights into data quality trends, identify potential issues, and enable timely corrective actions.

Challenges and Considerations Associated with Data Quality Tool Implementation

Implementing data quality tools can present several challenges and considerations that organizations need to address effectively:

- Data Complexity and Heterogeneity: Organizations often deal with large and complex data sets from multiple sources, with varying data formats and structures. This heterogeneity can pose challenges for data quality tool implementation, requiring careful planning and configuration to ensure effective data processing and analysis.

- Integration with Existing Systems: Integrating data quality tools with existing data management systems and applications can be complex and time-consuming. Organizations need to ensure compatibility and seamless data flow between different systems to avoid data inconsistencies and errors.

- Data Governance and Ownership: Establishing clear data governance policies and defining data ownership responsibilities are crucial for successful data quality management. This involves identifying data stewards who are responsible for ensuring the quality of specific data sets and implementing data quality controls.

- Resource Constraints: Implementing data quality tools can require significant investment in terms of resources, including personnel, technology, and training. Organizations need to allocate sufficient resources to ensure the successful implementation and ongoing maintenance of data quality tools.

- Change Management: Implementing data quality tools can require changes to existing data management processes and workflows. Effective change management strategies are essential to ensure that users are adequately trained and supported during the transition to new data quality practices.
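Heterogeneous sources often disagree on something as basic as date formats. The sketch below is a minimal illustration, assuming each source's format is known and recorded up front (the source names and formats in `SOURCE_DATE_FORMATS` are hypothetical). Parsing with an explicit per-source format avoids guessing, which matters because a value like 03/04/2024 means March 4 in one convention and April 3 in another.

```python
from datetime import datetime

# Hypothetical per-source date formats, documented once per source system.
SOURCE_DATE_FORMATS = {
    "crm": "%m/%d/%Y",             # US-style month/day/year
    "billing": "%d.%m.%Y",         # day.month.year
    "web": "%Y-%m-%dT%H:%M:%S",    # ISO 8601 timestamp without time zone
}


def to_iso_date(source, raw):
    """Parse a source-specific date string and return it as YYYY-MM-DD."""
    fmt = SOURCE_DATE_FORMATS[source]  # KeyError signals an undocumented source
    return datetime.strptime(raw, fmt).date().isoformat()


print(to_iso_date("crm", "03/04/2024"))           # March 4, 2024
print(to_iso_date("billing", "04.03.2024"))       # also March 4, 2024
print(to_iso_date("web", "2024-03-04T15:30:00"))  # time of day dropped
```

Failing loudly on an unknown source, rather than falling back to a guess, keeps silently misparsed dates out of downstream systems.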

Data Quality Best Practices

Data quality is essential for any organization that relies on data-driven decisions. Poor data quality can lead to inaccurate insights, flawed business strategies, and significant financial losses. Implementing a robust data quality framework ensures that your data is accurate, complete, consistent, and timely, ultimately driving better business outcomes.

Organizations can follow several data quality best practices to ensure the accuracy and reliability of their data.

- Establish clear data quality standards and definitions. Clearly define data quality metrics and thresholds for different data types and business processes. This ensures everyone understands the desired level of data quality and provides a baseline for measurement and improvement.

- Implement data validation and cleansing processes. Regularly validate data against predefined rules and cleanse data to correct errors, remove duplicates, and ensure consistency. This involves using data quality tools and techniques to identify and address data issues proactively.

- Promote data governance and accountability. Establish clear roles and responsibilities for data quality, assigning ownership for data accuracy and integrity. This fosters a culture of data quality awareness and encourages data stewardship throughout the organization.

- Implement data quality monitoring and reporting. Regularly monitor data quality metrics to track progress and identify areas for improvement. This involves establishing dashboards and reports to visualize data quality trends and highlight potential issues.

- Invest in data quality training and education. Provide training and education to data users and stakeholders on data quality principles, best practices, and the importance of data integrity. This fosters a data-driven culture and empowers individuals to contribute to data quality improvement.

- Embrace data quality automation. Leverage data quality tools and technologies to automate data validation, cleansing, and monitoring tasks. This reduces manual effort, improves efficiency, and enables continuous data quality improvement.
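One common way to automate these checks is a "quality gate" that runs before data is loaded and stops the pipeline when a blocking rule fails. The sketch below assumes batches of dictionary records and uses hypothetical check names and a hypothetical `DataQualityError` exception; orchestration tools and data quality frameworks provide their own equivalents, which are not shown here.

```python
class DataQualityError(Exception):
    """Raised when a batch fails one or more blocking quality checks."""


# Hypothetical blocking checks: each takes a batch and returns True if it passes.
CHECKS = {
    "not_empty": lambda batch: len(batch) > 0,
    "no_negative_quantity": lambda batch: all(r["quantity"] >= 0 for r in batch),
    "order_id_present": lambda batch: all(r.get("order_id") for r in batch),
}


def quality_gate(batch, checks=CHECKS):
    """Return the batch unchanged if every check passes; otherwise raise."""
    failed = sorted(name for name, check in checks.items() if not check(batch))
    if failed:
        raise DataQualityError(f"batch rejected; failed checks: {', '.join(failed)}")
    return batch


good = [{"order_id": "A-1", "quantity": 2}]
bad = [{"order_id": "", "quantity": -1}]

quality_gate(good)  # passes through unchanged
try:
    quality_gate(bad)
except DataQualityError as exc:
    print(exc)  # batch rejected; failed checks: no_negative_quantity, order_id_present
```

Raising an exception, instead of only logging, is what makes the gate blocking: the loading step that follows never sees a rejected batch.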

Examples of Successful Data Quality Initiatives

- A leading retailer implemented a data quality program to improve customer segmentation and personalization efforts. By addressing data inconsistencies and inaccuracies in customer data, the retailer was able to create more accurate customer profiles, leading to targeted marketing campaigns and a significant increase in customer engagement and sales.

- A financial institution implemented a data quality initiative to improve fraud detection and risk management. By cleaning and validating transaction data, the institution was able to identify fraudulent activities more effectively, reducing financial losses and enhancing customer trust.

- A healthcare provider implemented a data quality program to improve patient care and reduce medical errors. By ensuring the accuracy and completeness of patient medical records, the provider was able to provide more personalized and effective treatment, leading to better patient outcomes and reduced healthcare costs.