The AU AI Governance Debate: Navigating Ethical and Practical Challenges

Australia's AI governance debate is a critical conversation shaping the future of artificial intelligence in the country. As AI technologies rapidly advance and permeate sectors from healthcare to finance, the need for robust governance frameworks becomes increasingly apparent.

This debate centers on balancing the potential benefits of AI with the ethical and societal concerns that arise from its development and deployment.

This article examines the current state of AI in Australia, surveys the existing governance landscape, and highlights key challenges. We discuss the ethical dilemmas surrounding AI bias, data privacy, and the impact on employment. By analyzing stakeholder perspectives and exploring potential solutions, it aims to provide a clear understanding of the critical issues at play in Australia's AI governance debate.

The Rise of AI in Australia

Australia is rapidly embracing the transformative potential of artificial intelligence (AI), with its government, businesses, and research institutions actively investing in and developing AI technologies. The country’s strategic focus on AI is driven by its potential to boost economic growth, enhance productivity, and improve the quality of life for its citizens.

AI Development and Adoption in Australia

The Australian government recognizes AI as a key driver of future economic growth and has launched several initiatives to promote AI research, development, and adoption, including the National Artificial Intelligence Strategy, which outlines a plan to position Australia as a global leader in AI.

This strategy emphasizes the importance of ethical AI development, data sharing, and workforce upskilling to ensure a responsible and inclusive transition to an AI-powered future.

Prominent AI Initiatives and Applications

Australia has witnessed a surge in AI initiatives and applications across various sectors, demonstrating its commitment to leveraging AI for societal and economic progress. These initiatives are driven by both government and private sector investments, reflecting the growing recognition of AI’s transformative potential.

Government Initiatives

The Australian government has taken several steps to promote AI development and adoption, including:

- The National Artificial Intelligence Strategy, launched in 2019, provides a roadmap for Australia’s AI journey, outlining key priorities, including ethical AI development, data sharing, and workforce upskilling.

- The $1 billion investment in AI research and development through the National Collaborative Research Infrastructure Strategy (NCRIS) aims to strengthen Australia’s research capabilities and foster innovation in AI.

- The establishment of the Australian Institute for Machine Learning (AIML) at the University of Adelaide in 2018, a research institute focused on developing and applying AI technologies to real-world problems.

AI Applications in Different Sectors

AI is transforming various sectors in Australia, with notable applications in:

- Healthcare: AI is being used to improve diagnosis, treatment, and patient care. For example, AI-powered systems are being used to analyze medical images for early detection of diseases, personalize treatment plans, and predict patient outcomes.

- Finance: AI is changing the financial industry by automating tasks, detecting fraud, and providing personalized financial advice. For example, AI-powered chatbots are being used to provide customer support, while AI algorithms are being used to assess creditworthiness and identify investment opportunities.

- Agriculture: AI is being used to optimize crop yields, improve resource management, and enhance food security. For example, AI-powered drones are being used to monitor crops and detect diseases, while AI algorithms are being used to predict weather patterns and optimize irrigation schedules.

- Education: AI is being used to personalize learning experiences, automate grading, and provide students with real-time feedback. For example, AI-powered tutors are being used to provide personalized instruction, while AI algorithms are being used to analyze student performance and identify areas for improvement.

Potential Economic and Social Impacts of AI in Australia

AI has the potential to significantly impact Australia’s economy and society, both positively and negatively. The potential benefits of AI include:

- Economic Growth: AI is expected to contribute significantly to Australia's economic growth by automating tasks, increasing productivity, and creating new jobs. The Australian government estimates that AI could contribute up to $2.3 trillion to the Australian economy by 2030.

- Job Creation: While AI may automate some existing jobs, it is also expected to create new jobs in fields such as AI development, data science, and AI ethics.

- Improved Quality of Life: AI has the potential to improve the quality of life for Australians by enhancing healthcare, education, and transportation.

However, there are also potential risks associated with AI, including:

- Job Displacement: AI may automate some existing jobs, leading to job displacement and unemployment.

- Bias and Discrimination: AI systems can inherit and amplify existing biases in data, leading to unfair or discriminatory outcomes.

- Privacy Concerns: AI systems collect and analyze large amounts of personal data, raising concerns about privacy and data security.

Governance Challenges in AI

The rapid advancement of artificial intelligence (AI) brings with it a range of ethical and societal concerns, demanding careful consideration of governance frameworks. As AI systems become increasingly sophisticated and integrated into various aspects of our lives, it’s crucial to address the potential risks and ensure responsible development and deployment.

Ethical Considerations in AI Development

Ethical considerations in AI development are paramount. These concerns encompass various aspects, including bias, transparency, accountability, and the potential for misuse.

- Bias in AI Algorithms: AI algorithms are trained on vast datasets, and if these datasets contain biases, the resulting AI systems can perpetuate and amplify these biases. This can lead to discriminatory outcomes in areas like hiring, lending, and criminal justice. For instance, facial recognition systems have been shown to exhibit racial bias, leading to misidentification and unfair treatment of individuals.

- Transparency and Explainability: AI systems, particularly those based on deep learning, can be complex and opaque, making it difficult to understand how they arrive at their decisions. This lack of transparency raises concerns about accountability and the potential for misuse. For example, in medical diagnosis, it’s crucial to understand the rationale behind an AI system’s recommendations to ensure patient safety and trust.

- Accountability for AI Actions: Determining who is responsible for the actions of AI systems, especially in cases of harm or error, presents a significant challenge. If an autonomous vehicle causes an accident, who is held accountable: the manufacturer, the programmer, or the user? Clear guidelines are needed to establish accountability and ensure justice.

- Potential for Misuse: AI technologies can be used for malicious purposes, such as creating deepfakes for disinformation or developing autonomous weapons systems that pose a threat to human safety. Effective governance frameworks are needed to mitigate these risks and prevent the misuse of AI.

AI-Related Controversies and Incidents

Several controversies and incidents involving AI have highlighted the urgent need for robust governance frameworks. These incidents demonstrate the real-world implications of AI development and deployment.

- The Cambridge Analytica Scandal: This incident involved the misuse of Facebook user data to target political advertising during the 2016 US presidential election. It raised concerns about data privacy, algorithmic manipulation, and the potential for AI to be used for political influence.

- The COMPAS Algorithm Controversy: The COMPAS (Correctional Offender Management Profiling for Alternative Sanctions) algorithm has been used by courts in a number of US jurisdictions to assess the risk of recidivism. A 2016 ProPublica analysis found that Black defendants who did not go on to reoffend were nearly twice as likely as White defendants to be misclassified as higher risk.

- Autonomous Weapon Systems: The development of autonomous weapons systems, also known as killer robots, raises serious ethical concerns. The potential for these systems to make life-or-death decisions without human intervention has sparked calls for international regulation and bans on their development and deployment.
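The racial bias reported for COMPAS is usually expressed as a gap in false positive rates: among people who did not reoffend, the share in each group that the tool labelled high risk. The sketch below shows that calculation on a tiny made-up dataset; the `Record` type, the group labels "A" and "B", and every data point are hypothetical and are not drawn from COMPAS or any real tool.

```python
from collections import defaultdict
from typing import Iterable, NamedTuple


class Record(NamedTuple):
    """One hypothetical record: group label, model's flag, actual outcome."""
    group: str
    predicted_high_risk: bool
    reoffended: bool


def false_positive_rates(records: Iterable[Record]) -> dict[str, float]:
    """Per-group false positive rate: share of non-reoffenders flagged high risk."""
    flagged = defaultdict(int)    # non-reoffenders the model flagged as high risk
    negatives = defaultdict(int)  # all non-reoffenders seen in each group
    for r in records:
        if not r.reoffended:
            negatives[r.group] += 1
            if r.predicted_high_risk:
                flagged[r.group] += 1
    return {g: flagged[g] / negatives[g] for g in negatives}


# Made-up illustrative data, not taken from any real risk-assessment tool.
records = [
    Record("A", True, False), Record("A", True, False), Record("A", False, False),
    Record("A", False, False), Record("A", True, True),
    Record("B", True, False), Record("B", False, False), Record("B", False, False),
    Record("B", False, False), Record("B", True, True),
]

rates = false_positive_rates(records)
print(rates)  # {'A': 0.5, 'B': 0.25}
```

In this toy data, group A's non-reoffenders are flagged at twice group B's rate, the same shape of disparity described above. A fuller audit would also look at measures such as false negative rates and calibration, which in general cannot all be equalized at once when groups have different base rates.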

Global Approaches to AI Governance

Various countries and international organizations are developing approaches to AI governance, reflecting diverse perspectives and priorities.

- The European Union's General Data Protection Regulation (GDPR): This regulation, which came into effect in 2018, establishes a comprehensive framework for data protection and privacy. Although it is not AI-specific, it applies whenever AI systems process personal data, and Article 22 restricts decisions based solely on automated processing that significantly affect individuals. It emphasizes data subject rights, transparency, and accountability in the processing of personal data by AI systems.

- China's "New Generation Artificial Intelligence Development Plan": This plan, issued by China's State Council in 2017, outlines China's ambition to become a global leader in AI. It emphasizes the importance of ethical AI development, data security, and the promotion of AI applications for social good. China has also issued guidelines for the ethical development of AI, focusing on principles such as fairness, transparency, and human oversight.

- The Organisation for Economic Co-operation and Development (OECD): The OECD has developed a set of AI principles that emphasize human-centered AI, responsible innovation, and the promotion of inclusive growth. These principles aim to provide guidance for governments, businesses, and researchers in developing and deploying AI responsibly.

Australian AI Governance Framework

Australia is actively working to develop a comprehensive and effective AI governance framework to address the challenges and opportunities presented by the rapid advancements in artificial intelligence. This framework aims to promote responsible and ethical AI development and deployment, ensuring that AI benefits society while mitigating potential risks.

Existing Framework

The Australian government has adopted a multi-pronged approach to AI governance, encompassing a range of policies, regulations, and guidelines. This framework is still evolving, with ongoing efforts to strengthen and refine its elements.

- AI Ethics Framework: The Australian government released the “AI Ethics Framework” in 2019, providing principles and guidelines for ethical AI development and use. These principles emphasize human-centered AI, fairness, transparency, accountability, and privacy. The framework encourages organizations to adopt these principles and develop their own AI ethics policies.

- Australia's Artificial Intelligence Action Plan: Released in 2021, the plan outlines the government's vision for AI in Australia, focusing on fostering innovation, building AI capabilities, and promoting responsible AI development. The plan emphasizes collaboration between government, industry, and research institutions to drive AI adoption and address ethical considerations.

- Data Protection Legislation: Australia’s Privacy Act 1988 and the Australian Privacy Principles provide a legal framework for data protection, which is crucial for responsible AI development and use. The Act requires organizations to collect, use, and disclose personal information in a fair and lawful manner, ensuring individuals’ privacy rights are protected.

- Consumer Law: The Australian Consumer Law (ACL) is relevant to AI systems that interact with consumers. The ACL prohibits misleading or deceptive conduct and requires businesses to provide accurate information about their products and services, including AI-powered ones. This ensures consumers are not misled by AI-generated content or recommendations.

- Sector-Specific Regulations: Several sectors, such as healthcare and finance, have specific regulations that apply to AI. For example, the Therapeutic Goods Administration (TGA) regulates AI-powered medical devices, ensuring their safety and efficacy. Similarly, the Australian Prudential Regulation Authority (APRA) oversees AI applications in the financial sector, addressing potential risks and ensuring financial stability.

Strengths and Weaknesses

The existing AI governance framework in Australia has strengths and weaknesses.

- Strengths:

  - The framework emphasizes ethical considerations, promoting responsible AI development and use.

  - It encourages collaboration between government, industry, and research institutions to foster innovation and address challenges.

  - Existing data protection laws provide a foundation for protecting individuals' privacy in the context of AI.

  - Sector-specific regulations address AI-related risks in specific industries.

- Weaknesses:

  - The framework is still evolving and lacks comprehensive legislation specifically addressing AI governance.

  - The enforcement mechanisms for ethical AI principles and guidelines are not well-defined, potentially leading to inconsistent implementation.

  - The framework needs to be more proactive in addressing emerging AI challenges, such as bias, discrimination, and job displacement.

Areas for Improvement

To effectively address the challenges and opportunities of AI, the Australian AI governance framework needs to be strengthened and further developed in several key areas.

- Comprehensive AI Legislation: The development of dedicated AI legislation would provide a clear legal framework for regulating AI development, deployment, and use. This legislation should address ethical considerations, liability, data privacy, and other critical issues.

- Enforcement Mechanisms: Clear and effective enforcement mechanisms are needed to ensure compliance with ethical principles and guidelines. This could involve establishing an independent AI regulator or empowering existing agencies to oversee AI activities.

- Addressing Bias and Discrimination: The framework should proactively address issues of bias and discrimination in AI systems. This could involve requiring organizations to conduct bias audits, develop mitigation strategies, and ensure fairness in AI-powered decision-making.

- Transparency and Explainability: Promoting transparency and explainability in AI systems is crucial for building trust and accountability. This could involve requiring organizations to provide clear explanations of how AI systems work and the basis for their decisions.

- Job Displacement: The framework should address the potential for job displacement caused by AI. This could involve investing in education and training programs to help workers adapt to the changing job market and exploring policies to support workers affected by AI automation.
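As one illustration of what a bias audit of a hiring tool might check, a common screening metric is the ratio of each group's selection rate to the highest group's rate. The US "four-fifths rule" treats a ratio below 0.8 as a signal of possible adverse impact. Nothing in the Australian framework described here prescribes this metric or threshold; the function names, the 0.8 cut-off as applied here, and the applicant figures below are all illustrative assumptions.

```python
def selection_rate(selected: int, applicants: int) -> float:
    """Fraction of a group's applicants that the screening system selected."""
    if applicants <= 0:
        raise ValueError("applicants must be positive")
    return selected / applicants


def adverse_impact_ratio(rates: dict[str, float]) -> dict[str, float]:
    """Each group's selection rate divided by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group has a positive selection rate")
    return {group: rate / best for group, rate in rates.items()}


# Hypothetical outcomes from an AI screening tool (illustrative numbers only).
rates = {
    "men": selection_rate(selected=60, applicants=100),   # 0.6
    "women": selection_rate(selected=36, applicants=90),  # 0.4
}
ratios = adverse_impact_ratio(rates)

# Assumed threshold: the US four-fifths (0.8) rule of thumb.
THRESHOLD = 0.8
flagged = [group for group, ratio in ratios.items() if ratio < THRESHOLD]
print(ratios)
print(flagged)  # ['women']
```

Here women are selected at two-thirds the rate of men, below the assumed 0.8 threshold, so the audit flags the tool for closer review. A ratio like this only signals a possible problem; deciding whether the disparity is unjustified still requires human judgment about the role and the data.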

Key Issues in the Debate

The development and deployment of AI in Australia, like elsewhere, are not without their challenges. These challenges extend beyond technical hurdles and delve into the very fabric of society, raising crucial questions about data privacy, ethical considerations, and the future of work.

Data Privacy and Security

Data is the lifeblood of AI, fueling its learning and decision-making capabilities. However, this dependence raises significant concerns about data privacy and security. The collection, storage, and use of vast amounts of personal data for AI training and application necessitate robust safeguards to protect individual rights and prevent misuse.

- Data breaches: AI systems often rely on large datasets, making them vulnerable to data breaches. A breach could expose sensitive personal information, leading to identity theft, financial loss, and reputational damage.

- Surveillance and privacy: The use of AI for facial recognition, surveillance, and predictive policing raises concerns about excessive surveillance and potential infringement on individual privacy.

- Data ownership and control: The question of who owns and controls the data used to train AI models is complex. Individuals may not be aware of how their data is being used, and they may lack control over its deletion or modification.

Ethical Considerations of AI Bias and Discrimination

AI systems learn from data, and if that data reflects existing biases in society, the AI system can perpetuate and even amplify those biases. This can lead to discriminatory outcomes in areas like hiring, lending, and criminal justice.

- Algorithmic bias: AI algorithms can be biased if the training data they are fed is biased. For example, if a hiring algorithm is trained on historical data that shows men are more likely to be hired for certain roles, the algorithm may perpetuate this bias and discriminate against women.

- Lack of transparency: The complexity of AI algorithms can make it difficult to understand how they reach their decisions, making it challenging to identify and address potential biases.

- Accountability: Determining who is responsible when an AI system makes a biased or discriminatory decision can be difficult. This raises questions about accountability and the need for ethical frameworks to govern AI development and deployment.

Impact of AI on Employment and the Future of Work

AI is automating tasks previously performed by humans, raising concerns about job displacement and the future of work in Australia. While AI can create new jobs, it also has the potential to displace existing jobs, especially those involving repetitive or routine tasks.

- Job displacement: AI is automating tasks in various sectors, from manufacturing and transportation to customer service and healthcare. This automation can lead to job losses, particularly in sectors with high levels of routine work.

- Skills gap: The rise of AI requires workers with new skills, such as data science, AI development, and AI ethics. There is a growing gap between the skills required for the jobs of the future and the skills possessed by the current workforce.

- Reskilling and upskilling: To mitigate the negative impact of AI on employment, governments and businesses need to invest in reskilling and upskilling programs to equip workers with the skills needed for the AI-driven economy.

Stakeholder Perspectives

The development and deployment of AI systems raise significant ethical, social, and economic considerations. These concerns are reflected in the diverse perspectives of various stakeholders involved in the AI governance debate. Understanding these perspectives is crucial for formulating effective and equitable AI governance frameworks.

Stakeholder Perspectives on AI Governance

This section examines the primary concerns and interests of key stakeholders involved in the AI governance debate in Australia. The table below provides a concise overview of these perspectives:

| Stakeholder | Primary Concerns | Interests |
|---|---|---|
| Government Agencies | | |
| Industry Leaders | | |
| Researchers | | |
| Civil Society Organizations | | |

Government’s Role in Regulating AI

The role of government in regulating AI is a subject of ongoing debate. Different perspectives exist on the level of government intervention necessary to ensure responsible and ethical AI development and deployment.

| Perspective | Role of Government | Rationale |
|---|---|---|
| Minimalist Approach | | |
| Moderate Approach | | |
| Maximalist Approach | | |

Potential Solutions and Recommendations

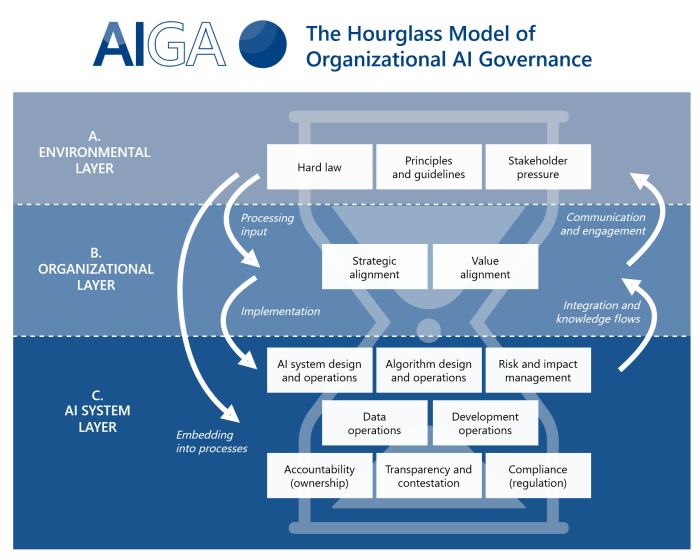

Navigating the complexities of AI governance requires a multifaceted approach that encompasses robust regulatory frameworks, ethical guidelines, and public awareness campaigns. Drawing inspiration from international best practices, this section explores potential solutions and recommendations for strengthening Australia’s AI governance framework.

Regulatory Frameworks

A comprehensive regulatory framework is essential for fostering responsible AI development and deployment. It should provide clear guidelines for businesses, researchers, and developers while ensuring ethical considerations are at the forefront.

- Establish a dedicated AI regulatory body: A dedicated agency, similar to the UK’s Centre for Data Ethics and Innovation, can provide oversight, guidance, and support for AI-related issues. This body could be responsible for developing and enforcing AI regulations, conducting research, and promoting best practices.

- Implement risk-based regulation: Instead of a one-size-fits-all approach, regulations should be tailored to the specific risks posed by different AI applications. High-risk AI systems, such as those used in critical infrastructure or healthcare, could be subject to stricter regulations and oversight.

- Promote transparency and accountability: Regulations should require companies to disclose information about their AI systems, including the data used, algorithms employed, and potential risks. This transparency fosters trust and accountability, enabling stakeholders to assess the potential impact of AI applications.

Ethical Guidelines

Ethical considerations should be embedded within the development and deployment of AI systems. Robust ethical guidelines can help ensure that AI is used responsibly and benefits society as a whole.

- Develop clear ethical principles for AI: These principles should address issues such as fairness, transparency, accountability, privacy, and non-discrimination. They can serve as a framework for guiding ethical decision-making in AI development and deployment.

- Establish ethical review boards: Independent ethical review boards can assess the ethical implications of AI projects before they are implemented. These boards should include experts from diverse backgrounds, such as ethics, law, social sciences, and technology.

- Promote public engagement in AI ethics: Encouraging public dialogue and participation in shaping AI ethics is crucial. This can involve public consultations, workshops, and educational programs to foster understanding and awareness of ethical considerations in AI.

Public Awareness Campaigns

Raising public awareness about AI is essential for fostering informed discussions and building trust in this rapidly evolving technology.

- Educate the public about AI: Public awareness campaigns can help demystify AI and explain its potential benefits and risks. Educational materials, workshops, and online resources can be used to disseminate information and foster understanding.

- Promote responsible AI use: Campaigns should highlight the importance of ethical AI development and deployment. This can involve showcasing examples of responsible AI applications and promoting best practices for using AI in a way that benefits society.

- Encourage public participation in AI governance: Engaging the public in discussions about AI governance can help shape policies and regulations that reflect societal values. This can involve public consultations, surveys, and online forums.

Best Practices in AI Governance

Examining successful AI governance frameworks from other countries and regions can provide valuable insights for Australia.

- The European Union’s General Data Protection Regulation (GDPR): The GDPR sets high standards for data privacy and protection, which are relevant to AI development and deployment. It emphasizes data minimization, consent, and the right to be forgotten, promoting responsible data handling practices.

- The UK’s Centre for Data Ethics and Innovation: This independent body provides guidance and support on data ethics and AI. It conducts research, develops best practices, and engages with stakeholders to promote responsible AI development and deployment.

- Singapore's Model AI Governance Framework: This framework outlines principles for responsible AI, including fairness, transparency, accountability, and human oversight. It encourages organizations to adopt a risk-based approach to AI governance, tailoring their practices to the specific risks posed by their AI systems.